Android and ChromeOS provide a variety of APIs to help you build apps that offer users an exceptional stylus experience. The MotionEvent class exposes information about stylus interaction with the screen, including stylus pressure, orientation, tilt, hover, and palm detection. Low-latency graphics and motion prediction libraries improve on-screen stylus rendering to provide a natural, pen-and-paper-like experience.

MotionEvent

The MotionEvent class represents user input interactions such as the position and movement of touch pointers on the screen. For stylus input, MotionEvent also exposes pressure, orientation, tilt, and hover data.

Event data

To access stylus MotionEvent objects, add the pointerInteropFilter modifier to a drawing surface. Implement a ViewModel class with a method that processes motion events, and pass that method as the onTouchEvent lambda of the pointerInteropFilter modifier:

@Composable
@OptIn(ExperimentalComposeUiApi::class)
fun DrawArea(modifier: Modifier = Modifier) {
    // viewModel is assumed to be available from the enclosing scope.
    Canvas(
        modifier = modifier
            .clipToBounds()
            .pointerInteropFilter {
                viewModel.processMotionEvent(it)
            }
    ) {
        // Drawing code here.
    }
}

A MotionEvent object provides data related to the following aspects of a UI event:

- Actions: Physical interaction with the device: touching the screen, moving a pointer over the screen surface, or hovering a pointer over the screen surface
- Pointers: Identifiers of objects interacting with the screen: finger, stylus, mouse
- Axis: Type of data: x and y coordinates, pressure, tilt, orientation, and hover (distance)

Actions

To implement stylus support, you need to understand what action the user is performing.

MotionEvent provides a wide variety of ACTION constants that define motion events. The most important actions for a stylus are the following:

| Action | Description |
|---|---|
| ACTION_DOWN, ACTION_POINTER_DOWN | Pointer has made contact with the screen. |
| ACTION_MOVE | Pointer is moving on the screen. |
| ACTION_UP, ACTION_POINTER_UP | Pointer is no longer in contact with the screen. |
| ACTION_CANCEL | The previous or current motion set should be canceled. |

Your app can perform tasks such as starting a new stroke when ACTION_DOWN occurs, drawing the stroke on ACTION_MOVE, and finishing the stroke when ACTION_UP is triggered.

The set of MotionEvent actions from ACTION_DOWN to ACTION_UP for a given pointer is called a motion set.
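The stroke lifecycle described above can be sketched in plain Kotlin. This is a minimal illustration, not the platform's API: StrokeBuilder and Point are hypothetical names, and the three constants only mirror the integer values of the corresponding MotionEvent constants so the sketch runs without the Android SDK.

```kotlin
// Values mirror MotionEvent.ACTION_DOWN, ACTION_UP, and ACTION_MOVE.
val ACTION_DOWN = 0
val ACTION_UP = 1
val ACTION_MOVE = 2

data class Point(val x: Float, val y: Float)

// Hypothetical helper: collects the points of one motion set into a stroke.
class StrokeBuilder {
    private var current: MutableList<Point>? = null
    val finished = mutableListOf<List<Point>>()

    fun onAction(action: Int, x: Float, y: Float) {
        when (action) {
            // Pointer touched the screen: start a new stroke.
            ACTION_DOWN -> current = mutableListOf(Point(x, y))
            // Pointer moved: extend the stroke in progress, if any.
            ACTION_MOVE -> current?.add(Point(x, y))
            // Pointer lifted: close the stroke and store it.
            ACTION_UP -> {
                current?.let { stroke ->
                    stroke.add(Point(x, y))
                    finished.add(stroke.toList())
                }
                current = null
            }
        }
    }
}
```

In an app, the action, x, and y arguments would come from the MotionEvent passed to your touch handler. A move that arrives without a preceding down is ignored.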

Pointers

Most screens are multi-touch: the system assigns a pointer to each finger, stylus, mouse, or other pointing object interacting with the screen. A pointer index lets you get axis information for a specific pointer, such as the position of the first finger touching the screen or the second.

Pointer indexes range from zero to the number of pointers returned by MotionEvent#getPointerCount() minus 1.

Axis values of the pointers can be accessed with the getAxisValue(axis, pointerIndex) method. When the pointer index is omitted, the system returns the value for the first pointer, pointer zero (0).

MotionEvent objects contain information about the type of pointer in use. You can get the pointer type by iterating through the pointer indexes and calling the getToolType(pointerIndex) method.

To learn more about pointers, see Handle multi-touch gestures.

Stylus inputs

You can filter for stylus inputs with TOOL_TYPE_STYLUS:

val isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex)

The stylus can also report that it is being used as an eraser with TOOL_TYPE_ERASER:

val isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex)

Stylus axis data

ACTION_DOWN and ACTION_MOVE provide axis data about the stylus, namely x and y coordinates, pressure, orientation, tilt, and hover.

To give access to this data, the MotionEvent API provides getAxisValue(int), where the parameter is any of the following axis identifiers:

| Axis | Return value of getAxisValue() |
|---|---|
| AXIS_X | X coordinate of a motion event. |
| AXIS_Y | Y coordinate of a motion event. |
| AXIS_PRESSURE | For a touchscreen or touchpad, the pressure applied by a finger, stylus, or other pointer. For a mouse or trackball, 1 if the primary button is pressed, 0 otherwise. |
| AXIS_ORIENTATION | For a touchscreen or touchpad, the orientation of a finger, stylus, or other pointer relative to the vertical plane of the device. |
| AXIS_TILT | The tilt angle of the stylus in radians. |
| AXIS_DISTANCE | The distance of the stylus from the screen. |

For example, MotionEvent.getAxisValue(AXIS_X) returns the x coordinate of the first pointer.

See also Handle multi-touch gestures.

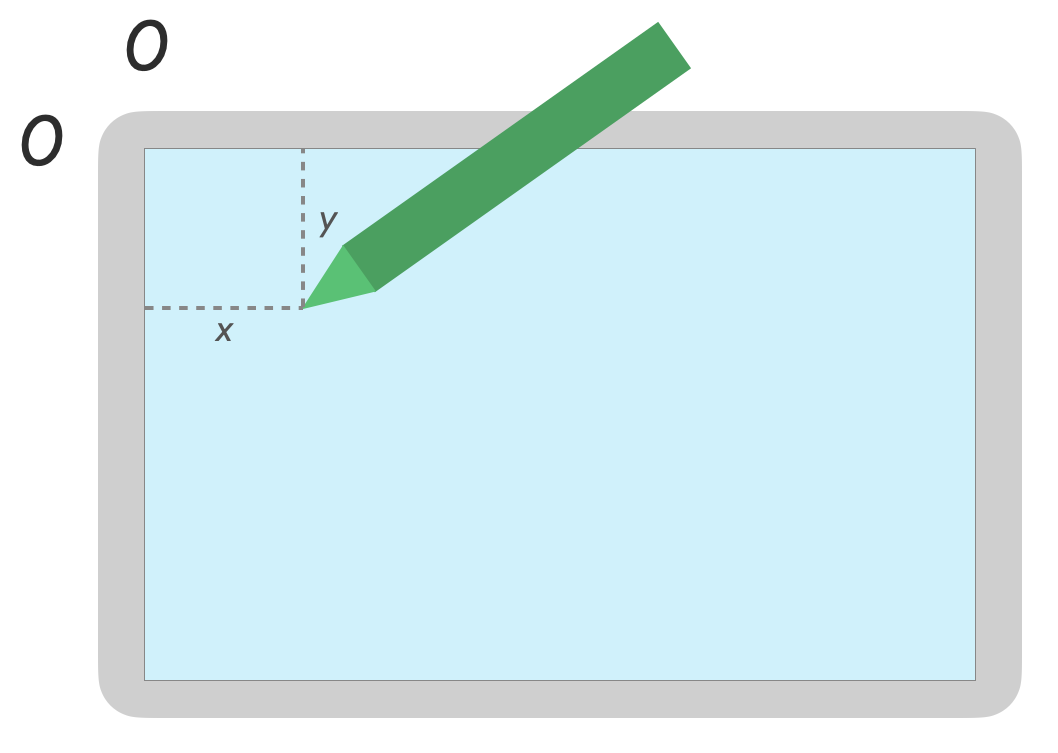

Position

You can retrieve the x and y coordinates of a pointer using the following calls:

- MotionEvent#getAxisValue(AXIS_X) or MotionEvent#getX()
- MotionEvent#getAxisValue(AXIS_Y) or MotionEvent#getY()

Pressure

You can retrieve the pointer pressure with MotionEvent#getAxisValue(AXIS_PRESSURE) or, for the first pointer, MotionEvent#getPressure().

For touchscreens and touchpads, the pressure value ranges from 0 (no pressure) to 1, but higher values can be returned depending on the screen calibration.
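Because calibration can push pressure above 1, it helps to clamp the value before using it. The sketch below maps pressure to a stroke width; strokeWidthForPressure and its default widths are hypothetical choices for illustration, not part of the MotionEvent API.

```kotlin
// Hypothetical mapping from an AXIS_PRESSURE reading to a stroke width in pixels.
fun strokeWidthForPressure(
    pressure: Float,
    minWidth: Float = 1f,
    maxWidth: Float = 12f
): Float {
    // Calibration can return values above 1, so clamp to the nominal 0..1 range.
    val clamped = pressure.coerceIn(0f, 1f)
    // Linear interpolation between the thinnest and the thickest stroke.
    return minWidth + (maxWidth - minWidth) * clamped
}
```

A linear mapping is the simplest option; a drawing app might prefer a curve that gives finer control at light pressure.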

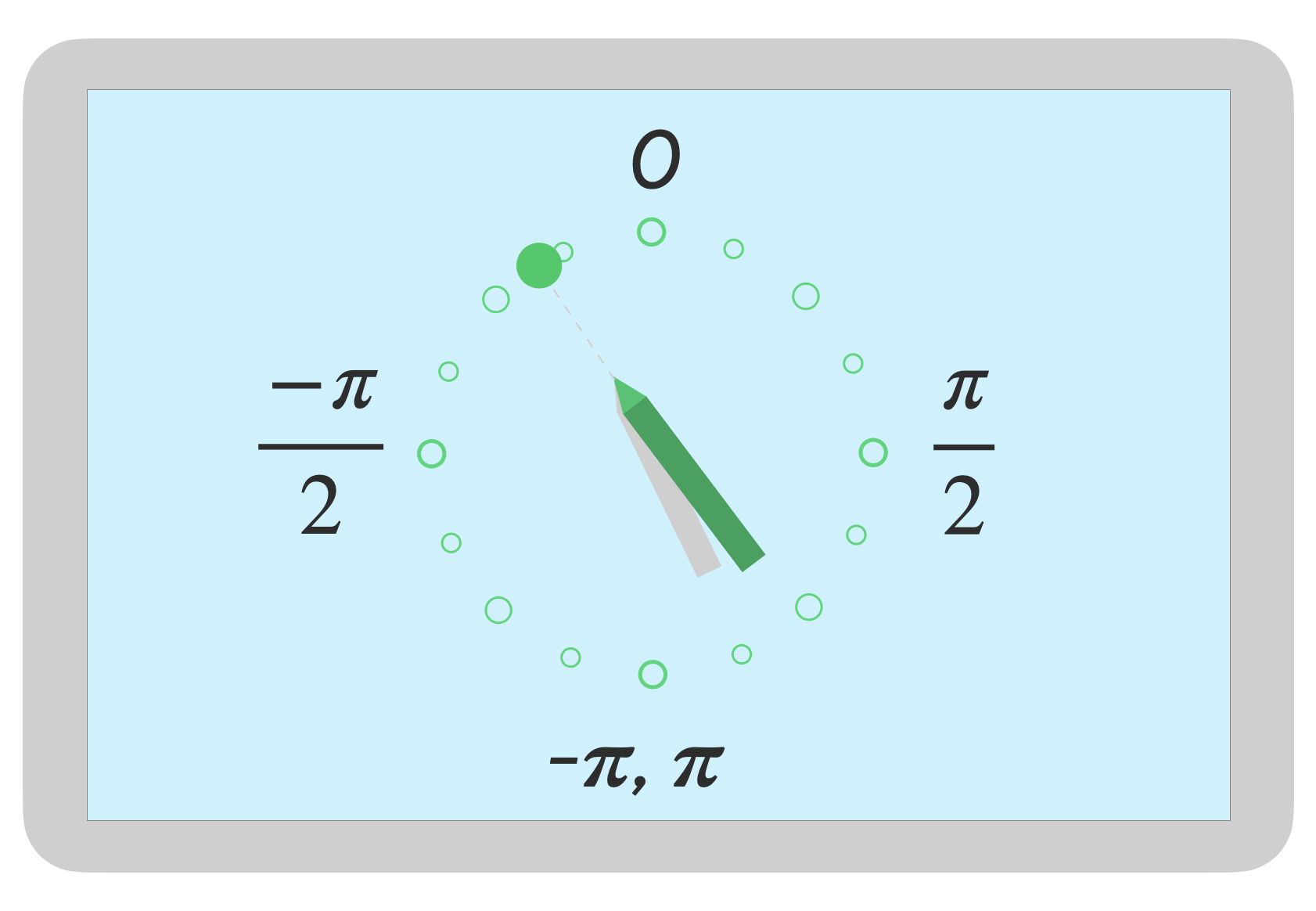

Orientation

Orientation indicates which direction the stylus is pointing.

Pointer orientation can be retrieved using getAxisValue(AXIS_ORIENTATION) or getOrientation() (for the first pointer).

For a stylus, the orientation is returned as a radian value from 0 to pi (π) clockwise or from 0 to -pi counterclockwise.

Orientation lets you implement a real-life brush. For example, if the stylus represents a flat brush, the width of the flat brush depends on the stylus orientation.

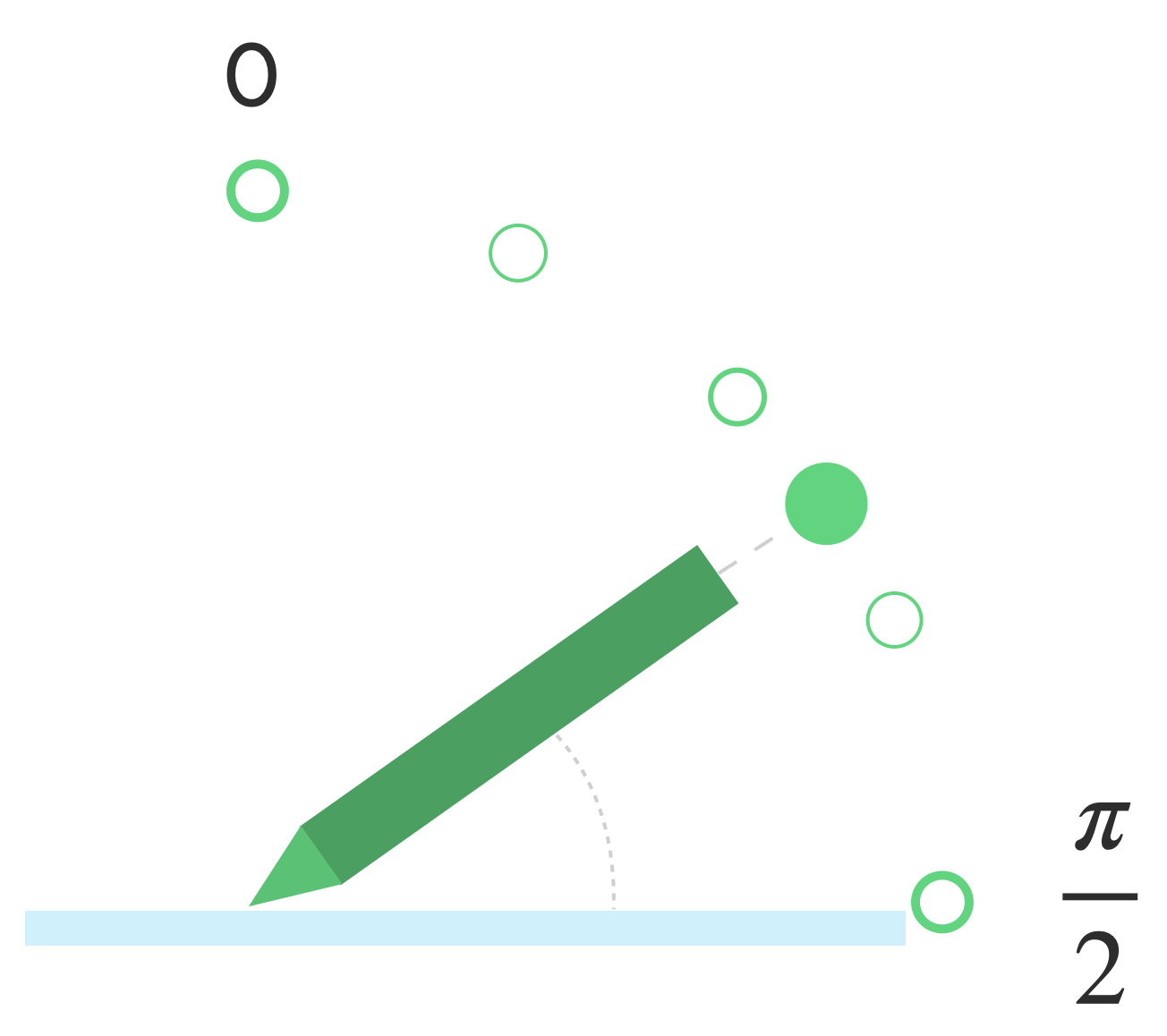

Tilt

Tilt measures the inclination of the stylus relative to the screen.

Tilt returns the positive angle of the stylus in radians, where zero is perpendicular to the screen and π/2 is flat on the surface.

The tilt angle can be retrieved using getAxisValue(AXIS_TILT) (there is no shortcut for the first pointer).

Tilt can be used to reproduce real-life tools as closely as possible, such as mimicking shading with a tilted pencil.
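One way to approximate pencil shading is to widen and lighten the mark as the stylus tilts toward the surface. The sketch below assumes a simple linear model; PencilMark, pencilMarkForTilt, and the numeric defaults are hypothetical and chosen only for illustration.

```kotlin
import kotlin.math.PI

// Hypothetical result type: how wide and how opaque the pencil mark should be.
data class PencilMark(val width: Float, val alpha: Float)

// Maps an AXIS_TILT reading (0 = perpendicular, PI/2 = flat) to a pencil mark.
fun pencilMarkForTilt(
    tilt: Float,
    baseWidth: Float = 2f,
    maxWidth: Float = 20f
): PencilMark {
    // Normalize tilt to 0..1, guarding against out-of-range readings.
    val t = (tilt / (PI / 2).toFloat()).coerceIn(0f, 1f)
    // A flatter pencil touches more of the surface: wider mark.
    val width = baseWidth + (maxWidth - baseWidth) * t
    // The same graphite spreads over a larger area: lighter mark.
    val alpha = 1f - 0.7f * t
    return PencilMark(width, alpha)
}
```

An upright stylus yields a thin, fully opaque line, while a stylus lying nearly flat yields a broad, faint band similar to shading with the side of a pencil lead.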

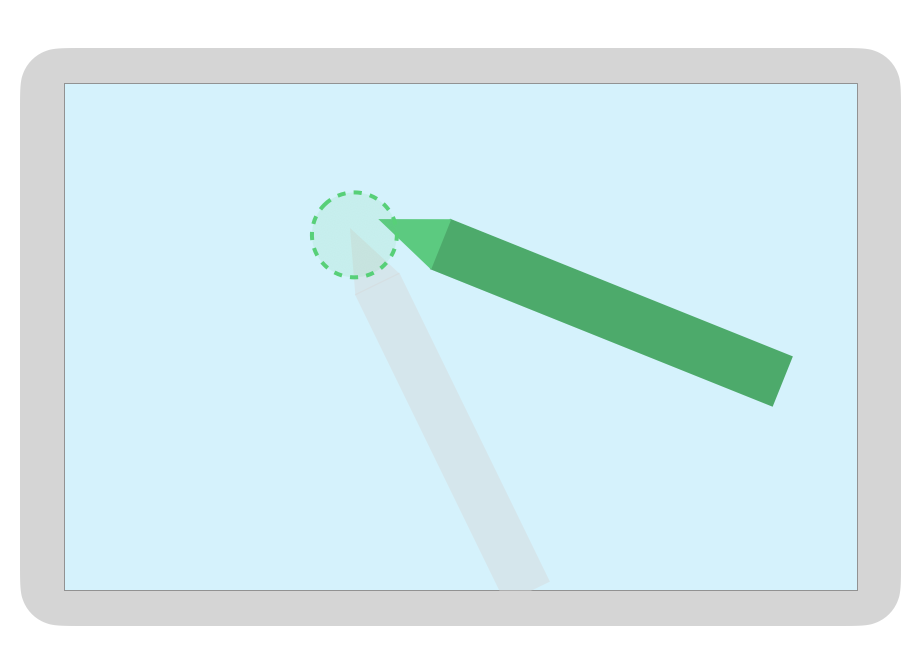

Hover

The distance of the stylus from the screen can be obtained with getAxisValue(AXIS_DISTANCE). The method returns a value from 0.0 (contact with the screen) to higher values as the stylus moves away from the screen. The hover distance between the screen and the nib (point) of the stylus depends on the manufacturer of both the screen and the stylus. Because implementations vary, don't rely on precise values for app-critical functionality.

Stylus hover can be used to preview the size of the brush or to indicate that a button is about to be selected.

Note: Compose provides modifiers that affect the interactive state of UI elements:

- hoverable: Configures the component to be hoverable using pointer enter and exit events.
- indication: Draws visual effects for the component when interactions occur.

Palm rejection, navigation, and unwanted inputs

Multi-touch screens can sometimes register unwanted touches, for example, when a user naturally rests their hand on the screen for support while handwriting. Palm rejection is a mechanism that detects this behavior and notifies you that the last MotionEvent set should be canceled.

As a result, you must keep a history of user inputs so that unwanted touches can be removed from the screen and the legitimate user inputs can be re-rendered.

ACTION_CANCEL and FLAG_CANCELED

ACTION_CANCEL and FLAG_CANCELED are both designed to inform you that the previous MotionEvent set should be canceled back to the last ACTION_DOWN, so that you can, for example, undo the last stroke of a given pointer in a drawing app.

ACTION_CANCEL

Added in Android 1.0 (API level 1)

ACTION_CANCEL indicates that the previous set of motion events should be canceled.

ACTION_CANCEL is triggered when any of the following is detected:

- Navigation gestures
- Palm rejection

When ACTION_CANCEL is triggered, identify the active pointer with getPointerId(getActionIndex()). Then remove the stroke created with that pointer from the input history and re-render the scene.

FLAG_CANCELED

Added in Android 13 (API level 33)

FLAG_CANCELED indicates that the pointer going up was an unintentional user touch. The flag is typically set when the user accidentally touches the screen, for example by gripping the device or resting the palm of the hand on the screen.

You can access the flag value as follows:

val cancel = (event.flags and FLAG_CANCELED) == FLAG_CANCELED

If the flag is set, you need to undo the last MotionEvent set, back to the last ACTION_DOWN from this pointer.

As with ACTION_CANCEL, the pointer can be found with getPointerId(actionIndex).

A MotionEvent set is created. The palm touch is canceled, and the display is re-rendered.
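The input history that cancellation relies on can be sketched as a small data structure keyed by pointer ID. This is an illustrative sketch in plain Kotlin: Stroke and StrokeHistory are hypothetical names, and the caller is assumed to translate ACTION_CANCEL and FLAG_CANCELED from the incoming MotionEvent into the cancel() call and the canceled argument.

```kotlin
// Hypothetical stroke record: the pointer that drew it and its points.
data class Stroke(
    val pointerId: Int,
    val points: MutableList<Pair<Float, Float>> = mutableListOf()
)

// Hypothetical input history keyed by pointer ID.
class StrokeHistory {
    private val active = mutableMapOf<Int, Stroke>()   // motion sets in progress
    private val committed = mutableListOf<Stroke>()    // finished strokes, oldest first

    fun down(pointerId: Int, x: Float, y: Float) {
        active[pointerId] = Stroke(pointerId, mutableListOf(x to y))
    }

    fun move(pointerId: Int, x: Float, y: Float) {
        active[pointerId]?.points?.add(x to y)
    }

    // Call when the pointer goes up; pass canceled = true if FLAG_CANCELED is set.
    fun up(pointerId: Int, canceled: Boolean = false) {
        val stroke = active.remove(pointerId) ?: return
        if (!canceled) committed.add(stroke)
    }

    // Call on ACTION_CANCEL: drop everything since this pointer's last down.
    fun cancel(pointerId: Int) {
        active.remove(pointerId)
    }

    // Everything that should be visible after the scene is re-rendered.
    fun strokesToRender(): List<Stroke> = committed + active.values
}
```

After a cancellation, redrawing from strokesToRender() removes the palm mark while keeping the legitimate strokes on screen.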

Full screen, edge-to-edge, and navigation gestures

If an app is full screen and has actionable elements near the edge, such as the canvas of a drawing or note-taking app, swiping from the bottom of the screen to display the navigation or to move the app to the background might result in an unwanted touch on the canvas.

To prevent gestures from triggering unwanted touches in your app, you can take advantage of insets and ACTION_CANCEL.

See also the Palm rejection, navigation, and unwanted inputs section.

Use the setSystemBarsBehavior() method and BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE of WindowInsetsController to prevent navigation gestures from causing unwanted touch events:

// Configure the behavior of the hidden system bars.
windowInsetsController.systemBarsBehavior =
    WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE

To learn more about inset and gesture management, see:

- Hide system bars for immersive mode
- Ensure compatibility with gesture navigation
- Display content edge-to-edge in your app

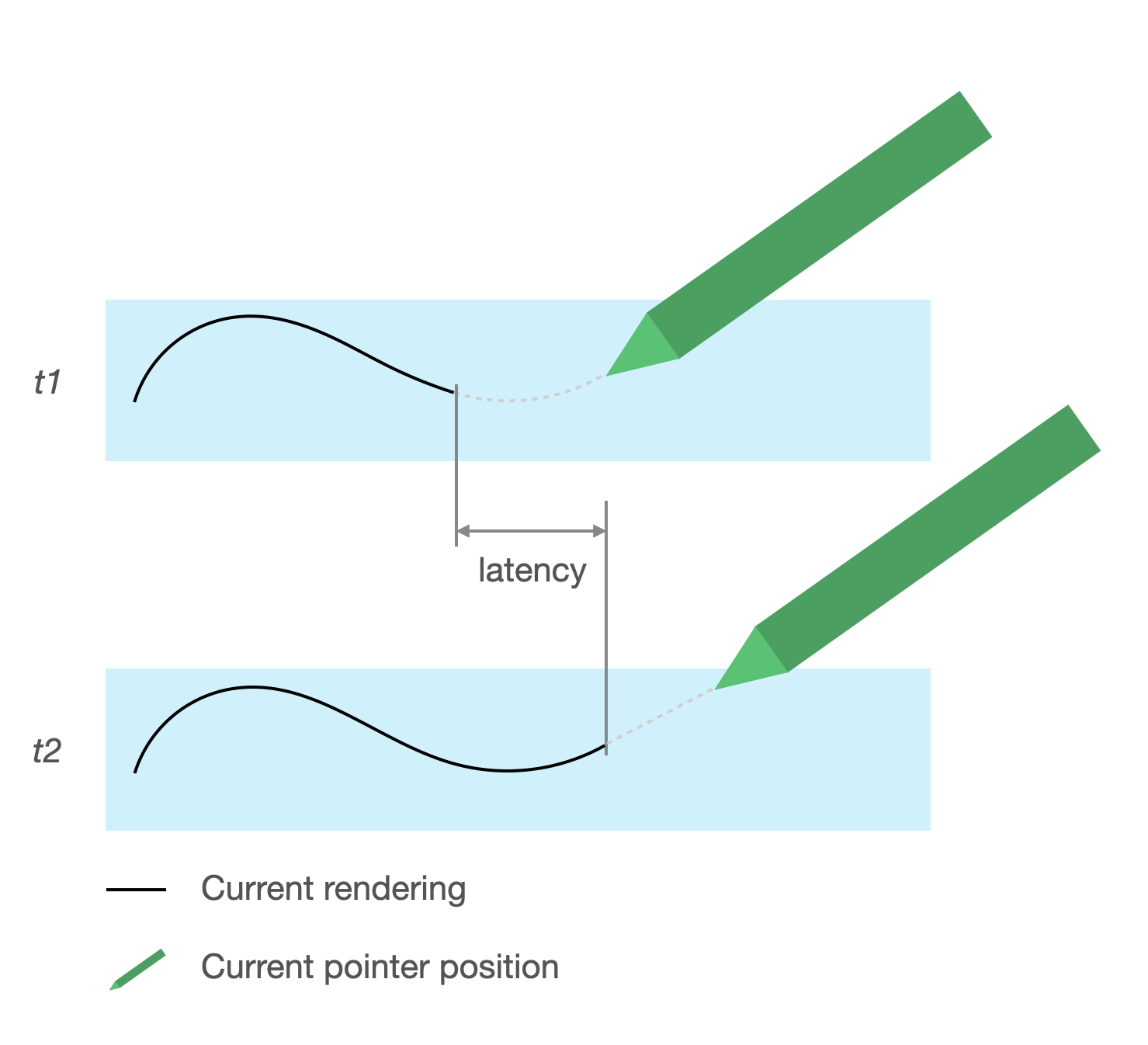

Low latency

Latency is the time required by the hardware, system, and application to process and render user input.

Latency = hardware and OS input processing + app processing + system compositing + hardware rendering

Source of latency

- Registering the stylus with the touchscreen (hardware): Initial wireless connection when the stylus and the OS communicate to be registered and synced.
- Touch sampling rate (hardware): The number of times per second a touchscreen checks whether a pointer is touching the surface, ranging from 60 to 1000 Hz.
- Input processing (app): Applying color, graphic effects, and transformation to user input.
- Graphic rendering (OS + hardware): Buffer swapping, hardware processing.
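To put the sampling rates in perspective, the arithmetic can be sketched as follows. The function names are hypothetical, and the latency terms are whatever values you measure for your own device and app; no real measurements are implied.

```kotlin
// Time between two consecutive touch samples, in milliseconds.
fun sampleIntervalMs(samplingRateHz: Int): Double {
    require(samplingRateHz > 0) { "Sampling rate must be positive" }
    return 1000.0 / samplingRateHz
}

// Sum of the four terms of the latency formula, all in milliseconds.
fun totalLatencyMs(
    inputProcessingMs: Double,
    appProcessingMs: Double,
    systemCompositingMs: Double,
    hardwareRenderingMs: Double
): Double =
    inputProcessingMs + appProcessingMs + systemCompositingMs + hardwareRenderingMs
```

At 60 Hz a new sample arrives roughly every 16.7 ms, while at 1000 Hz one arrives every 1 ms, so the sampling rate alone can account for a noticeable share of the total.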

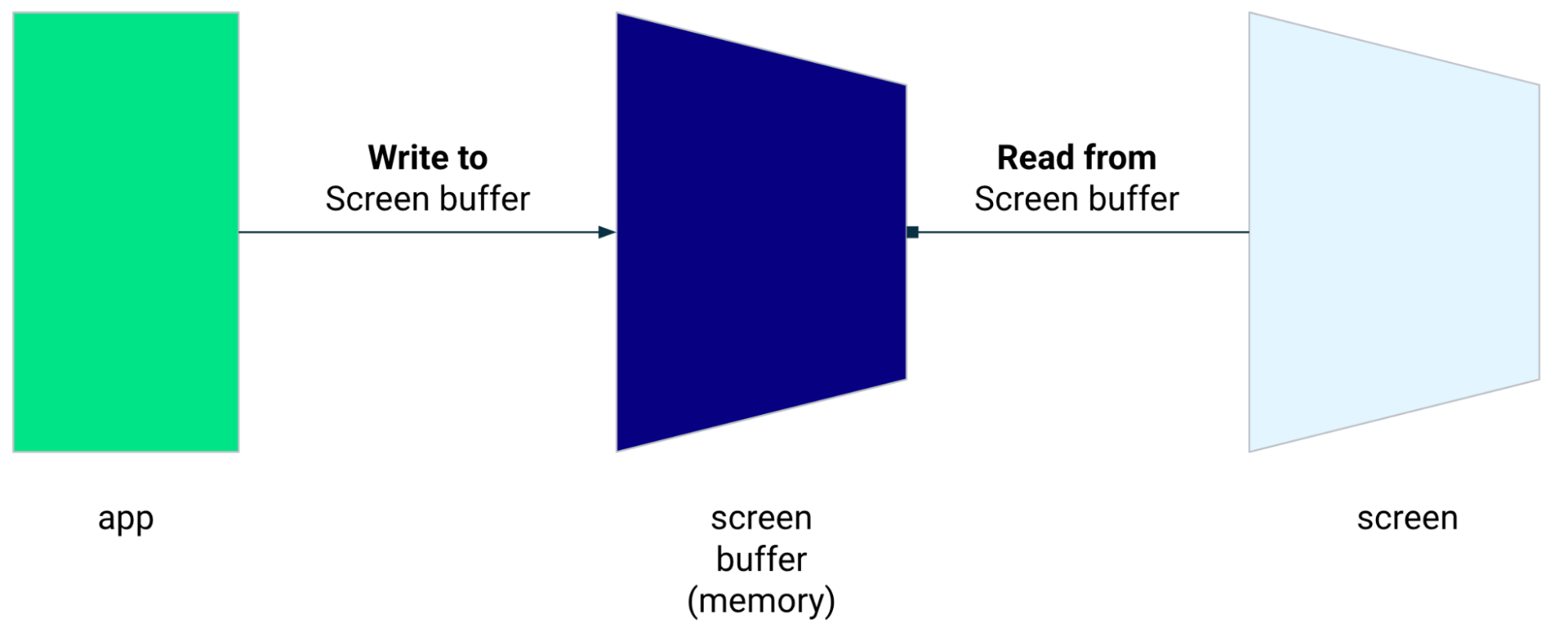

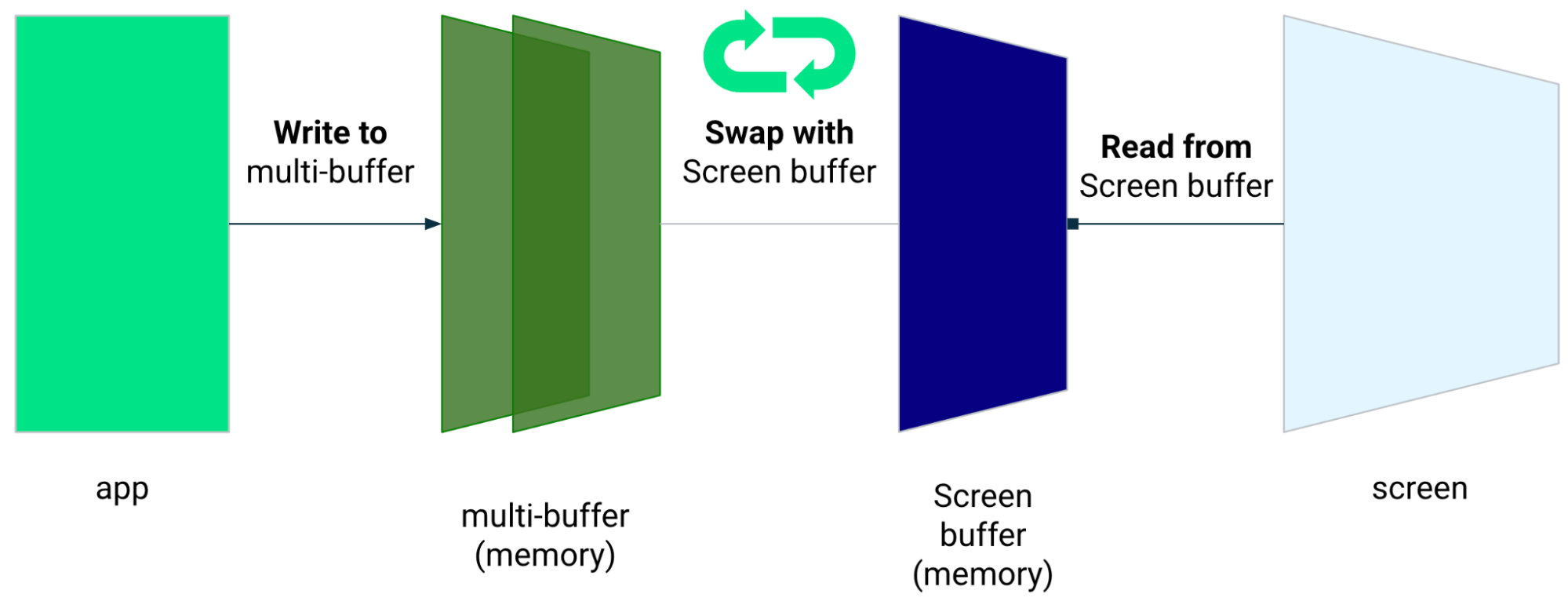

Low-latency graphics

The Jetpack low-latency graphics library reduces the processing time between user input and on-screen rendering.

The library reduces processing time by avoiding multi-buffer rendering and leveraging a front-buffer rendering technique, which means writing directly to the screen.

Front-buffer rendering

The front buffer is the memory the screen uses for rendering. It is the closest apps can get to drawing directly to the screen. The low-latency library enables apps to render directly to the front buffer. This improves performance by preventing buffer swapping, which can happen with regular multi-buffer rendering or double-buffer rendering (the most common case).

While front-buffer rendering is a great technique for rendering a small area of the screen, it is not designed for refreshing the entire screen. With front-buffer rendering, the app renders content into a buffer that the display is reading from. As a result, rendering artifacts or tearing are possible (see below).

The low-latency library is available on Android 10 (API level 29) and higher and on ChromeOS devices running Android 10 (API level 29) and higher.

Dependencies

The low-latency library provides the components for implementing front-buffer rendering. The library is added as a dependency in the app's module build.gradle file:

dependencies {
    implementation "androidx.graphics:graphics-core:1.0.0-alpha03"
}

GLFrontBufferedRenderer callbacks

The low-latency library includes the GLFrontBufferedRenderer.Callback interface, which defines the following methods:

- onDrawFrontBufferedLayer()
- onDrawDoubleBufferedLayer()

The low-latency library is not opinionated about the type of data you use with GLFrontBufferedRenderer.

However, the library processes the data as a stream of hundreds of data points, so design your data to optimize memory usage and allocation.

Callbacks

To enable rendering callbacks, implement GLFrontBufferedRenderer.Callback and override onDrawFrontBufferedLayer() and onDrawDoubleBufferedLayer(). GLFrontBufferedRenderer uses the callbacks to render your data in the most optimized way possible.

val callback = object: GLFrontBufferedRenderer.Callback<DATA_TYPE> {

    override fun onDrawFrontBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        param: DATA_TYPE
    ) {
        // OpenGL for front buffer, short, affecting small area of the screen.
    }

    override fun onDrawMultiDoubleBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        params: Collection<DATA_TYPE>
    ) {
        // OpenGL full scene rendering.
    }
}

Declare an instance of GLFrontBufferedRenderer

Prepare the GLFrontBufferedRenderer by providing the SurfaceView and the callbacks you created earlier. GLFrontBufferedRenderer optimizes rendering to the front and double buffers using your callbacks:

var glFrontBufferRenderer = GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callbacks)

Rendering

Front-buffer rendering starts when you call the renderFrontBufferedLayer() method, which triggers the onDrawFrontBufferedLayer() callback.

Double-buffer rendering resumes when you call the commit() function, which triggers the onDrawMultiDoubleBufferedLayer() callback.

In the following example, the process renders to the front buffer (fast rendering) when the user starts drawing on the screen (ACTION_DOWN) and moves the pointer around (ACTION_MOVE). The process renders to the double buffer when the pointer leaves the surface of the screen (ACTION_UP).

You can use requestUnbufferedDispatch() to ask the input system not to batch motion events but instead to deliver them as soon as they are available:

when (motionEvent.action) {
    MotionEvent.ACTION_DOWN -> {
        // Deliver input events as soon as they arrive.
        view.requestUnbufferedDispatch(motionEvent)
        // Pointer is in contact with the screen.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
    }
    MotionEvent.ACTION_MOVE -> {
        // Pointer is moving.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
    }
    MotionEvent.ACTION_UP -> {
        // Pointer is no longer in contact with the screen.
        glFrontBufferRenderer.commit()
    }
    MotionEvent.ACTION_CANCEL -> {
        // Cancel front buffer; remove last motion set from the screen.
        glFrontBufferRenderer.cancel()
    }
}

Rendering do's and don'ts

Do: Small portions of the screen, handwriting, drawing, sketching.

Don't: Full-screen updates, panning, zooming. These can result in tearing.

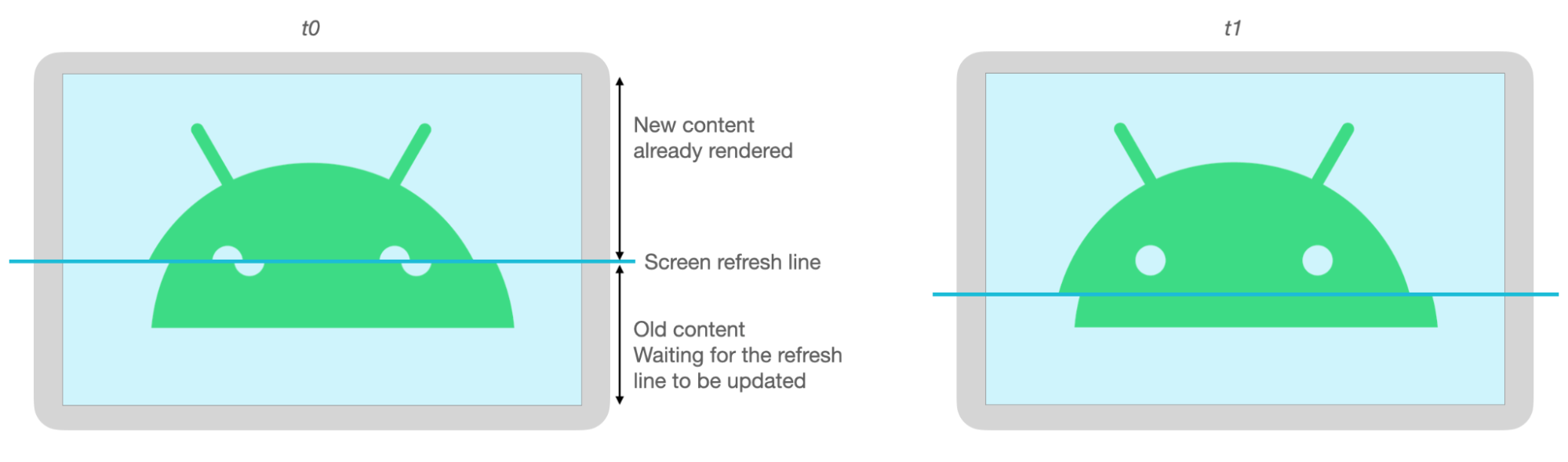

Tearing

Tearing happens when the screen refreshes while the screen buffer is being modified at the same time. One part of the screen shows new data while another shows old data.

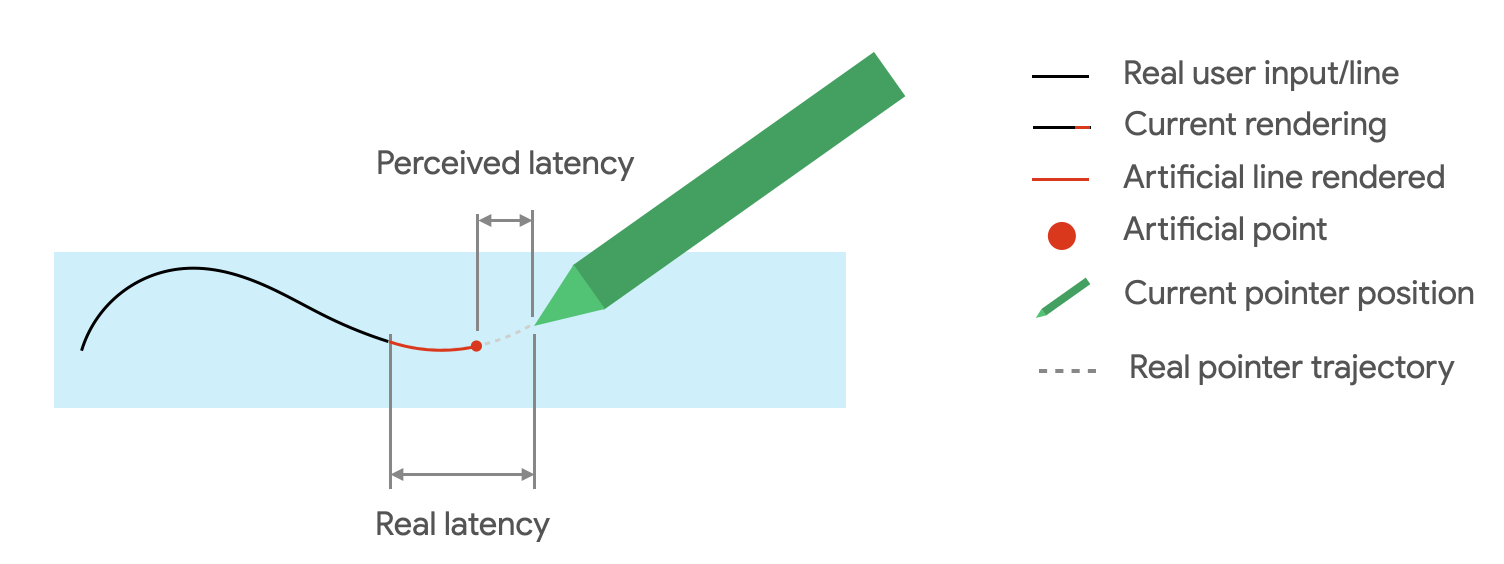

Motion prediction

The Jetpack motion prediction library reduces perceived latency by estimating the user's stroke path and providing temporary, artificial points to the renderer.

The motion prediction library receives real user inputs as MotionEvent objects. The objects contain information about x and y coordinates, pressure, and time, which the motion predictor uses to predict future MotionEvent objects.

Predicted MotionEvent objects are only estimates. Predicted events can reduce perceived latency, but predicted data must be replaced with actual MotionEvent data once it is received.

The motion prediction library is available on Android 4.4 (API level 19) and higher and on ChromeOS devices running Android 9 (API level 28) and higher.

Dependencies

The motion prediction library provides the implementation of prediction. The library is added as a dependency in the app's module build.gradle file:

dependencies {
    implementation "androidx.input:input-motionprediction:1.0.0-beta01"
}

Implementation

The motion prediction library includes the MotionEventPredictor interface, which defines the following methods:

- record(): Stores MotionEvent objects as a record of the user's actions
- predict(): Returns a predicted MotionEvent

Declare an instance of MotionEventPredictor

var motionEventPredictor = MotionEventPredictor.newInstance(view)

Feed the predictor with data

motionEventPredictor.record(motionEvent)

Predict

when (motionEvent.action) {
    MotionEvent.ACTION_MOVE -> {
        val predictedMotionEvent = motionEventPredictor?.predict()
        if (predictedMotionEvent != null) {
            // Use the predicted MotionEvent to inject a new artificial point.
        }
    }
}

Motion prediction do's and don'ts

Do: Remove prediction points when a new predicted point is added.

Don't: Use prediction points for final rendering.
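These two rules can be sketched as a small container that keeps real and predicted points apart. This is a minimal sketch in plain Kotlin: InkPoint and PredictedStroke are hypothetical names, and the caller is assumed to extract the coordinates from the real and predicted MotionEvent objects.

```kotlin
data class InkPoint(val x: Float, val y: Float)

// Hypothetical container that separates real input from temporary predictions.
class PredictedStroke {
    private val real = mutableListOf<InkPoint>()
    private var predicted: List<InkPoint> = emptyList()

    // A real sample arrived: record it and drop the now stale predictions.
    fun addReal(point: InkPoint) {
        real.add(point)
        predicted = emptyList()
    }

    // Replace, never append: only the newest prediction is kept.
    fun setPrediction(points: List<InkPoint>) {
        predicted = points.toList()
    }

    // Points for the in-progress, low-latency rendering.
    fun pointsForLiveRender(): List<InkPoint> = real + predicted

    // Points for the final rendering: real input only.
    fun pointsForFinalRender(): List<InkPoint> = real.toList()
}
```

Keeping predictions in a separate, replaceable list means they can never leak into the committed stroke.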

Note-taking apps

ChromeOS enables your app to declare some note-taking actions.

To register an app as a note-taking app on ChromeOS, see Input compatibility.

To register an app as a note-taking app on Android, see Create a note-taking app.

Android 14 (API level 34) introduced the ACTION_CREATE_NOTE intent, which enables your app to start a note-taking activity on the lock screen.

Digital ink recognition with ML Kit

With ML Kit digital ink recognition, your app can recognize handwritten text on a digital surface in hundreds of languages. You can also classify sketches.

ML Kit provides the Ink.Stroke.Builder class to create Ink objects that can be processed by machine learning models to convert handwriting to text.

In addition to handwriting recognition, the model can also recognize gestures.

See Digital ink recognition to learn more.