Android and ChromeOS provide a variety of APIs to help you build apps that offer users an exceptional stylus experience. The MotionEvent class exposes information about stylus interaction with the screen, including stylus pressure, orientation, tilt, hover, and palm detection. Low-latency graphics and motion prediction libraries improve on-screen rendering to provide a pen-and-paper-like experience.

MotionEvent

The MotionEvent class represents user input interactions such as the position and movement of touch pointers on the screen. For stylus input, MotionEvent also exposes pressure, orientation, tilt, and hover data.

Event data

To access MotionEvent data, set up an onTouchListener callback:

Kotlin

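val onTouchListener = View.OnTouchListener { view, event ->
    // Process motion event.
    true // Report the event as handled so the rest of the gesture is delivered.
}

Java

View.OnTouchListener listener = (view, event) -> {
    // Process motion event.
    return true; // Report the event as handled so the rest of the gesture is delivered.
};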

The listener receives MotionEvent objects from the system so that your app can process them.

A MotionEvent object provides data related to the following aspects of a UI event:

- Actions: Physical interaction with the device (touching the screen, moving a pointer across the screen surface, or hovering a pointer above it)
- Pointers: Identifiers of the objects interacting with the screen (finger, stylus, mouse)
- Axis: Type of data (x and y coordinates, pressure, tilt, orientation, and hover distance)

Actions

To implement stylus support, you need to understand what action the user is performing.

MotionEvent provides a wide variety of ACTION constants that define motion events. The most important actions for stylus input are the following:

| Action | Description |
|---|---|
| ACTION_DOWN, ACTION_POINTER_DOWN | The pointer has made contact with the screen. |
| ACTION_MOVE | The pointer is moving on the screen. |
| ACTION_UP, ACTION_POINTER_UP | The pointer is no longer in contact with the screen. |
| ACTION_CANCEL | Sent when the previous or current motion set should be canceled. |

Your app can perform tasks such as starting a new stroke when ACTION_DOWN occurs, drawing the stroke with ACTION_MOVE, and finishing the stroke when ACTION_UP is triggered.

The set of MotionEvent actions from ACTION_DOWN to ACTION_UP for a given pointer is called a motion set.
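
The stroke lifecycle described above can be sketched in plain Java, independent of the Android SDK. The `StrokeRecorder` class and its members are hypothetical names introduced for illustration, and the action constants are local stand-ins for the `MotionEvent` constants of the same name. This is a minimal sketch for a single pointer, not a complete input pipeline.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: groups the events of one motion set into a stroke.
class StrokeRecorder {
    // Local stand-ins for MotionEvent.ACTION_DOWN, ACTION_UP, ACTION_MOVE, ACTION_CANCEL.
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_CANCEL = 3;

    private List<float[]> current;                        // stroke in progress, or null
    final List<List<float[]>> finished = new ArrayList<>(); // completed strokes

    void onEvent(int action, float x, float y) {
        switch (action) {
            case ACTION_DOWN:   // start a new stroke
                current = new ArrayList<>();
                current.add(new float[] {x, y});
                break;
            case ACTION_MOVE:   // extend the stroke in progress
                if (current != null) current.add(new float[] {x, y});
                break;
            case ACTION_UP:     // finish the stroke and keep it
                if (current != null) {
                    current.add(new float[] {x, y});
                    finished.add(current);
                    current = null;
                }
                break;
            case ACTION_CANCEL: // drop the stroke in progress
                current = null;
                break;
            default:
                break;
        }
    }
}
```

In a real listener, the action would come from the event itself and the coordinates from its axis values.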

Pointers

Most screens are multi-touch: the system assigns a pointer to each finger, stylus, mouse, or other pointing object interacting with the screen. A pointer index lets you get axis information for a specific pointer, such as the position of the first or second finger touching the screen.

Pointer indexes range from zero to the number of pointers returned by MotionEvent#getPointerCount() minus 1.

Axis values of the pointers can be accessed with the getAxisValue(axis, pointerIndex) method. When the pointer index is omitted, the system returns the value for the first pointer, pointer zero (0).

MotionEvent objects contain information about the type of pointer in use. You can get the pointer type by iterating through the pointer indexes and calling the getToolType(pointerIndex) method.

To learn more about pointers, see Handle multi-touch gestures.

Stylus inputs

You can filter for stylus inputs with TOOL_TYPE_STYLUS:

Kotlin

val isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex)

Java

boolean isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex);

The stylus can also report that it is being used as an eraser with TOOL_TYPE_ERASER:

Kotlin

val isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex)

Java

boolean isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex);
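
One way to use these checks is to map each pointer's tool type to a drawing mode. The following plain-Java sketch assumes a hypothetical `ToolClassifier` class with a `Mode` enum; the tool-type constants are local stand-ins for the `MotionEvent` constants, and the choice to ignore fingers is only an example policy.

```java
// Hypothetical mapping from a pointer's tool type to what a drawing app does with it.
class ToolClassifier {
    // Local stand-ins for MotionEvent.TOOL_TYPE_FINGER, TOOL_TYPE_STYLUS, TOOL_TYPE_ERASER.
    static final int TOOL_TYPE_FINGER = 1;
    static final int TOOL_TYPE_STYLUS = 2;
    static final int TOOL_TYPE_ERASER = 4;

    enum Mode { DRAW, ERASE, IGNORE }

    static Mode modeFor(int toolType) {
        switch (toolType) {
            case TOOL_TYPE_STYLUS: return Mode.DRAW;
            case TOOL_TYPE_ERASER: return Mode.ERASE;
            default:               return Mode.IGNORE; // example policy: fingers do not draw
        }
    }

    // Classifies every pointer, mirroring a loop over getToolType(pointerIndex).
    static Mode[] modesFor(int[] toolTypes) {
        Mode[] modes = new Mode[toolTypes.length];
        for (int pointerIndex = 0; pointerIndex < toolTypes.length; pointerIndex++) {
            modes[pointerIndex] = modeFor(toolTypes[pointerIndex]);
        }
        return modes;
    }
}
```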

Stylus axis data

ACTION_DOWN and ACTION_MOVE provide axis data about the stylus, namely x and y coordinates, pressure, orientation, tilt, and hover.

To enable access to this data, the MotionEvent API provides getAxisValue(int), where the parameter is any of the following axis identifiers:

| Axis | Return value of getAxisValue() |
|---|---|
| AXIS_X | X coordinate of a motion event. |
| AXIS_Y | Y coordinate of a motion event. |
| AXIS_PRESSURE | For a touchscreen or touchpad, the pressure applied by a finger, stylus, or other pointer. For a mouse or trackball, 1 if the primary button is pressed, 0 otherwise. |
| AXIS_ORIENTATION | For a touchscreen or touchpad, the orientation of a finger, stylus, or other pointer relative to the vertical plane of the device. |
| AXIS_TILT | The tilt angle of the stylus in radians. |
| AXIS_DISTANCE | The distance of the stylus from the screen. |

For example, MotionEvent.getAxisValue(AXIS_X) returns the x coordinate of the first pointer.

See also Handle multi-touch gestures.

Position

You can retrieve the x and y coordinates of a pointer with the following calls:

- MotionEvent#getAxisValue(AXIS_X) or MotionEvent#getX()
- MotionEvent#getAxisValue(AXIS_Y) or MotionEvent#getY()

Pressure

You can retrieve the pointer pressure with MotionEvent#getAxisValue(AXIS_PRESSURE) or, for the first pointer, MotionEvent#getPressure().

The pressure value for touchscreens or touchpads is a value between 0 (no pressure) and 1, but higher values can be returned depending on the screen calibration.

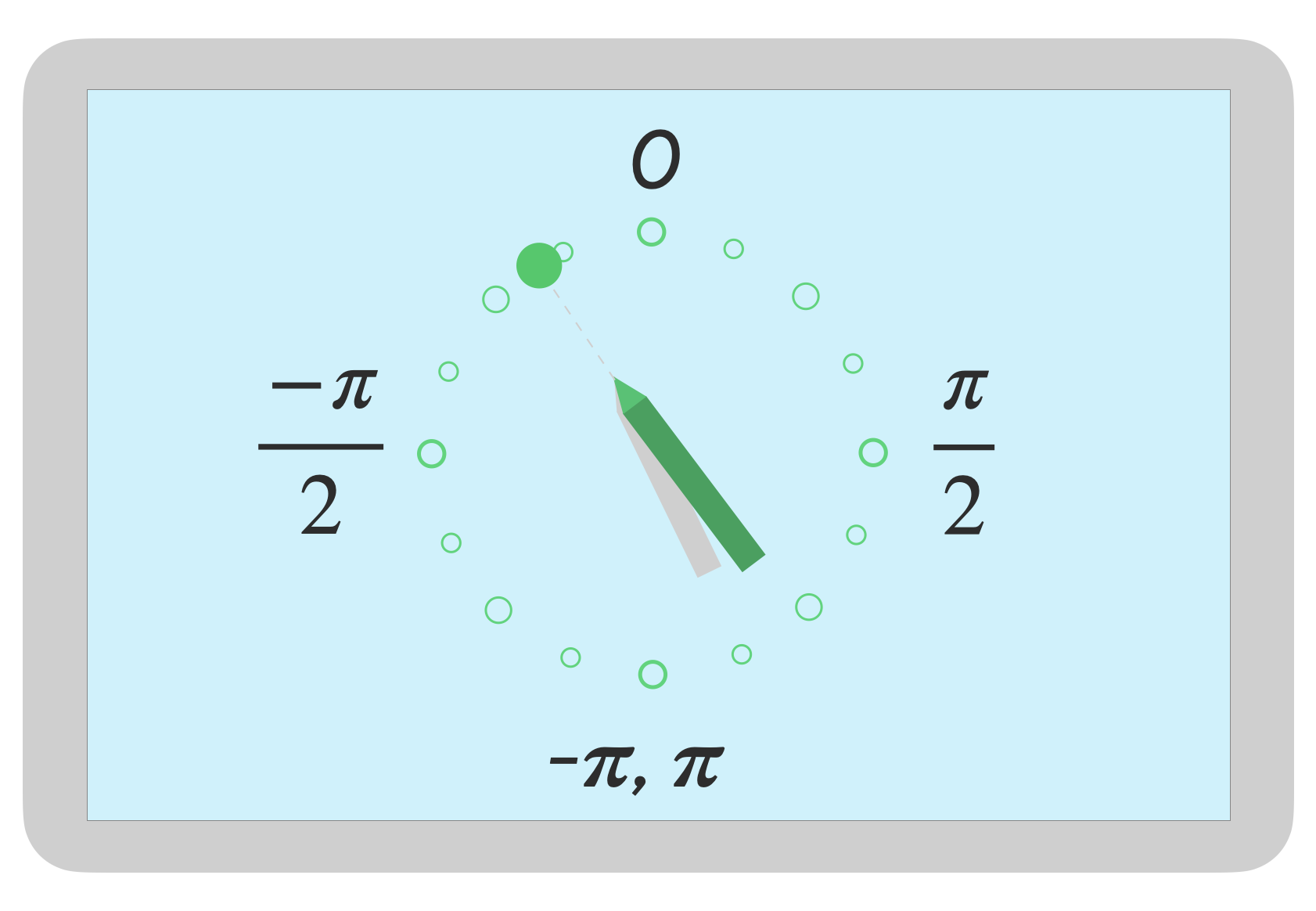
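
Because pressure can exceed 1 on some screens, it is safer to clamp the value before using it. The following plain-Java sketch maps pressure to a stroke width; `PressureMapper`, `strokeWidth`, and the width range are hypothetical names and values chosen for illustration, and the linear mapping is only one possible response curve.

```java
// Hypothetical helper: converts a raw pressure reading into a stroke width.
class PressureMapper {
    // Maps pressure to a width between minWidth and maxWidth (in pixels).
    static float strokeWidth(float pressure, float minWidth, float maxWidth) {
        // Clamp to [0, 1] because calibration can produce values above 1.
        float clamped = Math.max(0f, Math.min(1f, pressure));
        return minWidth + (maxWidth - minWidth) * clamped;
    }
}
```

For example, with a width range of 2 to 10 pixels, a pressure of 0.5 gives a 6-pixel stroke, and a pressure of 1.4 is treated as 1.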

Orientation

Orientation indicates which direction the stylus is pointing.

Pointer orientation can be retrieved using getAxisValue(AXIS_ORIENTATION) or getOrientation() (for the first pointer).

For a stylus, the orientation is returned as a radian value from 0 to pi (π) clockwise or from 0 to -pi counterclockwise.

Orientation enables you to implement a real-life brush. For example, if the stylus represents a flat brush, the width of the brush stroke depends on the stylus orientation.

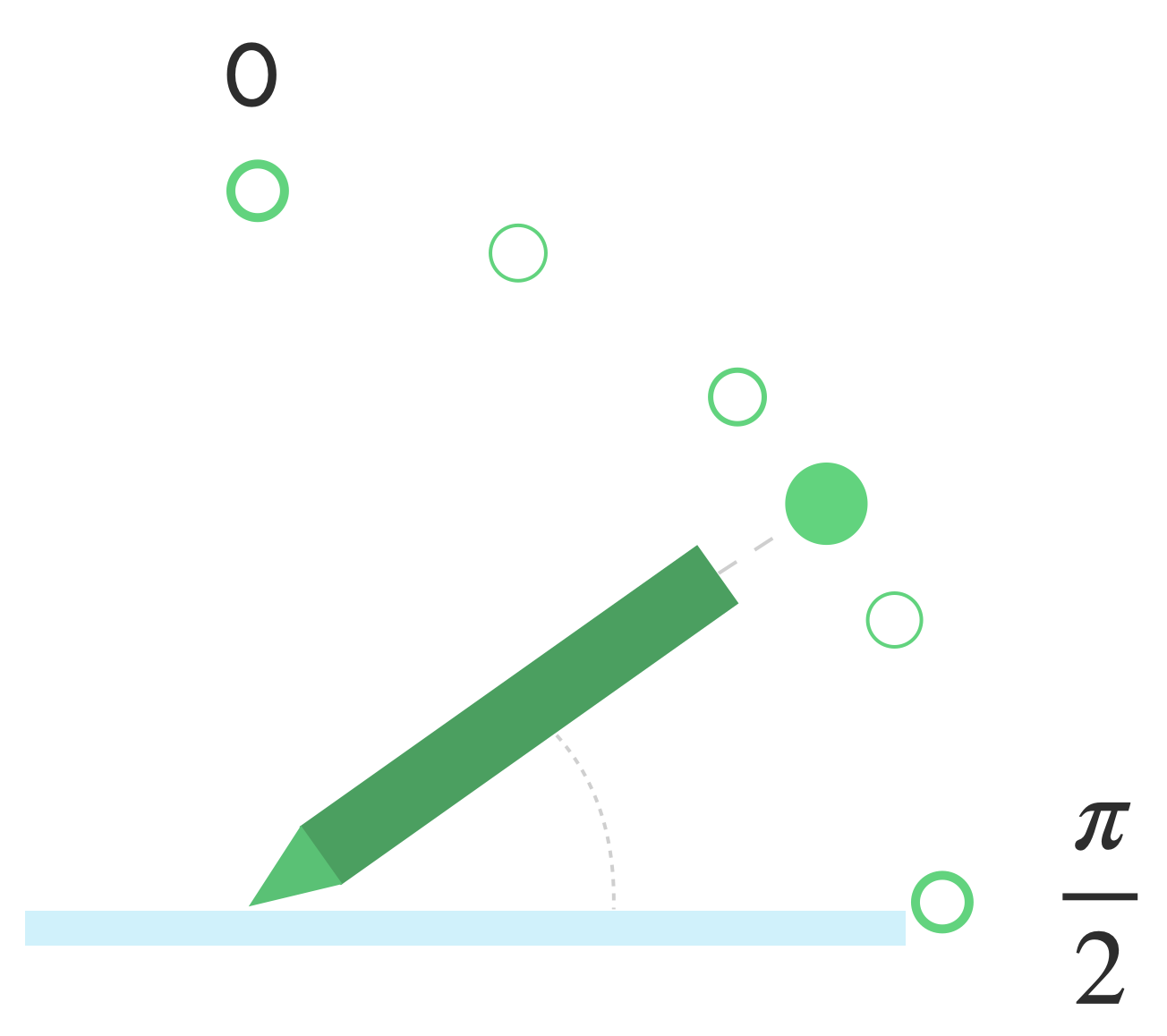
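
The flat-brush idea can be sketched as a small geometric model in plain Java. Everything here is an assumption made for illustration: the `FlatBrush` class, the `effectiveWidth` method, the premise that the brush edge is perpendicular to the direction the stylus points, and the premise that the stroke direction is expressed in the same angular convention as the orientation.

```java
// Hypothetical model of a flat brush whose mark depends on stylus orientation.
class FlatBrush {
    // orientation:     stylus orientation in radians (for example from AXIS_ORIENTATION)
    // strokeDirection: direction of travel in radians, same angular convention (assumed)
    // brushWidth:      length of the brush edge; brushThickness: thickness of the bristles
    static double effectiveWidth(double orientation, double strokeDirection,
                                 double brushWidth, double brushThickness) {
        // Assumption: the brush edge is perpendicular to where the stylus points, so
        // moving along the pointing direction sweeps the full edge across the canvas.
        double alignment = Math.abs(Math.cos(orientation - strokeDirection));
        return brushThickness + (brushWidth - brushThickness) * alignment;
    }
}
```

Under this model the mark is widest when the stylus travels along its pointing direction and thinnest when it travels sideways.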

Tilt

Tilt measures the inclination of the stylus relative to the screen.

Tilt returns the positive angle of the stylus in radians, where zero is perpendicular to the screen and π/2 is flat on the surface.

The tilt angle can be retrieved using getAxisValue(AXIS_TILT) (there is no shortcut for the first pointer).

Tilt can be used to reproduce real-life tools as closely as possible, such as mimicking shading with a tilted pencil.

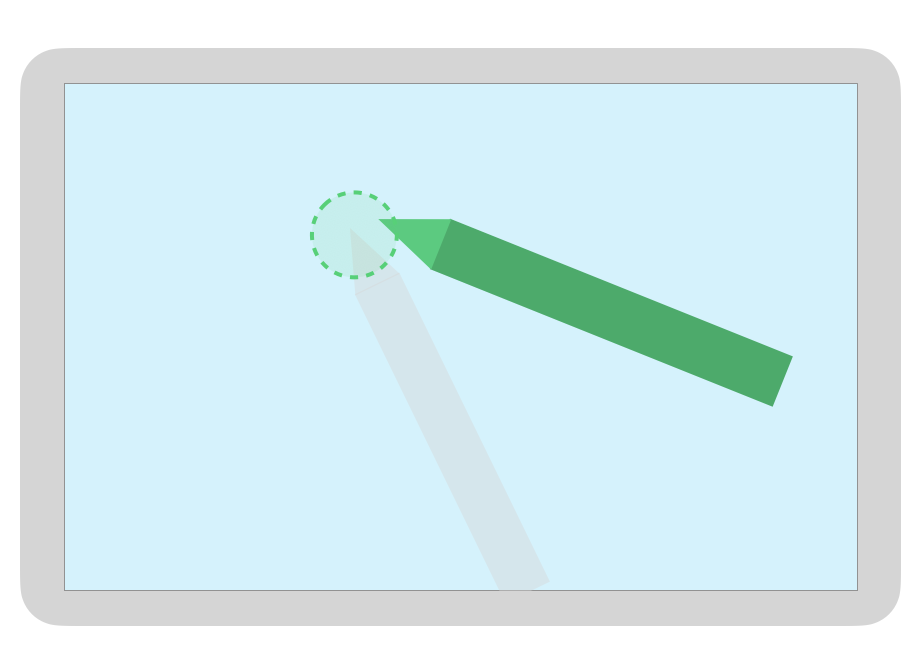
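
A pencil-shading effect can be approximated by widening and lightening the stroke as the stylus tilts. The following plain-Java sketch is illustrative only: `TiltShading`, its method names, and the 0.7 opacity reduction are hypothetical choices, not values taken from any library.

```java
// Hypothetical helper: derives pencil-shading parameters from the tilt angle.
class TiltShading {
    private static final double FLAT = Math.PI / 2; // stylus lying flat on the surface

    // 0.0 when the stylus is perpendicular to the screen, 1.0 when it lies flat.
    static double shadingFactor(double tiltRadians) {
        double clamped = Math.max(0.0, Math.min(FLAT, tiltRadians));
        return clamped / FLAT;
    }

    // Interpolates between the width of the tip and the width of the pencil side.
    static double strokeWidth(double tiltRadians, double tipWidth, double sideWidth) {
        return tipWidth + (sideWidth - tipWidth) * shadingFactor(tiltRadians);
    }

    // A tilted pencil leaves a lighter mark; 0.7 is an arbitrary illustrative reduction.
    static double opacity(double tiltRadians) {
        return 1.0 - 0.7 * shadingFactor(tiltRadians);
    }
}
```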

Hover

The distance of the stylus from the screen can be obtained with getAxisValue(AXIS_DISTANCE). The method returns a value from 0.0 (contact with the screen) to higher values as the stylus moves away from the screen. The hover distance between the screen and the nib of the stylus depends on the manufacturer of both the screen and the stylus. Because implementations can vary, don't rely on precise values for app-critical functionality.

Stylus hover can be used to preview the size of the brush or to indicate that a UI element is about to be selected.

Note: Compose provides modifiers that affect the interactive state of UI elements:

- hoverable: Configures the component to be hoverable using pointer enter and exit events.
- indication: Draws visual effects for this component when interactions occur.

Palm rejection, navigation, and unwanted inputs

Sometimes multi-touch screens can register unwanted touches, for example, when a user naturally rests their hand on the screen while handwriting. Palm rejection is a mechanism that detects this behavior and notifies you that the last MotionEvent set should be canceled.

As a result, you must keep a history of user inputs so that the unwanted touches can be removed from the screen and the legitimate user inputs can be re-rendered.

ACTION_CANCEL and FLAG_CANCELED

ACTION_CANCEL and FLAG_CANCELED are both designed to inform you that the previous MotionEvent set should be canceled, starting from the last ACTION_DOWN. This lets you, for example, undo the last stroke of a drawing app for a given pointer.

ACTION_CANCEL

Added in Android 1.0 (API level 1).

ACTION_CANCEL indicates that the previous set of motion events should be canceled.

ACTION_CANCEL is triggered when any of the following is detected:

- Navigation gestures
- Palm rejection

When ACTION_CANCEL is triggered, you should identify the active pointer with getPointerId(getActionIndex()). Then remove the stroke created with that pointer from the input history, and re-render the scene.
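
The input history mentioned above could be kept per pointer ID so that a canceled motion set can be removed. The following plain-Java sketch assumes a hypothetical `InputHistory` class; the method names and the point representation are illustrative, and the caller is expected to re-render when `cancelLatest` returns true.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical input history: strokes in drawing order, tagged with their pointer ID.
class InputHistory {
    static final class Stroke {
        final int pointerId;
        final List<float[]> points = new ArrayList<>();
        Stroke(int pointerId) { this.pointerId = pointerId; }
    }

    private final List<Stroke> strokes = new ArrayList<>();

    // Call when a pointer goes down: starts a new stroke for that pointer.
    void begin(int pointerId, float x, float y) {
        Stroke stroke = new Stroke(pointerId);
        stroke.points.add(new float[] {x, y});
        strokes.add(stroke);
    }

    // Call when a pointer moves: extends that pointer's most recent stroke.
    void extend(int pointerId, float x, float y) {
        Stroke stroke = latestFor(pointerId);
        if (stroke != null) stroke.points.add(new float[] {x, y});
    }

    // Call on cancellation: removes the most recent stroke of that pointer.
    // Returns true if something was removed and the scene must be re-rendered.
    boolean cancelLatest(int pointerId) {
        Stroke stroke = latestFor(pointerId);
        return stroke != null && strokes.remove(stroke);
    }

    int strokeCount() { return strokes.size(); }

    private Stroke latestFor(int pointerId) {
        for (int i = strokes.size() - 1; i >= 0; i--) {
            if (strokes.get(i).pointerId == pointerId) return strokes.get(i);
        }
        return null;
    }
}
```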

FLAG_CANCELED

Added in Android 13 (API level 33).

FLAG_CANCELED indicates that the pointer was an unintentional user touch. The flag is typically set when the user accidentally touches the screen, such as by gripping the device or placing the palm of the hand on the screen.

You can access the flag value as follows:

Kotlin

val cancel = (event.flags and FLAG_CANCELED) == FLAG_CANCELED

Java

boolean cancel = (event.getFlags() & FLAG_CANCELED) == FLAG_CANCELED;

If the flag is set, you need to undo the last MotionEvent set, starting from the last ACTION_DOWN from this pointer.

As with ACTION_CANCEL, the pointer can be identified with getPointerId(actionIndex).
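
Both cancellation signals can feed a single decision about whether to discard a stroke. The following plain-Java sketch uses a hypothetical `CancelCheck` class; the two constants are local stand-ins for `MotionEvent.ACTION_CANCEL` and `MotionEvent.FLAG_CANCELED`, and production code should reference the platform constants rather than literal values.

```java
// Hypothetical helper: decides whether the current motion set should be discarded.
class CancelCheck {
    // Local stand-ins for MotionEvent.ACTION_CANCEL and MotionEvent.FLAG_CANCELED.
    static final int ACTION_CANCEL = 3;
    static final int FLAG_CANCELED = 0x20;

    static boolean shouldDiscardStroke(int action, int flags) {
        // A bit mask test: other flag bits may be set at the same time.
        boolean flaggedAsCanceled = (flags & FLAG_CANCELED) == FLAG_CANCELED;
        return action == ACTION_CANCEL || flaggedAsCanceled;
    }
}
```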

A palm touch creates a MotionEvent set. The palm touch is canceled, and the display is re-rendered.

Full screen, edge-to-edge, and navigation gestures

If an app is full screen and has actionable elements near the edge, such as the canvas of a drawing or note-taking app, swiping from the bottom of the screen to display the navigation or to move the app to the background might result in an unwanted touch on the canvas.

To prevent gestures from triggering unwanted touches in your app, you can take advantage of insets and ACTION_CANCEL.

See also the Palm rejection, navigation, and unwanted inputs section.

Use the setSystemBarsBehavior() method and BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE of WindowInsetsController to prevent navigation gestures from causing unwanted touch events:

Kotlin

// Configure the behavior of the hidden system bars.
windowInsetsController.systemBarsBehavior =
    WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE

Java

// Configure the behavior of the hidden system bars.
windowInsetsController.setSystemBarsBehavior(
    WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE
);

To learn more about inset and gesture management, see:

- Hide system bars for immersive mode
- Ensure compatibility with gesture navigation
- Display content edge-to-edge in your app

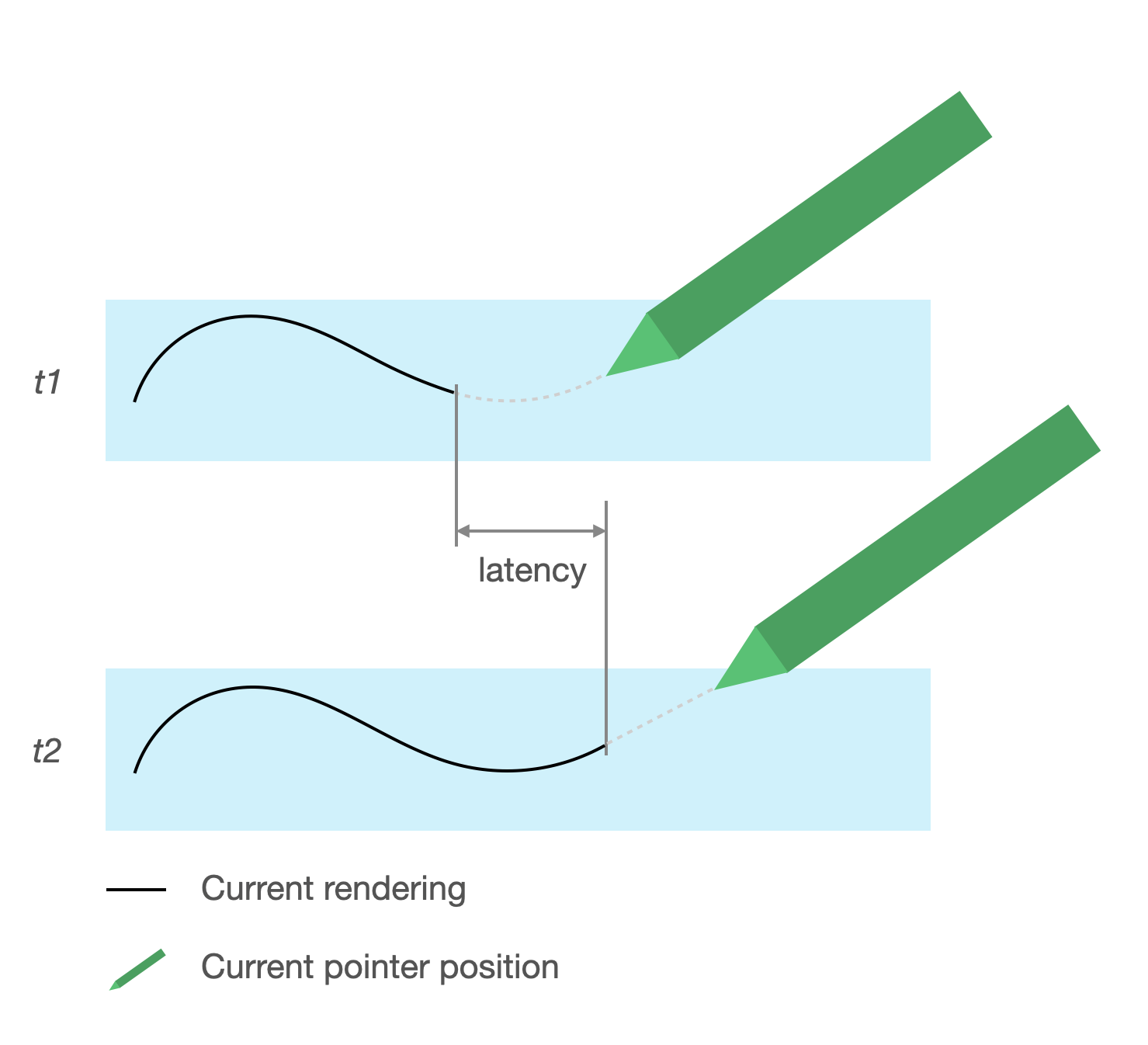

Low latency

Latency is the time required by the hardware, system, and application to process and render user input.

Latency = hardware and OS input processing + app processing + system compositing + hardware rendering

Source of latency

- Registering the stylus with the touchscreen (hardware): Initial wireless connection when the stylus and OS communicate to be registered and synced.
- Touch sampling rate (hardware): The number of times per second a touchscreen checks whether a pointer is touching the surface, ranging from 60 to 1000 Hz.
- Input processing (app): Applying color, graphic effects, and transformations to user input.
- Graphic rendering (OS + hardware): Buffer swapping, hardware processing.
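
To get a feel for the touch sampling rate, it helps to convert it to the interval between two samples, which bounds how long a touch can go unnoticed by the hardware. The `SamplingInterval` class below is a hypothetical name used only for this arithmetic.

```java
// Hypothetical helper: converts a touch sampling rate into the time between samples.
class SamplingInterval {
    // Returns the interval between two consecutive samples, in milliseconds.
    static double intervalMillis(double sampleRateHz) {
        if (sampleRateHz <= 0) {
            throw new IllegalArgumentException("sampleRateHz must be positive");
        }
        return 1000.0 / sampleRateHz;
    }
}
```

At 60 Hz the interval is about 16.7 ms, while at 1000 Hz it drops to 1 ms.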

Low-latency graphics

The Jetpack low-latency graphics library reduces the processing time between user input and on-screen rendering.

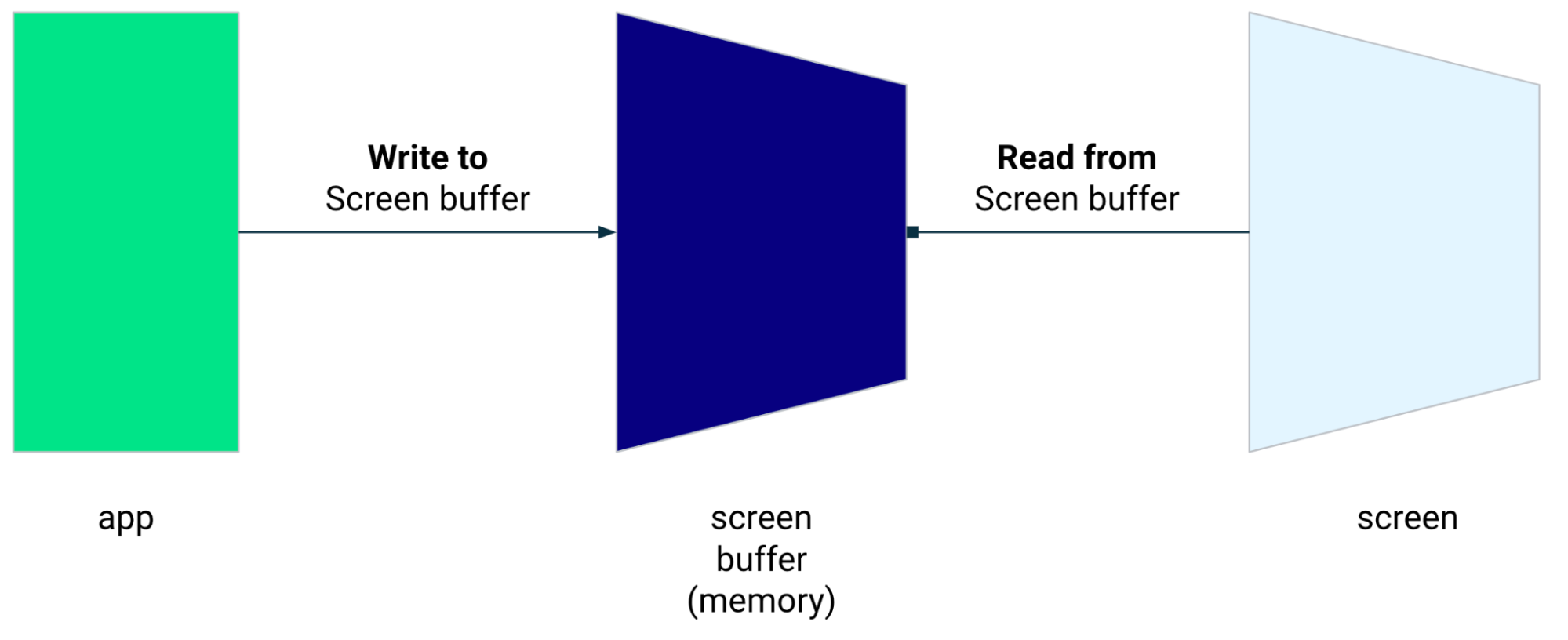

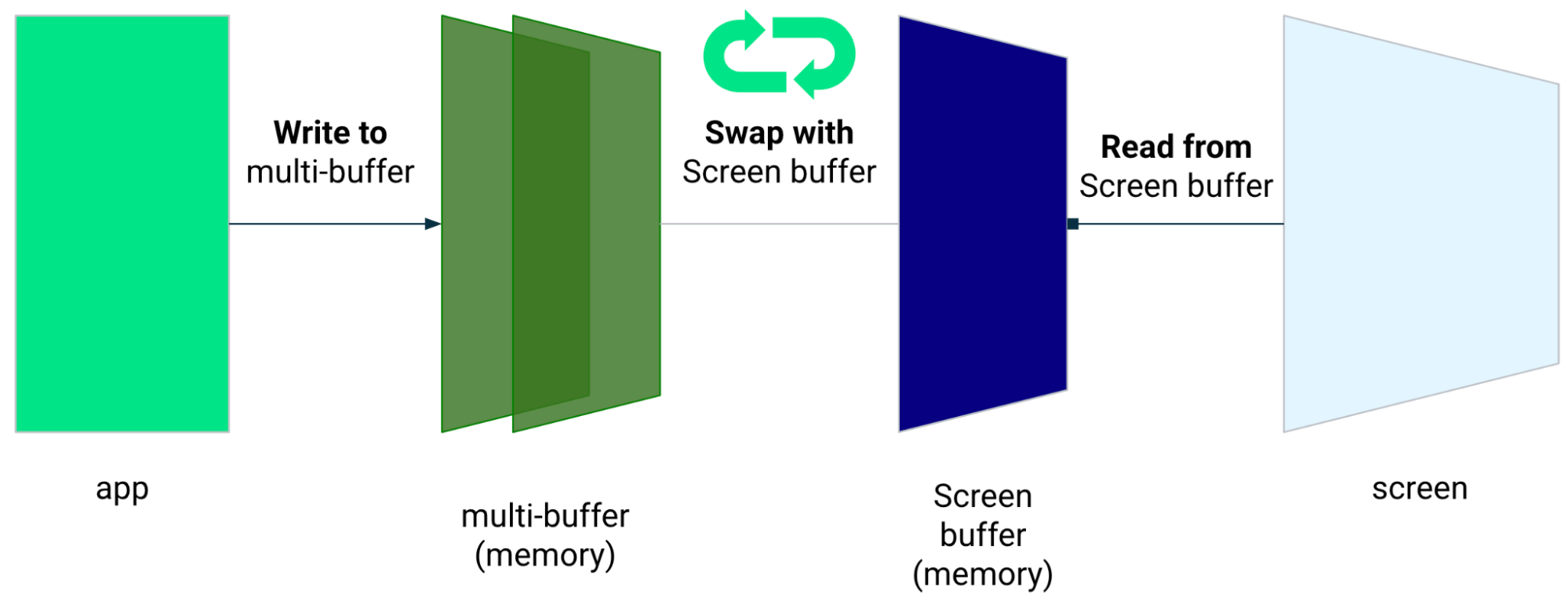

Elle réduit le temps de traitement en évitant le rendu multitampon et en exploitant une technique de rendu du tampon d'affichage, ce qui signifie écrire directement dans l'écran.

Rendu du tampon d'affichage

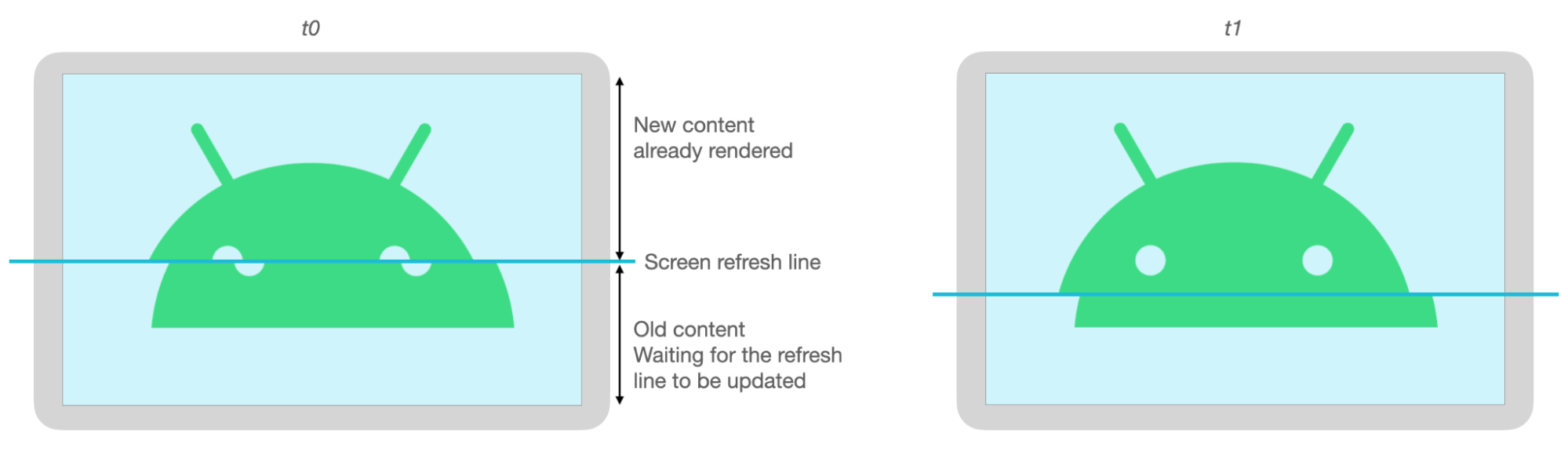

Le tampon d'affichage correspond à la mémoire utilisée par l'écran pour le rendu. Il s'agit de l'instance la plus proche les applications peuvent dessiner directement sur l'écran. La bibliothèque à faible latence permet d'effectuer un rendu directement dans le tampon d'affichage. Cela permet d'améliorer les performances empêchant le changement de tampon, ce qui peut se produire pour le rendu multitampon standard ou le rendu double tampon (cas le plus courant).

Le rendu du tampon d'affichage est une excellente technique pour afficher une petite zone à l'écran, elle n'est pas conçue pour actualiser l'intégralité de l'écran. Avec le rendu du tampon d'affichage : l'application affiche le contenu dans un tampon à partir duquel l'écran lit. Par conséquent, il est possible d'afficher ou déchirures (voir ci-dessous).

La bibliothèque à faible latence est disponible à partir d'Android 10 (niveau d'API 29) ou version ultérieure. et sur les appareils ChromeOS équipés d'Android 10 (niveau d'API 29) ou version ultérieure.

Dependencies

The low-latency library provides the components for a front-buffer rendering implementation. The library is added as a dependency in the app's module build.gradle file:

dependencies {

implementation "androidx.graphics:graphics-core:1.0.0-alpha03"

}

GLFrontBufferedRenderer callbacks

The low-latency library includes GLFrontBufferedRenderer.Callback, which defines the following methods:

- onDrawFrontBufferedLayer()
- onDrawDoubleBufferedLayer()

The low-latency library is not opinionated about the type of data you use with GLFrontBufferedRenderer.

However, the library processes the data as a stream of hundreds of data points, so design your data to optimize memory usage and allocation.
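
One way to keep allocations low when streaming hundreds of points is to store them in a single growable primitive array instead of one object per point. The following plain-Java sketch is only an illustration of that idea: `PointBuffer`, its layout of three floats per point, and its doubling growth policy are assumptions, not part of the low-latency library.

```java
import java.util.Arrays;

// Hypothetical point store: one flat float array, three values per point.
class PointBuffer {
    private static final int STRIDE = 3; // x, y, pressure
    private float[] data;
    private int size;                    // number of points stored

    PointBuffer(int initialCapacity) {
        data = new float[Math.max(1, initialCapacity) * STRIDE];
    }

    void add(float x, float y, float pressure) {
        if ((size + 1) * STRIDE > data.length) {
            data = Arrays.copyOf(data, data.length * 2); // grow rarely, in large steps
        }
        int base = size * STRIDE;
        data[base] = x;
        data[base + 1] = y;
        data[base + 2] = pressure;
        size++;
    }

    int size() { return size; }
    float x(int index) { return data[index * STRIDE]; }
    float y(int index) { return data[index * STRIDE + 1]; }
    float pressure(int index) { return data[index * STRIDE + 2]; }

    // Reuses the same array for the next stroke instead of allocating a new one.
    void clear() { size = 0; }
}
```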

Callbacks

To enable rendering callbacks, implement GLFrontBufferedRenderer.Callback and override onDrawFrontBufferedLayer() and onDrawDoubleBufferedLayer(). GLFrontBufferedRenderer uses the callbacks to render your data in the most optimized way possible.

Kotlin

val callbacks = object : GLFrontBufferedRenderer.Callback<DATA_TYPE> {
    override fun onDrawFrontBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        param: DATA_TYPE
    ) {
        // OpenGL for front buffer, short, affecting small area of the screen.
    }

    override fun onDrawDoubleBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        params: Collection<DATA_TYPE>
    ) {
        // OpenGL full scene rendering.
    }
}

Java

GLFrontBufferedRenderer.Callback<DATA_TYPE> callbacks =
    new GLFrontBufferedRenderer.Callback<DATA_TYPE>() {
        @Override
        public void onDrawFrontBufferedLayer(@NonNull EGLManager eglManager,
            @NonNull BufferInfo bufferInfo,
            @NonNull float[] transform,
            DATA_TYPE data_type) {
            // OpenGL for front buffer, short, affecting small area of the screen.
        }

        @Override
        public void onDrawDoubleBufferedLayer(@NonNull EGLManager eglManager,
            @NonNull BufferInfo bufferInfo,
            @NonNull float[] transform,
            @NonNull Collection<? extends DATA_TYPE> collection) {
            // OpenGL full scene rendering.
        }
    };

Declare an instance of GLFrontBufferedRenderer

Prepare the GLFrontBufferedRenderer by providing the SurfaceView and callbacks you created earlier. GLFrontBufferedRenderer optimizes rendering to the front buffer and the double buffer using your callbacks:

Kotlin

var glFrontBufferRenderer = GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callbacks)

Java

GLFrontBufferedRenderer<DATA_TYPE> glFrontBufferRenderer = new GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callbacks);

Rendering

Front-buffer rendering starts when you call the renderFrontBufferedLayer() method, which triggers the onDrawFrontBufferedLayer() callback.

Double-buffer rendering resumes when you call the commit() method, which triggers the onDrawDoubleBufferedLayer() callback.

In the following example, the process renders to the front buffer (fast rendering) when the user starts drawing on the screen (ACTION_DOWN) and moves the pointer around (ACTION_MOVE). The process renders to the double buffer when the pointer leaves the surface of the screen (ACTION_UP).

You can use requestUnbufferedDispatch() to ask the input system not to batch motion events but to deliver them as soon as they're available:

Kotlin

when (motionEvent.action) {
    MotionEvent.ACTION_DOWN -> {
        // Deliver input events as soon as they arrive.
        view.requestUnbufferedDispatch(motionEvent)
        // Pointer is in contact with the screen.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
    }
    MotionEvent.ACTION_MOVE -> {
        // Pointer is moving.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
    }
    MotionEvent.ACTION_UP -> {
        // Pointer is no longer in contact with the screen.
        glFrontBufferRenderer.commit()
    }
    MotionEvent.ACTION_CANCEL -> {
        // Cancel front buffer; remove last motion set from the screen.
        glFrontBufferRenderer.cancel()
    }
}

Java

switch (motionEvent.getAction()) {
    case MotionEvent.ACTION_DOWN: {
        // Deliver input events as soon as they arrive.
        surfaceView.requestUnbufferedDispatch(motionEvent);
        // Pointer is in contact with the screen.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE);
    }
    break;
    case MotionEvent.ACTION_MOVE: {
        // Pointer is moving.
        glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE);
    }
    break;
    case MotionEvent.ACTION_UP: {
        // Pointer is no longer in contact with the screen.
        glFrontBufferRenderer.commit();
    }
    break;
    case MotionEvent.ACTION_CANCEL: {
        // Cancel front buffer; remove last motion set from the screen.
        glFrontBufferRenderer.cancel();
    }
    break;
}
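
The call flow between the render methods and the callbacks can be illustrated with a toy model in plain Java. This is not the library's implementation: `ToyFrontBufferedRenderer`, its `Callback` interface, and the method names are hypothetical, there is no OpenGL or real buffering involved, and the assumption that a commit hands over every parameter rendered since the previous commit is made here only for illustration.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;

// Toy model of the call flow only: no OpenGL, no real buffers.
class ToyFrontBufferedRenderer<T> {
    interface Callback<D> {
        void onDrawFront(D param);               // fast path, one data point at a time
        void onDrawDouble(Collection<D> params); // full redraw with the accumulated data
    }

    private final Callback<T> callback;
    private final List<T> pending = new ArrayList<>();

    ToyFrontBufferedRenderer(Callback<T> callback) {
        this.callback = callback;
    }

    // Called on pointer down and move: draw the new data immediately.
    void renderFront(T param) {
        pending.add(param);
        callback.onDrawFront(param);
    }

    // Called on pointer up: redraw everything collected since the last commit.
    void commit() {
        callback.onDrawDouble(new ArrayList<>(pending));
        pending.clear();
    }

    // Called on cancel: forget the data of the canceled motion set.
    void cancel() {
        pending.clear();
    }
}
```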

Rendering do's and don'ts

Do: Small portions of the screen, handwriting, drawing, sketching.

Don't: Full-screen updates, panning, zooming. These can result in tearing.

Tearing

Tearing happens when the screen refreshes while the screen buffer is being modified at the same time. One part of the screen shows new data, while another shows old data.

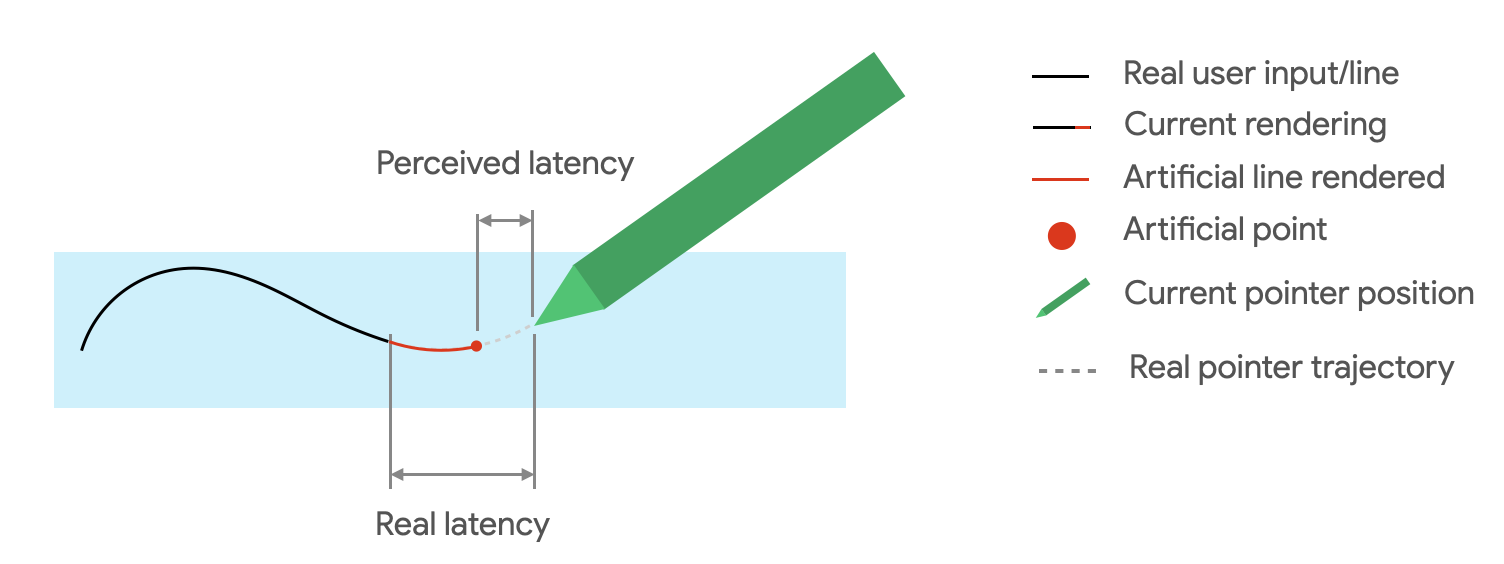

Motion prediction

The Jetpack motion prediction library reduces perceived latency by estimating the user's stroke path and providing temporary, artificial points to the renderer.

The motion prediction library receives real user inputs as MotionEvent objects. The objects contain information about x and y coordinates, pressure, and time, which the motion predictor uses to predict future MotionEvent objects.

Predicted MotionEvent objects are only estimates. Predicted events can reduce perceived latency, but predicted data must be replaced with actual MotionEvent data once it is received.
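
To illustrate what "estimating the stroke path" can mean, the following plain-Java sketch extrapolates the next position from the last two samples at constant velocity. This is a deliberately simple stand-in and makes no claim about the algorithm the Jetpack library uses; `LinearPredictor` and its method signatures are hypothetical.

```java
// Hypothetical predictor: constant-velocity extrapolation from the last two samples.
class LinearPredictor {
    private float prevX, prevY, lastX, lastY;
    private long prevTime, lastTime;
    private int samples;

    // Records a real input sample (position and event time in milliseconds).
    void record(float x, float y, long timeMillis) {
        prevX = lastX;
        prevY = lastY;
        prevTime = lastTime;
        lastX = x;
        lastY = y;
        lastTime = timeMillis;
        samples++;
    }

    // Returns {x, y} estimated aheadMillis after the last sample,
    // or null when there is not enough data to estimate a velocity.
    float[] predict(long aheadMillis) {
        if (samples < 2 || lastTime == prevTime) return null;
        float dt = lastTime - prevTime;
        float vx = (lastX - prevX) / dt;
        float vy = (lastY - prevY) / dt;
        return new float[] {lastX + vx * aheadMillis, lastY + vy * aheadMillis};
    }
}
```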

The motion prediction library is available from Android 4.4 (API level 19) and higher and on ChromeOS devices running Android 9 (API level 28) and higher.

Dependencies

The motion prediction library provides the implementation of prediction. The library is added as a dependency in the app's module build.gradle file:

dependencies {

implementation "androidx.input:input-motionprediction:1.0.0-beta01"

}

Implementation

The motion prediction library includes MotionEventPredictor, which defines the following methods:

- record(): Stores MotionEvent objects as a record of the user's actions.
- predict(): Returns a predicted MotionEvent.

Declare an instance of MotionEventPredictor

Kotlin

var motionEventPredictor = MotionEventPredictor.newInstance(view)

Java

MotionEventPredictor motionEventPredictor = MotionEventPredictor.newInstance(surfaceView);

Feed the predictor with data

Kotlin

motionEventPredictor.record(motionEvent)

Java

motionEventPredictor.record(motionEvent);

Predict

Kotlin

when (motionEvent.action) {
    MotionEvent.ACTION_MOVE -> {
        val predictedMotionEvent = motionEventPredictor.predict()
        if (predictedMotionEvent != null) {
            // Use predicted MotionEvent to inject a new artificial point.
        }
    }
}

Java

switch (motionEvent.getAction()) {
    case MotionEvent.ACTION_MOVE: {
        MotionEvent predictedMotionEvent = motionEventPredictor.predict();
        if (predictedMotionEvent != null) {
            // Use predicted MotionEvent to inject a new artificial point.
        }
    }
    break;
}

Motion prediction do's and don'ts

Do: Remove prediction points when a new predicted point is added.

Don't: Use prediction points for final rendering.
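
These two rules can be enforced by keeping predicted points separate from real ones. The following plain-Java sketch assumes a hypothetical `PredictedStroke` class that holds at most one transient predicted point; the names and the single-point policy are illustrative choices.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stroke model: real samples are kept, the predicted point is transient.
class PredictedStroke {
    private final List<float[]> real = new ArrayList<>();
    private float[] predicted; // at most one estimate, never stored in 'real'

    // A new real sample invalidates the previous estimate.
    void addReal(float x, float y) {
        predicted = null;
        real.add(new float[] {x, y});
    }

    // Setting a new estimate replaces the previous one.
    void setPredicted(float x, float y) {
        predicted = new float[] {x, y};
    }

    // Points to draw while the stroke is in progress: real samples plus the estimate.
    List<float[]> pointsForPreview() {
        List<float[]> points = new ArrayList<>(real);
        if (predicted != null) points.add(predicted);
        return points;
    }

    // Points to keep once the stroke ends: real samples only.
    List<float[]> pointsForFinalRender() {
        return new ArrayList<>(real);
    }
}
```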

Note-taking apps

ChromeOS enables your app to declare some note-taking actions.

To register an app as a note-taking app on ChromeOS, see Input compatibility.

To register an app as a note-taking app on Android, see Create a note-taking app.

Android 14 (API level 34) introduced ACTION_CREATE_NOTE, which enables your app to start a note-taking activity on the lock screen.

Digital ink recognition with ML Kit

With ML Kit digital ink recognition, your app can recognize handwritten text on a digital surface in hundreds of languages. You can also classify sketches.

ML Kit provides Ink.Stroke.Builder to create Ink objects that can be processed by machine learning models to convert handwriting to text.

In addition to handwriting recognition, the model is able to recognize gestures, such as delete and circle.

See Digital ink recognition to learn more.