Google Assistant lets you use voice commands to control many devices, such as Google Home, your phone, and more. It has a built-in capability to understand media commands ("play something by Beyoncé") and supports media controls (such as pause, skip, fast-forward, and thumbs up).

Assistant communicates with Android media apps using a media session. It can use intents or services to launch your app and start playback. For the best results, your app should implement all the features described on this page.

Use a media session

Every audio and video app must implement a media session so that Assistant can operate the transport controls once playback has started.

Note: Although Assistant only uses the actions listed in this section, the best practice is to implement all of the preparation and playback APIs to ensure compatibility with other applications. For any actions that you don't support, the media session callbacks can simply return an error using ERROR_CODE_NOT_SUPPORTED.

Enable media and transport controls by setting these flags in your app's MediaSession object:

Kotlin

    session.setFlags(
        MediaSessionCompat.FLAG_HANDLES_MEDIA_BUTTONS or
            MediaSessionCompat.FLAG_HANDLES_TRANSPORT_CONTROLS
    )

Java

    session.setFlags(MediaSessionCompat.FLAG_HANDLES_MEDIA_BUTTONS
        | MediaSessionCompat.FLAG_HANDLES_TRANSPORT_CONTROLS);

Your app's media session must declare the actions it supports and implement the corresponding media session callbacks. Declare your supported actions in setActions().

The Universal Android Music Player sample project is a good example of how to set up a media session.
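To make the idea of declaring supported actions more concrete, here is a minimal plain-Java sketch of how an action bitmask can be composed and queried. The class SupportedActions, its methods, and the bit positions are hypothetical stand-ins defined locally so the snippet runs without the Android SDK; in an app you would combine the real PlaybackStateCompat.ACTION_* constants and pass the result to setActions().

```java
// Stand-alone model of an action bitmask. The bit positions below are
// illustrative local values, NOT the values used by the Android SDK.
final class SupportedActions {
    static final long ACTION_PLAY = 1L << 0;
    static final long ACTION_PAUSE = 1L << 1;
    static final long ACTION_PLAY_FROM_SEARCH = 1L << 2;
    static final long ACTION_PREPARE = 1L << 3;
    static final long ACTION_SEEK_TO = 1L << 4;

    private SupportedActions() {}

    /** Combines individual action bits into a single mask. */
    static long declare(long... actions) {
        long mask = 0L;
        for (long action : actions) {
            mask |= action;
        }
        return mask;
    }

    /** Returns true when every bit of the given action is present in the mask. */
    static boolean supports(long mask, long action) {
        return (mask & action) == action;
    }
}
```

A callback for an action whose bit is not in the mask is the natural place to report ERROR_CODE_NOT_SUPPORTED.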

Playback actions

To start playback from a service, a media session must have these PLAY actions and their callbacks:

| Action | Callback |
|---|---|
| ACTION_PLAY | onPlay() |
| ACTION_PLAY_FROM_SEARCH | onPlayFromSearch() |
| ACTION_PLAY_FROM_URI (*) | onPlayFromUri() |

Your session should also implement these PREPARE actions and their callbacks:

| Action | Callback |
|---|---|
| ACTION_PREPARE | onPrepare() |
| ACTION_PREPARE_FROM_SEARCH | onPrepareFromSearch() |
| ACTION_PREPARE_FROM_URI (*) | onPrepareFromUri() |

(*) Google Assistant's URI-based actions only work for companies that provide URIs to Google. To learn more about describing your media content to Google, see Media Actions.

Implementing the preparation APIs can reduce playback latency after a voice command. Media apps that want to improve playback latency can use the extra time to start caching content and preparing media playback.

Parse search queries

When a user searches for a specific media item, such as "Play jazz on [your app name]" or "Listen to [song title]", the onPrepareFromSearch() or onPlayFromSearch() callback method receives a query parameter and an extras bundle.

Your app should parse the voice search query and start playback by following these steps:

- Use the extras bundle and search query string returned from the voice search to filter results.
- Build a playback queue based on these results.
- Play the most relevant media item from the results.

The onPlayFromSearch() method takes an extras parameter with more detailed information from the voice search. These extras help you find the audio content in your app for playback. If the search results can't provide this data, you can implement logic to parse the raw search query and play the appropriate tracks based on the query.

Extras are supported in Android Automotive OS and Android Auto; they include the MediaStore keys EXTRA_MEDIA_FOCUS, EXTRA_MEDIA_ARTIST, and EXTRA_MEDIA_ALBUM.

The following code snippet shows how to override the onPlayFromSearch() method in your MediaSession.Callback implementation to parse the voice search query and start playback:

Kotlin

    // isArtistFocus, isAlbumFocus, artist, and album are assumed to be
    // properties of the enclosing callback class (declarations not shown).
    override fun onPlayFromSearch(query: String?, extras: Bundle?) {
        if (query.isNullOrEmpty()) {
            // The user provided generic string e.g. 'Play music'
            // Build appropriate playlist queue
        } else {
            // Build a queue based on songs that match "query" or "extras" param
            val mediaFocus: String? = extras?.getString(MediaStore.EXTRA_MEDIA_FOCUS)
            if (mediaFocus == MediaStore.Audio.Artists.ENTRY_CONTENT_TYPE) {
                isArtistFocus = true
                // extras is nullable, so keep using the safe-call operator
                artist = extras?.getString(MediaStore.EXTRA_MEDIA_ARTIST)
            } else if (mediaFocus == MediaStore.Audio.Albums.ENTRY_CONTENT_TYPE) {
                isAlbumFocus = true
                album = extras?.getString(MediaStore.EXTRA_MEDIA_ALBUM)
            }
            // Implement additional "extras" param filtering
        }

        // Implement your logic to retrieve the queue
        var result: String? = when {
            isArtistFocus -> artist?.also { searchMusicByArtist(it) }
            isAlbumFocus -> album?.also { searchMusicByAlbum(it) }
            else -> null
        }
        result = result ?: run {
            // No focus found, search by query for song title
            query?.also { searchMusicBySongTitle(it) }
        }

        if (result?.isNotEmpty() == true) {
            // Immediately start playing from the beginning of the search results
            // Implement your logic to start playing music
            playMusic(result)
        } else {
            // Handle no queue found. Stop playing if the app
            // is currently playing a song
        }
    }

Java

    // isArtistFocus, isAlbumFocus, artist, album, and result are assumed to be
    // fields of the enclosing callback class (declarations not shown).
    @Override
    public void onPlayFromSearch(String query, Bundle extras) {
        if (TextUtils.isEmpty(query)) {
            // The user provided generic string e.g. 'Play music'
            // Build appropriate playlist queue
        } else {
            // Build a queue based on songs that match "query" or "extras" param.
            // Guard against a null bundle before reading from it.
            String mediaFocus =
                    extras == null ? null : extras.getString(MediaStore.EXTRA_MEDIA_FOCUS);
            if (TextUtils.equals(mediaFocus, MediaStore.Audio.Artists.ENTRY_CONTENT_TYPE)) {
                isArtistFocus = true;
                artist = extras.getString(MediaStore.EXTRA_MEDIA_ARTIST);
            } else if (TextUtils.equals(mediaFocus, MediaStore.Audio.Albums.ENTRY_CONTENT_TYPE)) {
                isAlbumFocus = true;
                album = extras.getString(MediaStore.EXTRA_MEDIA_ALBUM);
            }
            // Implement additional "extras" param filtering
        }

        // Implement your logic to retrieve the queue
        if (isArtistFocus) {
            result = searchMusicByArtist(artist);
        } else if (isAlbumFocus) {
            result = searchMusicByAlbum(album);
        }

        if (result == null) {
            // No focus found, search by query for song title
            result = searchMusicBySongTitle(query);
        }

        if (result != null && !result.isEmpty()) {
            // Immediately start playing from the beginning of the search results
            // Implement your logic to start playing music
            playMusic(result);
        } else {
            // Handle no queue found. Stop playing if the app
            // is currently playing a song
        }
    }

For a more detailed example of how to implement voice search to play audio content in your app, see the Universal Android Music Player sample.

Handle empty queries

When onPrepare(), onPlay(), onPrepareFromSearch(), or onPlayFromSearch() is called without a search query, your media app should play the "current" media. If there is no current media, the app should try to play something, such as a song from the most recent playlist or a random queue. Assistant uses these APIs when a user asks to "Play music on [your app name]" without additional information.

When a user says "Play music on [your app name]", Android Automotive OS or Android Auto attempts to launch your app and play audio by calling your app's onPlayFromSearch() method. However, because the user did not say the name of the media item, the onPlayFromSearch() method receives an empty query parameter. In these cases, your app should respond by immediately playing audio, such as a song from the most recent playlist or a random queue.
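The fallback order for empty queries can be sketched in plain Java as follows. This is only an illustration under assumptions: the class EmptyQueryFallback, its pick() method, and the use of String track identifiers are hypothetical, and a real app would call something like this from onPlay() or onPlayFromSearch() when the query is empty.

```java
import java.util.List;
import java.util.Optional;
import java.util.Random;

// Hypothetical helper that decides what to play when no search query is given.
final class EmptyQueryFallback {
    private EmptyQueryFallback() {}

    /**
     * Picks a track in this order: the current media, the first track of the
     * most recent playlist, then a random track from the library.
     */
    static Optional<String> pick(String current, List<String> recentPlaylist,
                                 List<String> library, Random random) {
        if (current != null) {
            return Optional.of(current);
        }
        if (!recentPlaylist.isEmpty()) {
            return Optional.of(recentPlaylist.get(0));
        }
        if (!library.isEmpty()) {
            return Optional.of(library.get(random.nextInt(library.size())));
        }
        // Nothing playable; the caller decides how to report this.
        return Optional.empty();
    }
}
```

Passing the Random instance in keeps the random branch reproducible in tests.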

Declare legacy support for voice actions

In most cases, handling the playback actions described above gives your app all the playback functionality it needs. However, some systems require your app to contain an intent filter for search. You should declare support for this intent filter in your app's manifest files.

Include this code in the manifest file for a phone app:

    <activity>
        <intent-filter>
            <action android:name="android.media.action.MEDIA_PLAY_FROM_SEARCH" />
            <category android:name="android.intent.category.DEFAULT" />
        </intent-filter>
    </activity>

Transport controls

Once your app's media session is active, Assistant can issue voice commands to control playback and update media metadata. For this to work, your code should enable the following actions and implement the corresponding callbacks:

| Action | Callback | Description |
|---|---|---|
| ACTION_SKIP_TO_NEXT | onSkipToNext() | Next video |
| ACTION_SKIP_TO_PREVIOUS | onSkipToPrevious() | Previous song |
| ACTION_PAUSE, ACTION_PLAY_PAUSE | onPause() | Pause |
| ACTION_STOP | onStop() | Stop |
| ACTION_PLAY | onPlay() | Resume |
| ACTION_SEEK_TO | onSeekTo() | Rewind 30 seconds |
| ACTION_SET_RATING | onSetRating(android.support.v4.media.RatingCompat) | Thumbs up/down |
| ACTION_SET_CAPTIONING_ENABLED | onSetCaptioningEnabled(boolean) | Turn captioning on/off |

Note:

- For seek commands to work, the PlaybackState must be up to date with the state, position, playback speed, and update time. The app must call setPlaybackState() when the state changes.
- The media app must also keep the media session metadata up to date. This supports questions like "What song is playing?" The app must call setMetadata() when the applicable fields (such as track title, artist, and name) change.
- MediaSession.setRatingType() must be set to indicate the type of rating the app supports, and the app must implement onSetRating(). If the app does not support rating, it should set the rating type to RATING_NONE.

The voice actions you support will likely vary by content type.

| Content type | Required actions |
|---|---|
| Music | Must support: Play, Pause, Stop, Skip to Next, and Skip to Previous. Strongly recommend support for: Seek To |
| Podcast | Must support: Play, Pause, Stop, and Seek To. Recommend support for: Skip to Next and Skip to Previous |
| Audiobook | Must support: Play, Pause, Stop, and Seek To |
| Radio | Must support: Play, Pause, and Stop |
| News | Must support: Play, Pause, Stop, Skip to Next, and Skip to Previous |
| Video | Must support: Play, Pause, Stop, Seek To, Rewind, and Fast Forward. Strongly recommend support for: Skip to Next and Skip to Previous |

You must support as many of the actions listed above as your product offerings allow, and respond gracefully to any other actions. For example, if only premium users can return to the previous item, you might raise an error if a free-tier user asks Assistant to return to the previous item. See the error handling section for more details.
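One way to check an app against the "must support" column is a small lookup, sketched here in plain Java. The class RequiredActions and its enums are hypothetical names introduced for illustration; they model the table rather than any Android API, and the recommended (non-mandatory) actions are deliberately left out.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// Hypothetical model of the "must support" actions per content type.
final class RequiredActions {
    enum ContentType { MUSIC, PODCAST, AUDIOBOOK, RADIO, NEWS, VIDEO }

    enum Action {
        PLAY, PAUSE, STOP, SKIP_TO_NEXT, SKIP_TO_PREVIOUS, SEEK_TO, REWIND, FAST_FORWARD
    }

    private static final Map<ContentType, Set<Action>> REQUIRED =
            new EnumMap<>(ContentType.class);

    static {
        Set<Action> basic = EnumSet.of(Action.PLAY, Action.PAUSE, Action.STOP);
        Set<Action> withSkip = EnumSet.of(Action.PLAY, Action.PAUSE, Action.STOP,
                Action.SKIP_TO_NEXT, Action.SKIP_TO_PREVIOUS);
        Set<Action> withSeek = EnumSet.of(Action.PLAY, Action.PAUSE, Action.STOP,
                Action.SEEK_TO);
        REQUIRED.put(ContentType.MUSIC, withSkip);
        REQUIRED.put(ContentType.PODCAST, withSeek);
        REQUIRED.put(ContentType.AUDIOBOOK, withSeek);
        REQUIRED.put(ContentType.RADIO, basic);
        REQUIRED.put(ContentType.NEWS, withSkip);
        REQUIRED.put(ContentType.VIDEO, EnumSet.of(Action.PLAY, Action.PAUSE, Action.STOP,
                Action.SEEK_TO, Action.REWIND, Action.FAST_FORWARD));
    }

    private RequiredActions() {}

    /** Returns the required actions that the declared set does not cover. */
    static Set<Action> missing(ContentType type, Set<Action> declared) {
        Set<Action> result = EnumSet.copyOf(REQUIRED.get(type));
        result.removeAll(declared);
        return result;
    }
}
```

An empty result from missing() means every mandatory action for that content type is declared.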

Example voice queries to try

The following table shows some sample queries you can use to test your implementation:

| MediaSession callback | Content type | "Hey Google" phrase to use |
|---|---|---|
| onPlay() | | "Play." "Resume." |
| onPlayFromSearch(), onPlayFromUri() | Music | "Play music or songs on (app name)." This is an empty query. "Play (song \| artist \| album \| genre \| playlist) on (app name)." |
| | Radio | "Play (frequency \| station) on (app name)." |
| | Audiobook | "Read my audiobook on (app name)." "Read (audiobook) on (app name)." |
| | Podcasts | "Play (podcast) on (app name)." |
| onPause() | | "Pause." |
| onStop() | | "Stop." |
| onSkipToNext() | | "Next (song \| episode \| track)." |
| onSkipToPrevious() | | "Previous (song \| episode \| track)." |
| onSeekTo() | | "Restart." "Skip ahead ## seconds." "Go back ## minutes." |
| None (keep your MediaMetadata updated) | | "What's playing?" |

Errors

Assistant handles errors from a media session when they occur and reports them to users. Make sure your media session updates the transport state and error code in its PlaybackState correctly, as described in Working with a media session. Assistant recognizes all error codes returned by getErrorCode().

Commonly mishandled cases

Here are some examples of error conditions that you should make sure you handle correctly:

- User needs to sign in
  - Set the PlaybackState error code to ERROR_CODE_AUTHENTICATION_EXPIRED.
  - Set the PlaybackState error message.
  - If sign-in is required for playback, set the PlaybackState state to STATE_ERROR; otherwise keep the rest of the PlaybackState as is.
- User requests an unavailable action
  - Set the PlaybackState error code appropriately. For example, set it to ERROR_CODE_NOT_SUPPORTED if the action is not supported, or to ERROR_CODE_PREMIUM_ACCOUNT_REQUIRED if the action is sign-in protected.
  - Set the PlaybackState error message.
  - Keep the rest of the PlaybackState as is.
- User requests content that is not available in the app
  - Set the PlaybackState error code appropriately. For example, use ERROR_CODE_NOT_AVAILABLE_IN_REGION.
  - Set the PlaybackState error message.
  - Set the PlaybackState state to STATE_ERROR to interrupt playback; otherwise keep the rest of the PlaybackState as is.
- User requests content for which no exact match is available, for example a free-tier user asking for content that is only available to premium-tier users
  - We recommend that you do not return an error and instead prioritize finding something similar to play. Assistant handles speaking the most relevant voice response before playback starts.
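The cases above amount to a small decision table, which the following plain-Java sketch spells out. The class PlaybackErrors, the Situation enum, and both methods are hypothetical; the returned strings are only the names of the error-code constants mentioned in the list, and a real app would set the corresponding constants on its PlaybackState instead.

```java
// Hypothetical decision table for the error cases listed above.
final class PlaybackErrors {
    enum Situation {
        SIGN_IN_REQUIRED,
        ACTION_NOT_SUPPORTED,
        ACTION_NEEDS_PREMIUM,
        CONTENT_NOT_IN_REGION,
        NO_EXACT_MATCH
    }

    private PlaybackErrors() {}

    /** Returns the name of the error code to report, or null when no error should be set. */
    static String errorCodeFor(Situation situation) {
        switch (situation) {
            case SIGN_IN_REQUIRED:
                return "ERROR_CODE_AUTHENTICATION_EXPIRED";
            case ACTION_NOT_SUPPORTED:
                return "ERROR_CODE_NOT_SUPPORTED";
            case ACTION_NEEDS_PREMIUM:
                return "ERROR_CODE_PREMIUM_ACCOUNT_REQUIRED";
            case CONTENT_NOT_IN_REGION:
                return "ERROR_CODE_NOT_AVAILABLE_IN_REGION";
            default:
                // NO_EXACT_MATCH: play something similar instead of failing.
                return null;
        }
    }

    /** Returns true when the state should also switch to STATE_ERROR. */
    static boolean switchesToErrorState(Situation situation, boolean signInNeededForPlayback) {
        switch (situation) {
            case SIGN_IN_REQUIRED:
                return signInNeededForPlayback;
            case CONTENT_NOT_IN_REGION:
                return true;
            default:
                // Unavailable actions and inexact matches leave the state untouched.
                return false;
        }
    }
}
```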

Playback with an intent

Assistant can launch an audio or video app and start playback by sending an intent with a deep link.

The intent and its deep link can come from different sources:

- When Assistant is starting a mobile app, it can use Google Search to retrieve marked-up content that provides a watch action with a link.
- When Assistant is starting a TV app, your app should include a TV Search Provider to expose URIs for media content. Assistant sends a query to the content provider, which should return an intent containing a URI for the deep link and an optional action. If the query returns an action in the intent, Assistant sends that action and the URI back to your app. If the provider did not specify an action, Assistant adds ACTION_VIEW to the intent.

Assistant adds the extra EXTRA_START_PLAYBACK with the value true to the intent it sends to your app. Your app should start playback when it receives an intent with EXTRA_START_PLAYBACK.

Handle intents while active

Users can ask Assistant to play something while your app is still playing content from a previous request. This means your app can receive new intents to start playback while its playback activity is already launched and active.

Activities that support intents with deep links should override onNewIntent() to handle new requests.

When starting playback, Assistant might add additional flags to the intent it sends to your app. In particular, it may add FLAG_ACTIVITY_CLEAR_TOP or FLAG_ACTIVITY_NEW_TASK, or both. Although your code doesn't need to handle these flags, the Android system responds to them. This might affect the behavior of your app when a second playback request with a new URI arrives while the previous URI is still playing. It's a good idea to test how your app responds in this case. You can use the adb command-line tool to simulate the situation (the constant 0x14000000 is the bitwise OR of the two flags):

adb shell 'am start -a android.intent.action.VIEW --ez android.intent.extra.START_PLAYBACK true -d "<first_uri>"' -f 0x14000000

adb shell 'am start -a android.intent.action.VIEW --ez android.intent.extra.START_PLAYBACK true -d "<second_uri>"' -f 0x14000000
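As a quick check of where the constant 0x14000000 comes from, the plain-Java sketch below ORs the two flag values. The class IntentFlagMask is a hypothetical helper; the two values are copied from Intent.FLAG_ACTIVITY_NEW_TASK and Intent.FLAG_ACTIVITY_CLEAR_TOP so the snippet runs without the Android SDK.

```java
// Hypothetical helper that reproduces the value passed to "am start -f".
final class IntentFlagMask {
    // Copied from android.content.Intent so this runs on a plain JVM.
    static final int FLAG_ACTIVITY_NEW_TASK = 0x10000000;
    static final int FLAG_ACTIVITY_CLEAR_TOP = 0x04000000;

    private IntentFlagMask() {}

    /** Bitwise OR of the two flags Assistant may add to the intent. */
    static int combined() {
        return FLAG_ACTIVITY_NEW_TASK | FLAG_ACTIVITY_CLEAR_TOP;
    }

    /** Formats a flag value the way it is written on the adb command line. */
    static String asHex(int flags) {
        return String.format("0x%08x", flags);
    }
}
```

To test a single flag on its own, pass only that flag's value to -f instead of the combined constant.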

Playback from a service

If your app has a media browser service that allows connections from Assistant, Assistant can start the app by communicating with the service's media session. The media browser service should never launch an Activity. Assistant launches your Activity based on the PendingIntent you define with setSessionActivity().

Make sure to set the MediaSession.Token when you initialize your media browser service. Remember to set the supported playback actions at all times, including during initialization. Assistant expects your media app to set the playback actions before Assistant sends the first playback command.

To start from a service, Assistant implements the media browser client APIs. It performs TransportControls calls that trigger PLAY action callbacks on your app's media session.

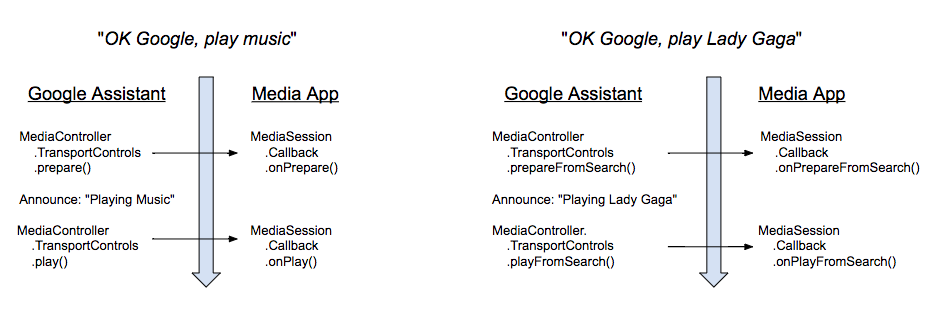

The diagram above shows the order of calls generated by Assistant and the corresponding media session callbacks. (The prepare callbacks are sent only if your app supports them.) All calls are asynchronous. Assistant does not wait for any response from your app.

When a user issues a voice command to play, Assistant responds with a short announcement. As soon as the announcement is complete, Assistant issues a PLAY action. It does not wait for any specific playback state.

If your app supports the ACTION_PREPARE_* actions, Assistant calls the PREPARE action before starting the announcement.

Connect to a MediaBrowserService

To use a service to start your app, Assistant must be able to connect to the app's MediaBrowserService and retrieve its MediaSession.Token. Connection requests are handled in the service's onGetRoot() method. There are two ways to handle requests:

- Accept all connection requests
- Accept connection requests from the Assistant app only

Accept all connection requests

You must return a BrowserRoot to allow Assistant to send commands to your media session. The easiest way is to allow all MediaBrowser apps to connect to your MediaBrowserService. You must return a non-null BrowserRoot. Here is the applicable code from the Universal Music Player:

Kotlin

    override fun onGetRoot(
        clientPackageName: String,
        clientUid: Int,
        rootHints: Bundle?
    ): BrowserRoot? {
        // To ensure you are not allowing any arbitrary app to browse your app's contents, you
        // need to check the origin:
        if (!packageValidator.isCallerAllowed(this, clientPackageName, clientUid)) {
            // If the request comes from an untrusted package, return an empty browser root.
            // If you return null, then the media browser will not be able to connect and
            // no further calls will be made to other media browsing methods.
            Log.i(
                TAG,
                "OnGetRoot: Browsing NOT ALLOWED for unknown caller. Returning empty " +
                    "browser root so all apps can use MediaController. $clientPackageName"
            )
            return MediaBrowserServiceCompat.BrowserRoot(MEDIA_ID_EMPTY_ROOT, null)
        }

        // Return browser roots for browsing...
    }

Java

    @Override
    public BrowserRoot onGetRoot(@NonNull String clientPackageName, int clientUid,
                                 Bundle rootHints) {
        // To ensure you are not allowing any arbitrary app to browse your app's contents, you
        // need to check the origin:
        if (!packageValidator.isCallerAllowed(this, clientPackageName, clientUid)) {
            // If the request comes from an untrusted package, return an empty browser root.
            // If you return null, then the media browser will not be able to connect and
            // no further calls will be made to other media browsing methods.
            LogHelper.i(TAG, "OnGetRoot: Browsing NOT ALLOWED for unknown caller. "
                    + "Returning empty browser root so all apps can use MediaController."
                    + clientPackageName);
            return new MediaBrowserServiceCompat.BrowserRoot(MEDIA_ID_EMPTY_ROOT, null);
        }

        // Return browser roots for browsing...
    }

Accept the Assistant app package and signature

You can explicitly allow Assistant to connect to your media browser service by checking for its package name and signature. Your app receives the package name in the onGetRoot method of your MediaBrowserService. You must return a BrowserRoot to allow Assistant to send commands to your media session. The Universal Music Player sample keeps a list of known package names and signatures. Below are the package names and signatures used by Google Assistant.

<signature name="Google" package="com.google.android.googlequicksearchbox">

<key release="false">19:75:b2:f1:71:77:bc:89:a5:df:f3:1f:9e:64:a6:ca:e2:81:a5:3d:c1:d1:d5:9b:1d:14:7f:e1:c8:2a:fa:00</key>

<key release="true">f0:fd:6c:5b:41:0f:25:cb:25:c3:b5:33:46:c8:97:2f:ae:30:f8:ee:74:11:df:91:04:80:ad:6b:2d:60:db:83</key>

</signature>

<signature name="Google Assistant on Android Automotive OS" package="com.google.android.carassistant">

<key release="false">17:E2:81:11:06:2F:97:A8:60:79:7A:83:70:5B:F8:2C:7C:C0:29:35:56:6D:46:22:BC:4E:CF:EE:1B:EB:F8:15</key>

<key release="true">74:B6:FB:F7:10:E8:D9:0D:44:D3:40:12:58:89:B4:23:06:A6:2C:43:79:D0:E5:A6:62:20:E3:A6:8A:BF:90:E2</key>

</signature>
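A list of known packages and signatures like the one above could be consulted with a lookup along these lines. This is a minimal plain-Java sketch under assumptions: the class CallerAllowlist and its methods are hypothetical, fingerprints are treated as opaque colon-separated strings, and obtaining the caller's actual fingerprint from the platform is outside its scope. Because the entries above mix lowercase and uppercase hex, the sketch normalizes case before comparing.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

// Hypothetical allowlist keyed by package name.
final class CallerAllowlist {
    private final Map<String, Set<String>> fingerprintsByPackage = new HashMap<>();

    /** Registers one known fingerprint for a package. */
    void allow(String packageName, String fingerprint) {
        fingerprintsByPackage
                .computeIfAbsent(packageName, key -> new HashSet<>())
                .add(normalize(fingerprint));
    }

    /** Returns true only when both the package and its fingerprint are known. */
    boolean isAllowed(String packageName, String fingerprint) {
        Set<String> known = fingerprintsByPackage.get(packageName);
        return known != null
                && fingerprint != null
                && known.contains(normalize(fingerprint));
    }

    // Hex digits may appear in either case, so compare in lowercase.
    private static String normalize(String fingerprint) {
        return fingerprint.trim().toLowerCase(Locale.ROOT);
    }
}
```

In onGetRoot(), a check like isAllowed() would decide whether to return the full browser root or an empty one.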