General - Media

- ABR

Adaptive Bitrate. An ABR algorithm selects among a number of tracks during playback, where each track presents the same media at a different bitrate.

- Adaptive streaming

In adaptive streaming, multiple tracks are available that present the same media at different bitrates. The selected track is chosen dynamically during playback using an ABR algorithm.
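As an illustration of the selection step described above, the following is a minimal sketch of one common ABR heuristic: pick the highest-bitrate track that fits within a fraction of the estimated bandwidth, and fall back to the lowest-bitrate track if none fits. The class name `AbrSketch`, the method `selectTrack`, and the `bandwidthFraction` parameter are hypothetical names chosen for this example. They are not part of ExoPlayer, and real ABR algorithms usually also take buffer levels and other signals into account.

```java
/** Hypothetical sketch of a simple ABR heuristic; not ExoPlayer's implementation. */
final class AbrSketch {

  private AbrSketch() {}

  /**
   * Returns the index of the highest-bitrate track whose bitrate is at most
   * estimatedBandwidthBps * bandwidthFraction. If no track fits the budget,
   * returns the index of the lowest-bitrate track.
   */
  static int selectTrack(
      int[] trackBitratesBps, long estimatedBandwidthBps, double bandwidthFraction) {
    if (trackBitratesBps.length == 0) {
      throw new IllegalArgumentException("At least one track is required");
    }
    // Only spend part of the estimate, leaving headroom for estimation error.
    long budgetBps = (long) (estimatedBandwidthBps * bandwidthFraction);
    int best = -1;
    int lowest = 0;
    for (int i = 0; i < trackBitratesBps.length; i++) {
      if (trackBitratesBps[i] < trackBitratesBps[lowest]) {
        lowest = i;
      }
      boolean fits = trackBitratesBps[i] <= budgetBps;
      if (fits && (best == -1 || trackBitratesBps[i] > trackBitratesBps[best])) {
        best = i;
      }
    }
    return best != -1 ? best : lowest;
  }
}
```

For example, with tracks at 500 kbps, 1.5 Mbps, and 4 Mbps, a bandwidth estimate of 3 Mbps, and a fraction of 0.7, the budget is roughly 2.1 Mbps, so the sketch selects the 1.5 Mbps track.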

- Access unit

A data item within a media container. Generally refers to a small piece of the compressed media bitstream that can be decoded and presented to the user (a video picture or fragment of playable audio).

- AV1

AOMedia Video 1 codec.

For more information, see the Wikipedia page.

- AVC

Advanced Video Coding, also known as the H.264 video codec.

For more information, see the Wikipedia page.

- Codec

This term is overloaded and has multiple meanings depending on the context. The following two definitions are the most commonly used:

- Hardware or software component for encoding or decoding access units.

- Audio or video sample format specification.

- Container

A media container format such as MP4 and Matroska. Such formats are called container formats because they contain one or more tracks of media, where each track uses a particular codec (for example, AAC audio and H.264 video in an MP4 file). Note that some media formats are both a container format and a codec (for example, MP3).

- DASH

Dynamic Adaptive Streaming over HTTP. An industry-driven adaptive streaming protocol. It is defined by ISO/IEC 23009, which can be found on the ISO Publicly Available Standards page.

- DRM

Digital Rights Management.

For more information, see the Wikipedia page.

- Gapless playback

Process by which the end of a track and/or the beginning of the next track are skipped to avoid a silent gap between tracks.

For more information, see the Wikipedia page.
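To make the skipping step concrete, here is a minimal sketch that trims a number of frames from the start and end of a decoded PCM buffer. It assumes mono 16-bit audio (one sample per frame) and that the number of frames to skip is already known, for example from metadata in the media. The class name `GaplessTrimSketch` and its `trim` method are hypothetical and only illustrate the idea.

```java
import java.util.Arrays;

/** Hypothetical sketch of trimming decoded audio for gapless playback. */
final class GaplessTrimSketch {

  private GaplessTrimSketch() {}

  /**
   * Returns a copy of decodedPcm with leadingFrames removed from the start and
   * trailingFrames removed from the end. Assumes mono audio, so one array
   * element is one frame. Negative counts are treated as zero, and counts that
   * exceed the buffer length yield an empty array.
   */
  static short[] trim(short[] decodedPcm, int leadingFrames, int trailingFrames) {
    int from = Math.min(Math.max(leadingFrames, 0), decodedPcm.length);
    int to = Math.max(from, decodedPcm.length - Math.max(trailingFrames, 0));
    return Arrays.copyOfRange(decodedPcm, from, to);
  }
}
```

Calling `trim` on the end of one track and the start of the next, then playing the results back to back, removes the silent frames that would otherwise be heard between them.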

- HEVC

High Efficiency Video Coding, also known as the H.265 video codec.

- HLS

HTTP Live Streaming. Apple's adaptive streaming protocol.

For more information, see the Apple documentation.

- Manifest

A file that defines the structure and location of media in adaptive streaming protocols. Examples include DASH MPD files, HLS multivariant playlist files and Smooth Streaming manifest files. Not to be confused with an AndroidManifest XML file.

- MPD

Media Presentation Description. The manifest file format used in the DASH adaptive streaming protocol.

- PCM

Pulse-Code Modulation.

For more information, see the Wikipedia page.

- Smooth Streaming

Microsoft's adaptive streaming protocol.

For more information, see the Microsoft documentation.

- Track

A single audio, video, text, or metadata stream within a piece of media. A media file will often contain multiple tracks. For example, a video track and an audio track in a video file, or multiple audio tracks in different languages. In adaptive streaming, there are also multiple tracks containing the same content at different bitrates.

General - Android

- AudioTrack

An Android API for playing audio.

For more information, see the Javadoc.

- CDM

Content Decryption Module. A component in the Android platform responsible for decrypting DRM-protected content. CDMs are accessed using Android's MediaDrm API.

For more information, see the Javadoc.

- IMA

Interactive Media Ads. IMA is an SDK that makes it easy to integrate multimedia ads into an app.

For more information, see the IMA documentation.

- MediaCodec

An Android API for accessing media codecs (i.e. encoder and decoder components) in the platform.

For more information, see the Javadoc.

- MediaDrm

An Android API for accessing CDMs in the platform.

For more information, see the Javadoc.

- Audio offload

The ability to send compressed audio directly to a digital signal processor (DSP) provided by the device. Audio offload functionality is useful for low power audio playback.

For more information, see the Android interaction documentation.

- Passthrough

The ability to send compressed audio directly over HDMI, without decoding it first. This is used, for example, to play 5.1 surround sound on an Android TV.

For more information, see the Android interaction documentation.

- Surface

See the Javadoc and the Android graphics documentation.

- Tunneling

Process by which the Android framework receives compressed video and either compressed or PCM audio data and assumes the responsibility for decoding, synchronizing and rendering it, taking over some tasks usually handled by the application. Tunneling may improve audio-to-video (AV) synchronization, may smooth video playback and can reduce the load on the application processor. It is mostly used on Android TVs.

For more information, see the Android interaction documentation and the ExoPlayer article.

ExoPlayer

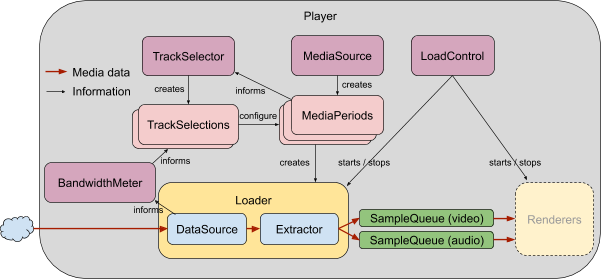

- BandwidthMeter

Component that estimates the network bandwidth, for example by listening to data transfers. In adaptive streaming, bandwidth estimates can be used to select between different bitrate tracks during playback.

For more information, see the component Javadoc.
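The idea of estimating bandwidth by listening to data transfers can be sketched as follows. This example uses an exponentially weighted moving average of per-transfer throughput, which is a technique chosen here for simplicity and is not necessarily what any real BandwidthMeter implementation uses. The class `BandwidthEstimatorSketch`, its methods, and the `alpha` smoothing parameter are all hypothetical.

```java
/** Hypothetical sketch of a bandwidth estimator; not ExoPlayer's BandwidthMeter. */
final class BandwidthEstimatorSketch {

  /** Returned by getBitrateEstimate() before any transfer has been observed. */
  static final long NO_ESTIMATE = -1;

  private final double alpha;
  private double estimateBps = NO_ESTIMATE;

  /** alpha in (0, 1]: weight given to the newest throughput sample. */
  BandwidthEstimatorSketch(double alpha) {
    if (alpha <= 0 || alpha > 1) {
      throw new IllegalArgumentException("alpha must be in (0, 1]");
    }
    this.alpha = alpha;
  }

  /** Records a completed transfer and folds its throughput into the estimate. */
  void onTransferEnd(long bytesTransferred, long elapsedMs) {
    if (bytesTransferred <= 0 || elapsedMs <= 0) {
      return; // Ignore samples that carry no usable throughput information.
    }
    // bytes -> bits (x8), milliseconds -> seconds (x1000).
    double sampleBps = bytesTransferred * 8000.0 / elapsedMs;
    estimateBps =
        estimateBps < 0 ? sampleBps : alpha * sampleBps + (1 - alpha) * estimateBps;
  }

  /** Returns the current estimate in bits per second, or NO_ESTIMATE. */
  long getBitrateEstimate() {
    return estimateBps < 0 ? NO_ESTIMATE : Math.round(estimateBps);
  }
}
```

A data-loading component would call `onTransferEnd` after each completed request, and an ABR algorithm would read `getBitrateEstimate` when choosing the track for the next chunk.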

- DataSource

Component for requesting data (which may be over HTTP, from a local file, etc.).

For more information, see the component Javadoc.

- Extractor

Component that parses a media container format, outputting track information and individual access units belonging to each track suitable for consumption by a decoder.

For more information, see the component Javadoc.

- LoadControl

Component that decides when to start and stop loading, and when to start playback.

For more information, see the component Javadoc.
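The two decisions described above (whether to keep loading, and whether enough is buffered to start playback) can be sketched with simple buffer-duration thresholds. This is an illustrative sketch only: the class `LoadControlSketch`, its constructor parameters, and its simplified method signatures are assumptions made for this example and do not match the actual LoadControl interface.

```java
/** Hypothetical threshold-based sketch; not ExoPlayer's LoadControl interface. */
final class LoadControlSketch {

  private final long minBufferMs;
  private final long maxBufferMs;
  private final long bufferForPlaybackMs;
  private boolean loading = true;

  LoadControlSketch(long minBufferMs, long maxBufferMs, long bufferForPlaybackMs) {
    if (minBufferMs > maxBufferMs) {
      throw new IllegalArgumentException("minBufferMs must not exceed maxBufferMs");
    }
    this.minBufferMs = minBufferMs;
    this.maxBufferMs = maxBufferMs;
    this.bufferForPlaybackMs = bufferForPlaybackMs;
  }

  /**
   * Decides whether loading should continue. Loading resumes when the buffer
   * drops below minBufferMs and stops once it reaches maxBufferMs. Between the
   * two thresholds the previous decision is kept, which avoids rapidly
   * toggling between loading and idle.
   */
  boolean shouldContinueLoading(long bufferedDurationMs) {
    if (bufferedDurationMs < minBufferMs) {
      loading = true;
    } else if (bufferedDurationMs >= maxBufferMs) {
      loading = false;
    }
    return loading;
  }

  /** Decides whether enough media is buffered for playback to start. */
  boolean shouldStartPlayback(long bufferedDurationMs) {
    return bufferedDurationMs >= bufferForPlaybackMs;
  }
}
```

Using separate lower and upper thresholds is a design choice in this sketch: a single threshold would cause loading to start and stop on every small change in buffer level.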

- MediaSource

Provides high-level information about the structure of media (as a Timeline) and creates MediaPeriod instances (corresponding to periods of the Timeline) for playback.

For more information, see the component Javadoc.

- MediaPeriod

Loads a single piece of media (such as an audio file, an ad, content interleaved between two ads, etc.), and allows the loaded media to be read (typically by Renderers). The decisions about which tracks within the media are loaded and when loading starts and stops are made by the TrackSelector and the LoadControl respectively.

For more information, see the component Javadoc.

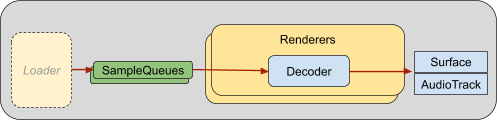

- Renderer

Component that reads, decodes, and renders media samples. Surface and AudioTrack are the standard Android platform components to which video and audio data are rendered.

For more information, see the component Javadoc.

- Timeline

Represents the structure of media, from simple cases like a single media file through to complex compositions of media such as playlists and streams with inserted ads.

For more information, see the component Javadoc.

- TrackGroup

Group containing one or more representations of the same video, audio, or text content, normally at different bitrates for adaptive streaming.

For more information, see the component Javadoc.

- TrackSelection

A selection consisting of a static subset of tracks from a TrackGroup and a possibly varying selected track from the subset. For adaptive streaming, the TrackSelection is responsible for selecting the appropriate track whenever a new media chunk starts being loaded.

For more information, see the component Javadoc.

- TrackSelector

Selects tracks for playback. Given track information for the MediaPeriod to be played, along with the capabilities of the player's Renderers, a TrackSelector will generate a TrackSelection for each Renderer.

For more information, see the component Javadoc.