AAudio is a new Android C API introduced in the Android O release. It is designed for high-performance audio apps that require low latency. Apps communicate with AAudio by reading and writing data to streams.

The AAudio API is minimal by design. It doesn't perform these functions:

- Audio device enumeration

- Automated routing between audio endpoints

- File I/O

- Decoding of compressed audio

- Automatic presentation of all input/output streams in a single callback

Getting started

You can call AAudio from C++ code. To add the AAudio feature set to your app, include the AAudio.h header file:

#include <aaudio/AAudio.h>

Audio streams

AAudio moves audio data between your app and the audio inputs and outputs on your Android device. Your app passes data in and out by reading from and writing to audio streams, represented by the AAudioStream structure. The read/write calls can be blocking or non-blocking.

A stream is defined by the following:

- The audio device that is the source or sink for the data in the stream.

- The sharing mode, which determines whether the stream has exclusive access to an audio device that might otherwise be shared among multiple streams.

- The format of the audio data in the stream.

Audio device

Each stream is attached to a single audio device.

An audio device is a hardware interface or virtual endpoint that acts as a source or sink for a continuous stream of digital audio data. Don't confuse an audio device (a built-in mic or a Bluetooth headset) with the Android device (the phone or watch) that is running your app.

You can use the AudioManager method getDevices() to discover the audio devices that are available on your Android device. The method returns information about the type of each device.

Each audio device has a unique ID on the Android device. You can use the ID to bind an audio stream to a specific audio device. However, in most cases you can let AAudio choose the default primary device rather than specifying one yourself.

The audio device attached to a stream determines whether the stream is for input or output. A stream can only move data in one direction. When you define a stream, you also set its direction. When you open a stream, Android checks that the audio device and the stream direction agree.

Sharing mode

A stream has a sharing mode:

- AAUDIO_SHARING_MODE_EXCLUSIVE means the stream has exclusive access to its audio device; the device cannot be used by any other audio stream. If the audio device is already in use, the stream might not be able to get exclusive access. Exclusive streams are likely to have lower latency, but they are also more likely to be disconnected. Close exclusive streams as soon as you no longer need them, so that other apps can access the device. Exclusive streams provide the lowest possible latency.

- AAUDIO_SHARING_MODE_SHARED allows AAudio to mix audio. AAudio mixes all the shared streams assigned to the same device.

You can explicitly set the sharing mode when you create a stream. By default, the sharing mode is SHARED.

Audio format

The data passed through a stream has the usual digital audio attributes:

- Sample data format

- Number of channels (samples per frame)

- Sample rate

AAudio supports these sample formats:

| aaudio_format_t | C data type | Notes |
|---|---|---|
| AAUDIO_FORMAT_PCM_I16 | int16_t | common 16-bit samples, Q0.15 format |
| AAUDIO_FORMAT_PCM_FLOAT | float | -1.0 to +1.0 |
| AAUDIO_FORMAT_PCM_I24_PACKED | uint8_t in groups of 3 | packed 24-bit samples, Q0.23 format |
| AAUDIO_FORMAT_PCM_I32 | int32_t | common 32-bit samples, Q0.31 format |
| AAUDIO_FORMAT_IEC61937 | uint8_t | compressed audio wrapped in IEC61937 for HDMI or S/PDIF passthrough |
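The table translates directly into buffer arithmetic: a frame holds one sample per channel, so its size in bytes is the channel count times the sample size. The sketch below assumes a hypothetical SampleFormat enum that mirrors the four PCM rows of the table; it is not an AAudio type, and AAUDIO_FORMAT_IEC61937 is left out because its payload is not plain PCM samples.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the PCM rows of the format table (not an AAudio type).
enum class SampleFormat { PcmI16, PcmFloat, PcmI24Packed, PcmI32 };

// Bytes occupied by one sample in each format.
constexpr int32_t bytesPerSample(SampleFormat format) {
    switch (format) {
        case SampleFormat::PcmI16:       return 2;  // int16_t
        case SampleFormat::PcmFloat:     return 4;  // float
        case SampleFormat::PcmI24Packed: return 3;  // 3 x uint8_t
        case SampleFormat::PcmI32:       return 4;  // int32_t
    }
    return 0;
}

// A frame holds one sample per channel.
constexpr int32_t bytesPerFrame(SampleFormat format, int32_t channelCount) {
    return bytesPerSample(format) * channelCount;
}

// Bytes needed to hold numFrames frames.
constexpr int64_t bufferBytes(SampleFormat format, int32_t channelCount, int32_t numFrames) {
    return static_cast<int64_t>(bytesPerFrame(format, channelCount)) * numFrames;
}

// Playback duration of numFrames frames, in milliseconds.
constexpr double framesToMillis(int32_t numFrames, int32_t sampleRate) {
    return 1000.0 * numFrames / sampleRate;
}
```

For example, 192 frames of stereo float data occupy 192 × 2 × 4 = 1536 bytes and last 4 ms at 48 kHz.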

If you request a specific sample format, the stream will use that format, even if the format is not optimal for the device. If you don't specify a sample format, AAudio chooses an optimal one. After the stream is opened, you must query the sample data format and then convert data if necessary, as in this example:

    aaudio_format_t dataFormat = AAudioStream_getFormat(stream);
    //... later
    if (dataFormat == AAUDIO_FORMAT_PCM_I16) {
        convertFloatToPcm16(...);  // app-defined conversion helper
    }
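convertFloatToPcm16() in the snippet above is an app-defined helper, not part of AAudio. A minimal sketch of one, assuming the signature (source, destination, sample count) and float input nominally in the -1.0 to +1.0 range from the table, clips and scales each sample into the Q0.15 range:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Sketch of a float -> PCM_I16 converter; the signature is an assumption.
// Samples outside [-1.0, +1.0] are clipped before scaling.
void convertFloatToPcm16(const float *source, int16_t *destination, int32_t numSamples) {
    for (int32_t i = 0; i < numSamples; ++i) {
        float sample = source[i];
        if (sample > 1.0f) sample = 1.0f;    // clip positive overshoot
        if (sample < -1.0f) sample = -1.0f;  // clip negative overshoot
        // Scale to Q0.15 and round to the nearest integer.
        destination[i] = static_cast<int16_t>(std::lround(sample * 32767.0f));
    }
}
```

Scaling by 32767 keeps the mapping symmetric, so -1.0 becomes -32767 rather than -32768; other conventions exist.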

Creating an audio stream

The AAudio library follows a builder design pattern and provides AAudioStreamBuilder.

- Create an AAudioStreamBuilder:

      AAudioStreamBuilder *builder;
      aaudio_result_t result = AAudio_createStreamBuilder(&builder);

- Set the audio stream configuration in the builder, using the builder functions that correspond to the stream parameters. These optional set functions are available:

      AAudioStreamBuilder_setDeviceId(builder, deviceId);
      AAudioStreamBuilder_setDirection(builder, direction);
      AAudioStreamBuilder_setSharingMode(builder, mode);
      AAudioStreamBuilder_setSampleRate(builder, sampleRate);
      AAudioStreamBuilder_setChannelCount(builder, channelCount);
      AAudioStreamBuilder_setFormat(builder, format);
      AAudioStreamBuilder_setBufferCapacityInFrames(builder, frames);

  Note that these methods do not report errors, such as an undefined constant or a value out of range.

  If you do not specify the device ID, the default is the primary output device. If you do not specify the stream direction, the default is an output stream. For all other parameters, you can set a value explicitly, or let the system assign the optimal value by not specifying the parameter at all or by setting it to AAUDIO_UNSPECIFIED.

  To be safe, check the state of the audio stream after you create it, as described in the fourth step below.

- When the AAudioStreamBuilder is configured, use it to create a stream:

      AAudioStream *stream;
      result = AAudioStreamBuilder_openStream(builder, &stream);
      // Check that result == AAUDIO_OK before using the stream.

- After creating the stream, verify its configuration. If you specified the sample format, sample rate, or samples per frame, they will not change. If you specified the sharing mode or buffer capacity, they might change depending on the capabilities of the stream's audio device and of the Android device it runs on. As a matter of good defensive programming, check the stream's configuration before you use it. Each builder setting has a corresponding stream getter:

  | Builder setting | Stream getter |
  |---|---|
  | AAudioStreamBuilder_setDeviceId() | AAudioStream_getDeviceId() |
  | AAudioStreamBuilder_setDirection() | AAudioStream_getDirection() |
  | AAudioStreamBuilder_setSharingMode() | AAudioStream_getSharingMode() |
  | AAudioStreamBuilder_setSampleRate() | AAudioStream_getSampleRate() |
  | AAudioStreamBuilder_setChannelCount() | AAudioStream_getChannelCount() |
  | AAudioStreamBuilder_setFormat() | AAudioStream_getFormat() |
  | AAudioStreamBuilder_setBufferCapacityInFrames() | AAudioStream_getBufferCapacityInFrames() |

- You can save the builder and reuse it later to create more streams. If you no longer plan to use it, delete it:

      AAudioStreamBuilder_delete(builder);
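The verification step boils down to comparing what was requested with what the opened stream reports. In the sketch below, StreamConfig and unmetRequests() are hypothetical; in an app, the actual values would come from AAudioStream_get*() calls such as AAudioStream_getSharingMode() and AAudioStream_getBufferCapacityInFrames(), and a zero field stands in for "unspecified".

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical snapshot of stream settings; 0 means "unspecified" in this sketch.
struct StreamConfig {
    bool exclusive = false;  // true = EXCLUSIVE, false = SHARED
    int32_t sampleRate = 0;
    int32_t channelCount = 0;
    int32_t bufferCapacityInFrames = 0;
};

// Names of settings the app asked for that the opened stream did not honor.
// Unspecified requests are skipped because the system may choose any value for them.
std::vector<std::string> unmetRequests(const StreamConfig &requested,
                                       const StreamConfig &actual) {
    std::vector<std::string> unmet;
    if (requested.exclusive && !actual.exclusive) unmet.push_back("sharingMode");
    if (requested.sampleRate != 0 && requested.sampleRate != actual.sampleRate)
        unmet.push_back("sampleRate");
    if (requested.channelCount != 0 && requested.channelCount != actual.channelCount)
        unmet.push_back("channelCount");
    // A larger capacity than requested is treated as acceptable.
    if (requested.bufferCapacityInFrames != 0 &&
        actual.bufferCapacityInFrames < requested.bufferCapacityInFrames)
        unmet.push_back("bufferCapacityInFrames");
    return unmet;
}
```

Accepting a larger buffer capacity than requested is a judgment call; an app that sizes memory tightly might want an exact match instead.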

Using an audio stream

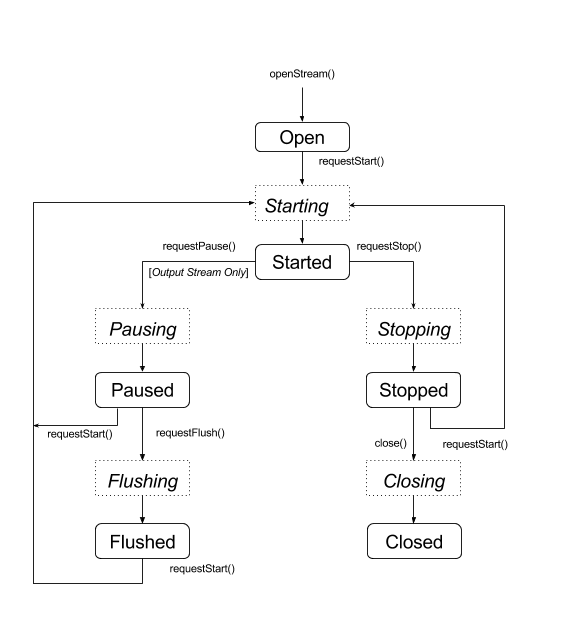

State transitions

An AAudio stream is usually in one of five stable states (the error state, Disconnected, is described at the end of this section):

- Open

- Started

- Paused

- Flushed

- Stopped

Data only flows through a stream when the stream is in the Started state. To move a stream from one state to another, use one of the functions that request a state transition:

    aaudio_result_t result;
    result = AAudioStream_requestStart(stream);
    result = AAudioStream_requestStop(stream);
    result = AAudioStream_requestPause(stream);
    result = AAudioStream_requestFlush(stream);

Note that you can only request pause or flush on an output stream.

These functions are asynchronous, and the state change doesn't happen immediately. When you request a state change, the stream moves to one of the corresponding transient states:

- Starting

- Pausing

- Flushing

- Stopping

- Closing

The state diagram shows stable states as rounded rectangles and transient states as dotted rectangles.

Although it is not shown in the diagram, you can call close() from any state.
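The request functions and their transient states can be captured in a small model. This is a simplified sketch that assumes hypothetical State, Request, and Direction enums rather than AAudio's aaudio_stream_state_t; it encodes only the rule that pause and flush apply to output streams and ignores any other preconditions the real API may enforce.

```cpp
#include <cassert>
#include <optional>

// Simplified model of the stable and transient states described above (not AAudio's enum).
enum class State { Open, Starting, Started, Pausing, Paused,
                   Flushing, Flushed, Stopping, Stopped };
enum class Request { Start, Pause, Flush, Stop };
enum class Direction { Input, Output };

// Transient state entered right after a request, or std::nullopt if the request is
// not allowed. Only the "pause and flush are for output streams" rule is modeled.
std::optional<State> transientStateFor(Request request, Direction direction) {
    switch (request) {
        case Request::Start:
            return State::Starting;
        case Request::Pause:
            if (direction == Direction::Input) return std::nullopt;
            return State::Pausing;
        case Request::Flush:
            if (direction == Direction::Input) return std::nullopt;
            return State::Flushing;
        case Request::Stop:
            return State::Stopping;
    }
    return std::nullopt;
}

// Stable state a transient state normally settles into; stable states map to themselves.
State settledState(State state) {
    switch (state) {
        case State::Starting: return State::Started;
        case State::Pausing:  return State::Paused;
        case State::Flushing: return State::Flushed;
        case State::Stopping: return State::Stopped;
        default:              return state;
    }
}
```

The word "normally" matters: as the text explains, arriving at the stable state is expected but not guaranteed.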

AAudio doesn't provide callbacks to notify you of state changes. To wait for a state change, you can use the special function AAudioStream_waitForStateChange(stream, inputState, nextState, timeout).

The function does not detect a state change on its own, and it does not wait for a specific state. It waits until the current state is different from inputState, which you specify.

For example, after you request a pause, the stream should immediately enter the transient state Pausing and arrive some time later at the Paused state, though there is no guarantee that it will.

Because you can't wait for the Paused state, use waitForStateChange() to wait for any state other than Pausing. Here's how to do that:

    aaudio_stream_state_t inputState = AAUDIO_STREAM_STATE_PAUSING;
    aaudio_stream_state_t nextState = AAUDIO_STREAM_STATE_UNINITIALIZED;
    int64_t timeoutNanos = 100 * AAUDIO_NANOS_PER_MILLISECOND;
    result = AAudioStream_requestPause(stream);
    result = AAudioStream_waitForStateChange(stream, inputState, &nextState, timeoutNanos);

If the stream's state is not Pausing (the inputState, which we assumed was the current state at the time of the call), the function returns immediately. Otherwise, it blocks until the state is no longer Pausing or the timeout expires. When the function returns, the nextState parameter holds the current state of the stream.

You can use the same technique after requesting start, stop, or flush, using the corresponding transient state as the inputState. Do not call waitForStateChange() after calling AAudioStream_close(), because the stream is deleted as soon as it closes. And do not call AAudioStream_close() while waitForStateChange() is running in another thread.
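To make the contract of AAudioStream_waitForStateChange() concrete, the sketch below reimplements it on a hypothetical StateHolder class: return at once if the state already differs from inputState, otherwise block until it changes or the timeout expires, and always report the current state through nextState. It uses a mutex and condition variable purely for illustration and says nothing about how AAudio implements the call; returning false on timeout is this sketch's own convention, not AAudio's result code.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <thread>  // for simulating the thread that drives state changes

// Hypothetical states; only the ones needed for the pause example are listed.
enum class StreamState { Started, Pausing, Paused };

// Hypothetical holder that mimics the waitForStateChange() contract.
class StateHolder {
public:
    explicit StateHolder(StreamState initial) : state_(initial) {}

    // Called by whatever drives the stream (a background thread in this sketch).
    void setState(StreamState next) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            state_ = next;
        }
        changed_.notify_all();
    }

    // Returns true as soon as the state differs from inputState (immediately if it
    // already does) and false if timeoutNanos elapses first. *nextState always
    // receives the current state.
    bool waitForStateChange(StreamState inputState, StreamState *nextState,
                            int64_t timeoutNanos) {
        std::unique_lock<std::mutex> lock(mutex_);
        bool changed = changed_.wait_for(lock, std::chrono::nanoseconds(timeoutNanos),
                                         [&] { return state_ != inputState; });
        *nextState = state_;
        return changed;
    }

private:
    std::mutex mutex_;
    std::condition_variable changed_;
    StreamState state_;
};
```

Note that the predicate tests "different from inputState", not "equal to Paused", which is exactly why the text waits on Pausing rather than on Paused.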

Reading and writing to an audio stream

There are two ways to process the data in a stream:

- Use a high-priority callback.

- Use the functions AAudioStream_read(stream, buffer, numFrames, timeoutNanos) and AAudioStream_write(stream, buffer, numFrames, timeoutNanos) to read or write the stream.

For a blocking read or write that transfers the specified number of frames, set timeoutNanos greater than zero. For a non-blocking call, set timeoutNanos to zero. In this case the result is the actual number of frames transferred.

When you read input, verify that the correct number of frames was read. If not, the buffer might contain unknown data that could cause an audio glitch. You can pad the buffer with zeros to create a silent dropout:

    aaudio_result_t result =
            AAudioStream_read(stream, audioData, numFrames, timeout);
    if (result < 0) {
        // Error!
    } else if (result != numFrames) {
        // Pad the buffer with zeros.
        // sample_type and samplesPerFrame are app-defined placeholders.
        memset(static_cast<sample_type*>(audioData) + result * samplesPerFrame, 0,
               sizeof(sample_type) * (numFrames - result) * samplesPerFrame);
    }
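The padding logic above can be factored into a reusable helper. padWithSilence() is a hypothetical name; its template parameter stands in for the placeholder sample_type, and it skips the memset when the read failed, because a negative result must never be used as an offset.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper: zero-fills the frames a short read did not deliver so they play
// as silence instead of stale data. SampleType must match the stream format
// (for example int16_t for AAUDIO_FORMAT_PCM_I16, float for AAUDIO_FORMAT_PCM_FLOAT).
template <typename SampleType>
void padWithSilence(SampleType *audioData, int32_t framesRead, int32_t numFrames,
                    int32_t samplesPerFrame) {
    // A negative framesRead is an error code; leave the buffer for the caller to handle.
    if (framesRead < 0 || framesRead >= numFrames) return;
    const std::size_t firstSample = static_cast<std::size_t>(framesRead) * samplesPerFrame;
    const std::size_t missingSamples =
            static_cast<std::size_t>(numFrames - framesRead) * samplesPerFrame;
    // All-zero bytes represent 0 for integer samples and 0.0 for IEEE float samples.
    std::memset(audioData + firstSample, 0, sizeof(SampleType) * missingSamples);
}
```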

You can prime the stream's buffer before starting the stream by writing data or silence into it. This must be done in a non-blocking call with timeoutNanos set to zero.

The data in the buffer must match the data format returned by AAudioStream_getFormat().

Closing an audio stream

When you are finished using a stream, close it:

AAudioStream_close(stream);

After you close a stream, you cannot use its stream pointer with any AAudio stream-based function.

Closing a stream is not thread-safe. Do not close a stream in one thread while you are using it in another. If you use multiple threads, they must be carefully synchronized. For example, you can put all the stream-handling code on a single thread and send commands to it through an atomic queue.
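One way to build the single-owner design described above is a lock-free single-producer/single-consumer queue: a control thread pushes commands, and the thread that owns the stream pops and executes them. The StreamCommand values and the SpscQueue class are assumptions for illustration, not part of AAudio; with more than one sending thread you would need a multi-producer queue instead.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <optional>

// Hypothetical commands sent to the thread that owns the stream.
enum class StreamCommand { Start, Stop, Close };

// Lock-free single-producer/single-consumer ring buffer.
// One slot stays empty to distinguish "full" from "empty", so it holds Capacity - 1 items.
template <typename T, std::size_t Capacity>
class SpscQueue {
    static_assert(Capacity >= 2, "need at least one usable slot");

public:
    // Producer thread only. Returns false if the queue is full.
    bool push(const T &item) {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        const std::size_t next = (tail + 1) % Capacity;
        if (next == head_.load(std::memory_order_acquire)) return false;  // full
        slots_[tail] = item;
        tail_.store(next, std::memory_order_release);  // publish the item to the consumer
        return true;
    }

    // Consumer thread only. Returns std::nullopt if the queue is empty.
    std::optional<T> pop() {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        if (head == tail_.load(std::memory_order_acquire)) return std::nullopt;  // empty
        T item = slots_[head];
        head_.store((head + 1) % Capacity, std::memory_order_release);  // free the slot
        return item;
    }

private:
    std::array<T, Capacity> slots_{};
    std::atomic<std::size_t> head_{0};  // next slot to read; written by the consumer
    std::atomic<std::size_t> tail_{0};  // next slot to write; written by the producer
};
```

The stream-owning thread would drain the queue in its own loop and call the matching AAudio functions, so no other thread ever touches the stream pointer.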

Disconnected audio stream

An audio stream can become disconnected at any time if one of these events happens:

- The associated audio device is no longer connected (for example, when headphones are unplugged).

- An internal error occurs.

- The audio device is no longer the primary audio device.

When a stream is disconnected, it has the state "Disconnected", and any attempt to execute AAudioStream_write() or other functions returns an error. You must always stop and close a disconnected stream, regardless of the error code.

If you are using a data callback (as opposed to one of the direct read/write methods), you will not receive any return code when the stream is disconnected. To be informed when this happens, write an AAudioStream_errorCallback function and register it using AAudioStreamBuilder_setErrorCallback().

If you are notified of the disconnect in an error callback thread, stopping and closing the stream must be done from another thread. Otherwise, you may have a deadlock.

Note that if you open a new stream, it might have different settings than the original stream (for example, framesPerBurst). The following error callback hands the disconnect off to a new thread:

    #include <thread>

    void errorCallback(AAudioStream *stream,
                       void *userData,
                       aaudio_result_t error) {
        // Launch a new thread to handle the disconnect.
        // my_error_thread_proc is app-defined: it stops and closes the stream
        // (and may open a new one).
        std::thread myThread(my_error_thread_proc, stream, userData);
        myThread.detach(); // Don't wait for the thread to finish.
    }
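The callback above detaches its worker thread. A hypothetical alternative, sketched below, keeps the worker joinable so that the owner can wait for the restart to finish, and uses an atomic flag so that repeated error notifications do not start overlapping restarts. DisconnectHandler and its restart function are assumptions; in an app, the restart function would stop, close, and possibly reopen the stream, always off the callback thread.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical helper that moves disconnect handling off the error-callback thread.
// Assumes onError() is only called from the error callback, and that the owner calls
// join() (or destroys the handler) only when no further callbacks can arrive.
class DisconnectHandler {
public:
    explicit DisconnectHandler(std::function<void()> restart) : restart_(std::move(restart)) {}
    ~DisconnectHandler() { join(); }

    // Call from the error callback; returns without waiting for the restart.
    void onError() {
        bool expected = false;
        if (!busy_.compare_exchange_strong(expected, true)) return;  // restart in progress
        join();  // reap the previous worker, which has already finished its restart
        worker_ = std::thread([this] {
            restart_();          // e.g. stop, close, and reopen the stream
            busy_.store(false);  // allow the next disconnect to be handled
        });
    }

    // Waits for the current worker, if any, to finish.
    void join() {
        if (worker_.joinable()) worker_.join();
    }

private:
    std::function<void()> restart_;
    std::atomic<bool> busy_{false};
    std::thread worker_;
};
```

A detached thread can outlive the data it uses; keeping it joinable trades a little bookkeeping for a clean shutdown path.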

Optimizing performance

You can optimize the performance of an audio app by adjusting its internal buffers and by using special high-priority threads.

Tuning buffers to minimize latency

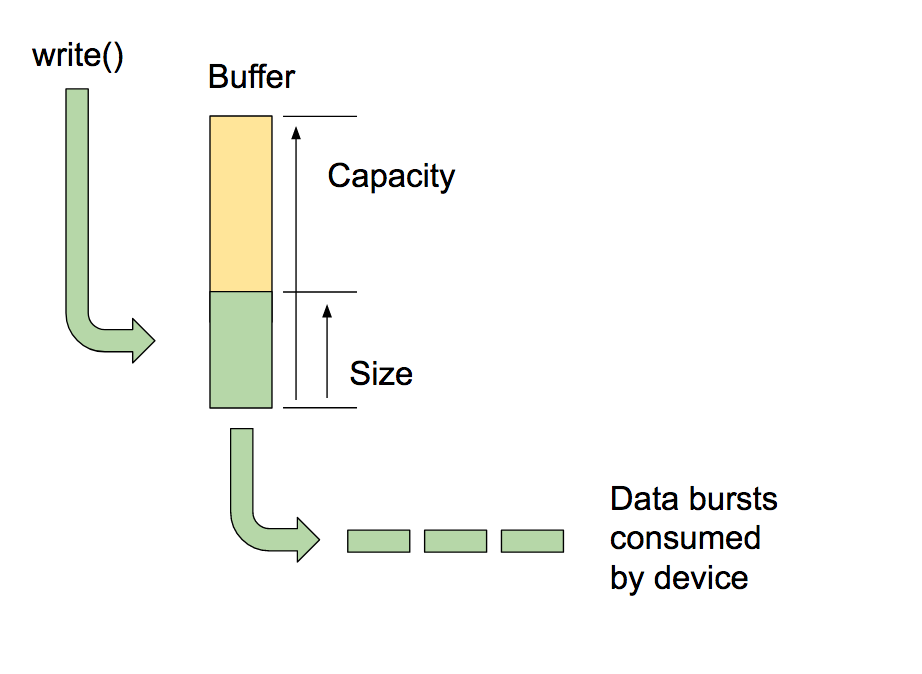

AAudio passes data in and out of internal buffers that it maintains, one for each audio device.

The buffer's capacity is the total amount of data the buffer can hold. You can call AAudioStreamBuilder_setBufferCapacityInFrames() to set the capacity. The method limits the capacity you can allocate to the maximum value that the device permits. Use AAudioStream_getBufferCapacityInFrames() to verify the actual capacity of the buffer.

An app doesn't have to use the entire capacity of a buffer. AAudio fills a buffer up to a size that you can set. The size of a buffer can be no larger than its capacity, and it is often smaller. By controlling the buffer size, you determine the number of bursts needed to fill it, and thus control latency. Use the methods AAudioStream_setBufferSizeInFrames() and AAudioStream_getBufferSizeInFrames() to work with the buffer size.

When an app plays audio, it writes to a buffer and blocks until the write is complete. AAudio reads from the buffer in discrete bursts. Each burst contains a whole number of audio frames and is usually smaller than the size of the buffer being read. The system controls the burst size and rate; these properties are typically dictated by the audio device's circuitry. Although you can't change the size of a burst or the burst rate, you can set the size of the internal buffer according to the number of bursts it contains. Generally, you get the lowest latency if your AAudioStream's buffer size is a multiple of the reported burst size.

One way to optimize the buffer size is to start with a large buffer and gradually lower it until underruns begin, then nudge it back up. Alternatively, you can start with a small buffer size and, if that produces underruns, increase the buffer size until the output flows cleanly again.

This process can happen very quickly, possibly before the user plays the first sound. You may want to perform the initial buffer sizing first, using silence, so that the user won't hear any audio glitches. System performance may change over time (for example, the user might turn off airplane mode). Because buffer tuning adds very little overhead, your app can do it continuously while it reads or writes data to a stream.

Here is an example of a buffer optimization loop:

    int32_t previousUnderrunCount = 0;
    int32_t framesPerBurst = AAudioStream_getFramesPerBurst(stream);
    int32_t bufferSize = AAudioStream_getBufferSizeInFrames(stream);
    int32_t bufferCapacity = AAudioStream_getBufferCapacityInFrames(stream);

    while (go) {  // "go" is an app-defined flag
        aaudio_result_t result = writeSomeData();  // app-defined write step
        if (result < 0) break;

        // Are we getting underruns?
        if (bufferSize < bufferCapacity) {
            int32_t underrunCount = AAudioStream_getXRunCount(stream);
            if (underrunCount > previousUnderrunCount) {
                previousUnderrunCount = underrunCount;
                // Try increasing the buffer size by one burst.
                bufferSize += framesPerBurst;
                // Returns the size actually set, or a negative error code.
                bufferSize = AAudioStream_setBufferSizeInFrames(stream, bufferSize);
            }
        }
    }
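The loop's decision logic can be separated from the AAudio calls so that it can be tested on its own. In this sketch, BufferSizeTuner is a hypothetical class: the caller passes in the cumulative count from AAudioStream_getXRunCount() and applies the returned size with AAudioStream_setBufferSizeInFrames(). It also reports the latency a full buffer adds, bufferSize / sampleRate.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical, AAudio-free version of the tuning decision in the loop above.
class BufferSizeTuner {
public:
    BufferSizeTuner(int32_t framesPerBurst, int32_t bufferSize, int32_t bufferCapacity)
        : framesPerBurst_(framesPerBurst), bufferSize_(bufferSize),
          bufferCapacity_(bufferCapacity) {}

    // xRunCount is the cumulative underrun count. Returns the buffer size to request:
    // one burst larger if new underruns appeared, clamped to the capacity.
    int32_t update(int32_t xRunCount) {
        if (xRunCount > previousXRunCount_ && bufferSize_ < bufferCapacity_) {
            bufferSize_ = std::min<int32_t>(bufferSize_ + framesPerBurst_, bufferCapacity_);
        }
        previousXRunCount_ = xRunCount;
        return bufferSize_;
    }

    // Latency, in milliseconds, added by a buffer filled to bufferSize_ frames.
    double latencyMillis(int32_t sampleRate) const {
        return 1000.0 * bufferSize_ / sampleRate;
    }

private:
    int32_t framesPerBurst_;
    int32_t bufferSize_;
    int32_t bufferCapacity_;
    int32_t previousXRunCount_ = 0;
};
```

With 192-frame bursts at 48 kHz, each step adds 4 ms; three bursts (576 frames) correspond to 12 ms.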

There is no benefit in using this technique to optimize the buffer size of an input stream. Input streams run as fast as possible, trying to keep the amount of buffered data to a minimum, and then fill up when the app is preempted.

Using a high-priority callback

If your app reads or writes audio data from an ordinary thread, it may be preempted or experience timing jitter. This can cause audio glitches. Using larger buffers might guard against such glitches, but a large buffer also introduces longer audio latency. For apps that require low latency, an audio stream can use an asynchronous callback function to transfer data to and from your app. AAudio executes the callback in a higher-priority thread that has better performance.

The callback function has this prototype:

    typedef aaudio_data_callback_result_t (*AAudioStream_dataCallback)(
            AAudioStream *stream,
            void *userData,
            void *audioData,
            int32_t numFrames);

Use the stream builder to register the callback:

AAudioStreamBuilder_setDataCallback(builder, myCallback, myUserData);

In the simplest case, the stream periodically executes the callback function to acquire the data for its next burst.

The callback function should not perform a read or write on the stream that invoked it. If the callback belongs to an input stream, your code should process the data supplied in the audioData buffer (specified as the third argument). If the callback belongs to an output stream, your code should place data into that buffer.

For example, you could use a callback to continuously generate a sine wave output like this:

    aaudio_data_callback_result_t myCallback(
            AAudioStream *stream,
            void *userData,
            void *audioData,
            int32_t numFrames) {
        // Write samples directly into the audioData array.
        // generateSineWave() is app-defined; the cast assumes a float stream.
        generateSineWave(static_cast<float *>(audioData), numFrames);
        return AAUDIO_CALLBACK_RESULT_CONTINUE;
    }
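generateSineWave() above is app-defined. A minimal sketch of one, assuming a float stream with interleaved channels, keeps its phase between callbacks so that consecutive bursts join without clicks. The SineGenerator class and its parameters are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical sine generator for a float stream with interleaved channels.
class SineGenerator {
public:
    SineGenerator(double frequencyHz, int32_t sampleRate, int32_t channelCount, float amplitude)
        : phaseIncrement_(2.0 * kPi * frequencyHz / sampleRate),
          channelCount_(channelCount), amplitude_(amplitude) {}

    // Fills numFrames frames, writing the same value to every channel of a frame.
    // The phase carries over to the next call, so bursts join seamlessly.
    void render(float *audioData, int32_t numFrames) {
        for (int32_t frame = 0; frame < numFrames; ++frame) {
            float sample = amplitude_ * static_cast<float>(std::sin(phase_));
            for (int32_t ch = 0; ch < channelCount_; ++ch) {
                audioData[frame * channelCount_ + ch] = sample;
            }
            phase_ += phaseIncrement_;
            if (phase_ >= 2.0 * kPi) phase_ -= 2.0 * kPi;  // keep the phase bounded
        }
    }

private:
    static constexpr double kPi = 3.14159265358979323846;
    double phase_ = 0.0;
    double phaseIncrement_;
    int32_t channelCount_;
    float amplitude_;
};
```

Inside the callback, userData could point at the generator, for example: static_cast<SineGenerator *>(userData)->render(static_cast<float *>(audioData), numFrames);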

It's possible to process more than one stream with AAudio. You can use one stream as the primary stream and pass pointers to the other streams in the user data. Register a callback for the primary stream, then use non-blocking I/O on the other streams. Here is an example of a round-trip callback that passes an input stream to an output stream. The primary calling stream is the output stream; the input stream is included in the user data.

The callback does a non-blocking read from the input stream, placing the data into the buffer of the output stream:

    aaudio_data_callback_result_t myCallback(
            AAudioStream *stream,
            void *userData,
            void *audioData,
            int32_t numFrames) {
        AAudioStream *inputStream = static_cast<AAudioStream *>(userData);
        int64_t timeout = 0;  // non-blocking read
        aaudio_result_t result =
                AAudioStream_read(inputStream, audioData, numFrames, timeout);

        if (result == numFrames)
            return AAUDIO_CALLBACK_RESULT_CONTINUE;
        if (result >= 0) {
            // Short read: pad the rest of the output buffer with silence.
            // sample_type and samplesPerFrame are app-defined placeholders.
            memset(static_cast<sample_type*>(audioData) + result * samplesPerFrame, 0,
                   sizeof(sample_type) * (numFrames - result) * samplesPerFrame);
            return AAUDIO_CALLBACK_RESULT_CONTINUE;
        }
        return AAUDIO_CALLBACK_RESULT_STOP;  // read error: stop the callbacks
    }

Note that this example assumes the input and output streams have the same number of channels, format, and sample rate. The streams' formats can differ, as long as the code handles the translations properly.
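As an example of such a translation, suppose the input stream delivers mono AAUDIO_FORMAT_PCM_I16 data while the output stream expects stereo AAUDIO_FORMAT_PCM_FLOAT. The hypothetical helper below converts one burst; a callback would first read into a preallocated int16_t scratch buffer and then call it. A sample-rate mismatch would additionally need resampling, which this sketch does not cover.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical translation step: mono int16 input -> interleaved stereo float output.
void monoI16ToStereoFloat(const int16_t *input, float *output, int32_t numFrames) {
    constexpr float kScale = 1.0f / 32768.0f;  // maps the int16 range onto [-1.0, +1.0)
    for (int32_t frame = 0; frame < numFrames; ++frame) {
        float sample = input[frame] * kScale;
        output[2 * frame] = sample;      // left channel
        output[2 * frame + 1] = sample;  // right channel
    }
}
```

Dividing by 32768 is a common convention for int16-to-float conversion; it maps -32768 exactly to -1.0.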

Setting the performance mode

Every AAudioStream has a performance mode, which has a large effect on your app's behavior. There are three modes:

- AAUDIO_PERFORMANCE_MODE_NONE is the default mode. It uses a basic stream that balances latency and power savings.

- AAUDIO_PERFORMANCE_MODE_LOW_LATENCY uses smaller buffers and an optimized data path for reduced latency.

- AAUDIO_PERFORMANCE_MODE_POWER_SAVING uses larger internal buffers and a data path that trades off latency for lower power consumption.

You can select the performance mode by calling AAudioStreamBuilder_setPerformanceMode(), and discover the current mode by calling AAudioStream_getPerformanceMode().

If low latency is more important than power savings in your app, use AAUDIO_PERFORMANCE_MODE_LOW_LATENCY.

This is useful for apps that are very interactive, such as games or keyboard synthesizers.

If saving power is more important than low latency in your app, use AAUDIO_PERFORMANCE_MODE_POWER_SAVING.

This is typical for apps that play back previously generated music, such as streaming audio players or MIDI file players.

For the lowest latency in the current release of AAudio, you must use the AAUDIO_PERFORMANCE_MODE_LOW_LATENCY performance mode together with a high-priority callback. Follow this example:

    // Create a stream builder
    AAudioStreamBuilder *streamBuilder;
    AAudio_createStreamBuilder(&streamBuilder);
    AAudioStreamBuilder_setDataCallback(streamBuilder, dataCallback, nullptr);
    AAudioStreamBuilder_setPerformanceMode(streamBuilder, AAUDIO_PERFORMANCE_MODE_LOW_LATENCY);

    // Use it to create the stream
    AAudioStream *stream;
    AAudioStreamBuilder_openStream(streamBuilder, &stream);

Thread safety

The AAudio API is not completely thread-safe. You cannot call some AAudio functions concurrently from more than one thread at a time. This is because AAudio avoids using mutexes, which can cause thread preemption and glitches.

To be safe, don't call AAudioStream_waitForStateChange(), and don't read or write to the same stream, from two different threads. Similarly, don't close a stream in one thread while reading or writing to it in another thread.

Calls that return stream settings, like AAudioStream_getSampleRate() and AAudioStream_getChannelCount(), are thread-safe.

These calls are also thread-safe:

- AAudio_convert*ToText()

- AAudio_createStreamBuilder()

- AAudioStream_get*(), except for AAudioStream_getTimestamp()

Known issues

- Audio latency is high for blocking write() because the Android O DP2 release doesn't use a FAST track. Use a callback to get lower latency.

Additional resources

To learn more, see the following resources: