Metrics are the main type of information extracted from your benchmarks. They are passed to the `measureRepeated` function as a `List`, which lets you specify multiple measured metrics at once. At least one type of metric is required for the benchmark to run.

The following code snippet captures frame timing and custom trace section metrics:

Kotlin

```kotlin
benchmarkRule.measureRepeated(
    packageName = TARGET_PACKAGE,
    metrics = listOf(
        FrameTimingMetric(),
        TraceSectionMetric("RV CreateView"),
        TraceSectionMetric("RV OnBindView"),
    ),
    iterations = 5,
    // ...
)
```

Java

```java
benchmarkRule.measureRepeated(
    TARGET_PACKAGE, // packageName
    Arrays.asList( // metrics
        new FrameTimingMetric(),
        new TraceSectionMetric("RV CreateView"),
        new TraceSectionMetric("RV OnBindView")
    ),
    5, // iterations
    // ...
);
```

In this example, `RV CreateView` and `RV OnBindView` are the IDs of traceable blocks that are defined in `RecyclerView`. The source code for the `createViewHolder()` method is an example of how you can define traceable blocks within your own code.

`StartupTimingMetric`, `TraceSectionMetric`, `FrameTimingMetric`, and `PowerMetric` are covered in detail later in this document.

For a full list of metrics, see the subclasses of `Metric`.

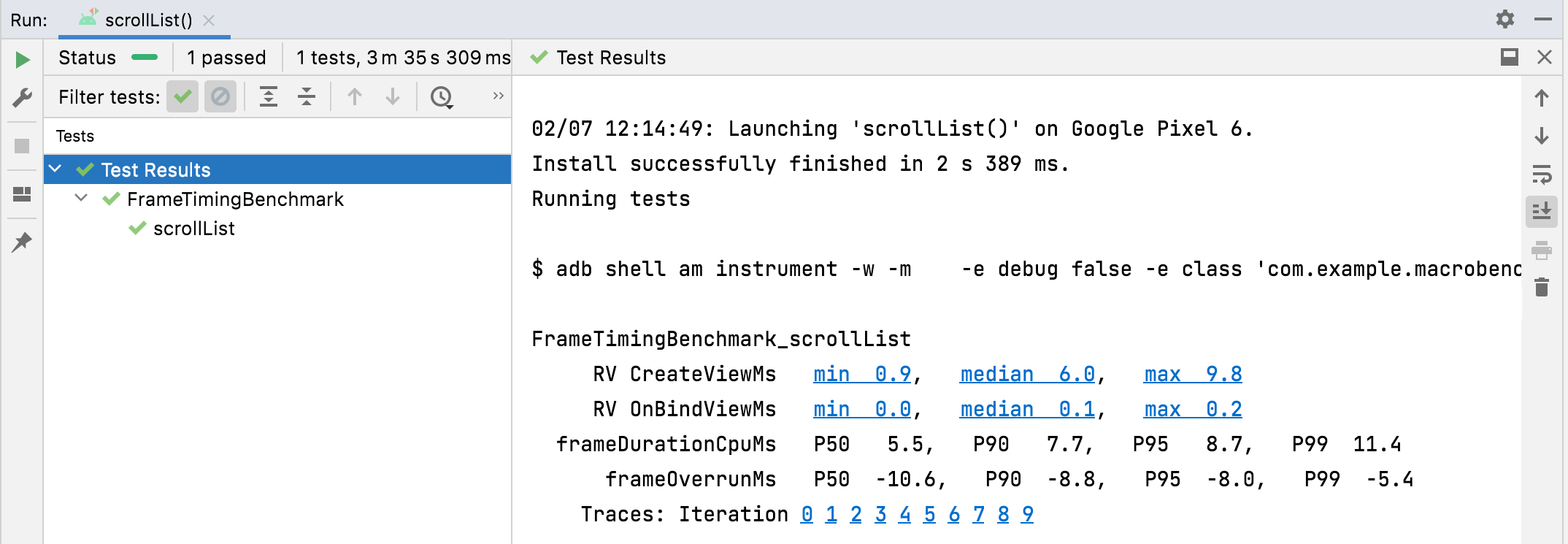

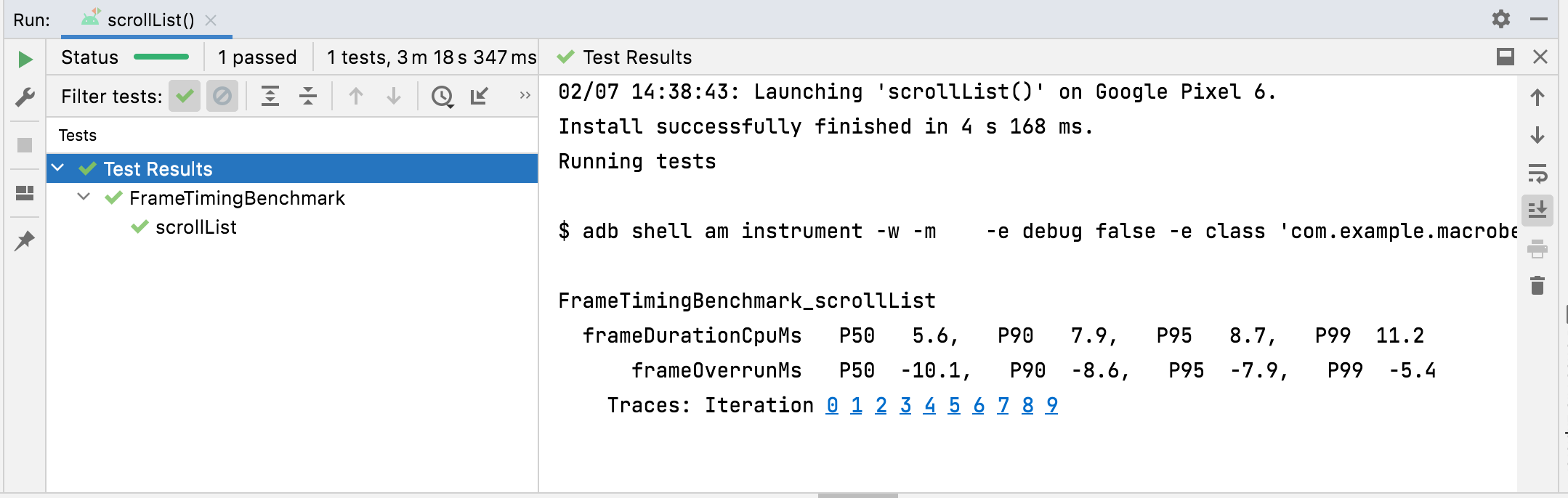

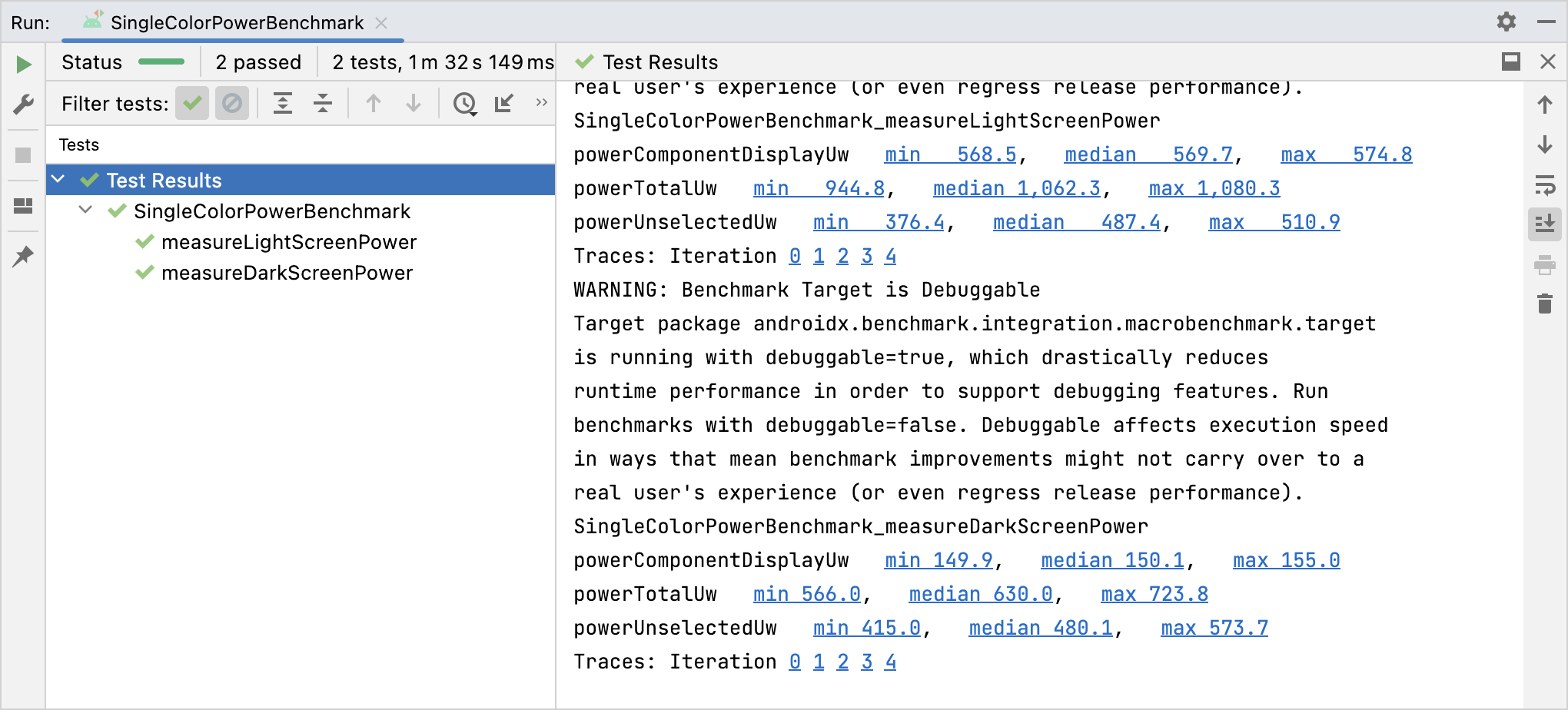

Benchmark results are output to Android Studio, as shown in figure 1. If multiple metrics are defined, all of them are combined in the output.

TraceSectionMetric and

FrameTimingMetric.StartupTimingMetric

StartupTimingMetric

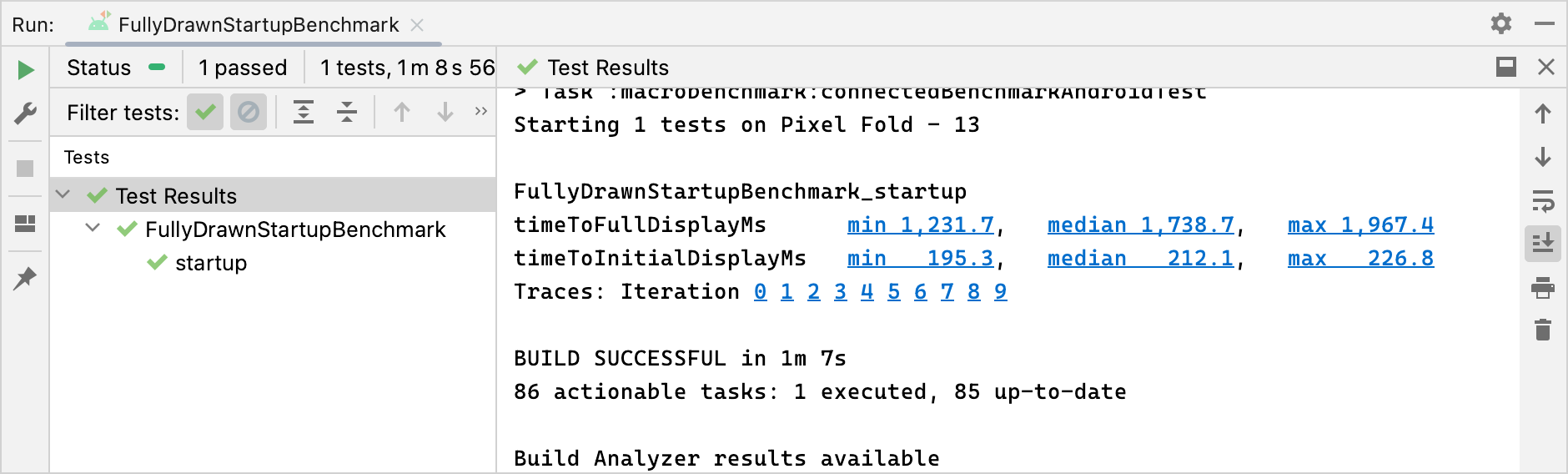

captures app startup timing metrics with the following values:

- `timeToInitialDisplayMs`: The amount of time from when the system receives a launch intent to when it renders the first frame of the destination `Activity`.
- `timeToFullDisplayMs`: The amount of time from when the system receives a launch intent to when the app reports fully drawn using the `reportFullyDrawn()` method. The measurement stops when the first frame that follows, or contains, the `reportFullyDrawn()` call finishes rendering. This measurement might not be available on Android 10 (API level 29) and earlier.
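The two definitions differ only in which frame ends the measurement. As a rough illustration, the following Java sketch computes both values from hypothetical timestamps in milliseconds. These timestamps mark when the launch intent was received, when each frame of the destination `Activity` started and finished rendering, and when `reportFullyDrawn()` was called. The `StartupTimes` class and its `Frame` type are hypothetical. They model the definitions above and are not how Macrobenchmark computes these values, which it derives from the captured system trace.

```java
import java.util.List;

// Hypothetical model of the two StartupTimingMetric values. All timestamps are in
// milliseconds, and frames are assumed to be sorted and non-overlapping.
final class StartupTimes {

    /** One rendered frame of the destination Activity. */
    static final class Frame {
        final double startMs;
        final double endMs;

        Frame(double startMs, double endMs) {
            this.startMs = startMs;
            this.endMs = endMs;
        }
    }

    /** Launch intent received -> first frame finishes rendering. */
    static double timeToInitialDisplayMs(double intentMs, List<Frame> frames) {
        if (frames.isEmpty()) {
            throw new IllegalArgumentException("No frames were rendered");
        }
        return frames.get(0).endMs - intentMs;
    }

    /**
     * Launch intent received -> the first frame that contains or follows the
     * reportFullyDrawn() call finishes rendering. For sorted frames, that is the
     * first frame whose end is at or after the call.
     */
    static double timeToFullDisplayMs(double intentMs, double fullyDrawnMs, List<Frame> frames) {
        for (Frame frame : frames) {
            if (frame.endMs >= fullyDrawnMs) {
                return frame.endMs - intentMs;
            }
        }
        throw new IllegalStateException("No frame was rendered after reportFullyDrawn()");
    }
}
```

Consider frames that end at 180, 216, and 420 ms after the intent. A `reportFullyDrawn()` call at 205 ms falls inside the second frame, so `timeToFullDisplayMs` returns 216 ms while `timeToInitialDisplayMs` returns 180 ms.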

`StartupTimingMetric` outputs the min, median, and max values from the startup iterations. To assess startup improvement, focus on median values, since they provide the best estimate of the typical startup time. For more information about what contributes to app startup time, see App startup time.
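To see why the median is the more useful number, the following Java sketch summarizes a set of iteration timings as min, median, and max, the same three values the output reports. The `IterationSummary` class is hypothetical. For an even number of iterations it averages the two middle values, which is an assumption; the library's exact convention may differ.

```java
import java.util.Arrays;

// Hypothetical sketch: summarize per-iteration startup times as min, median, and max.
final class IterationSummary {
    final double minMs;
    final double medianMs;
    final double maxMs;

    private IterationSummary(double minMs, double medianMs, double maxMs) {
        this.minMs = minMs;
        this.medianMs = medianMs;
        this.maxMs = maxMs;
    }

    static IterationSummary of(double[] iterationTimesMs) {
        if (iterationTimesMs.length == 0) {
            throw new IllegalArgumentException("At least one iteration is required");
        }
        double[] sorted = iterationTimesMs.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int n = sorted.length;
        // Odd count: the middle value. Even count (assumed convention): mean of the two middle values.
        double median = (n % 2 == 1)
                ? sorted[n / 2]
                : (sorted[n / 2 - 1] + sorted[n / 2]) / 2.0;
        return new IterationSummary(sorted[0], median, sorted[n - 1]);
    }
}
```

Take five made-up iterations of 412, 398, 1210, 405, and 401 ms. The summary is min 398, median 405, and max 1210 ms. The single slow outlier moves the max, and would pull a mean up to 565.2 ms, but leaves the median where most runs landed.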

`StartupTimingMetric` results.

FrameTimingMetric

`FrameTimingMetric` captures timing information from frames produced by a benchmark, such as a scroll or an animation, and outputs the following values:

- `frameOverrunMs`: how much time a given frame misses its deadline by. Positive numbers indicate a dropped frame and visible jank or stutter. Negative numbers indicate how far ahead of its deadline a frame finished. Note: This is available only on Android 12 (API level 31) and higher.
- `frameDurationCpuMs`: the amount of time the frame takes to be produced on the CPU on both the UI thread and the `RenderThread`.

These measurements are reported as a distribution at the 50th, 90th, 95th, and 99th percentiles.
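The following Java sketch shows how to read these values. A frame's overrun is its end time minus its deadline, so positive values are missed deadlines. The per-frame values are then summarized at the four percentiles listed above. The `FrameStats` class is hypothetical, and it assumes that each frame's deadline is known. The nearest-rank percentile method is also an assumption, not the library's documented algorithm.

```java
import java.util.Arrays;

// Hypothetical helpers for interpreting FrameTimingMetric-style values.
final class FrameStats {

    /** Positive: the frame missed its deadline (a dropped frame). Negative: it finished early. */
    static double overrunMs(double frameEndMs, double deadlineMs) {
        return frameEndMs - deadlineMs;
    }

    /** Counts frames with a positive overrun, that is, frames that caused visible jank. */
    static int droppedFrames(double[] overrunsMs) {
        int dropped = 0;
        for (double overrun : overrunsMs) {
            if (overrun > 0) {
                dropped++;
            }
        }
        return dropped;
    }

    /** Nearest-rank percentile: the smallest sample with at least p% of samples at or below it. */
    static double percentile(double[] samples, int p) {
        if (samples.length == 0 || p < 1 || p > 100) {
            throw new IllegalArgumentException("Need samples and 1 <= p <= 100");
        }
        double[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (p * sorted.length + 99) / 100; // ceil(p * n / 100) in integer math, 1-based
        return sorted[rank - 1];
    }

    /** P50, P90, P95, and P99 of a set of per-frame values. */
    static double[] distribution(double[] samples) {
        return new double[] {
            percentile(samples, 50),
            percentile(samples, 90),
            percentile(samples, 95),
            percentile(samples, 99),
        };
    }
}
```

For 20 frames whose durations are 1 through 20 ms, `distribution` returns 10, 18, 19, and 20 ms. With so few frames, P95 and P99 are each decided by a single frame, so longer runs give steadier tail percentiles.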

For more information on how to identify and improve slow frames, see Slow rendering.

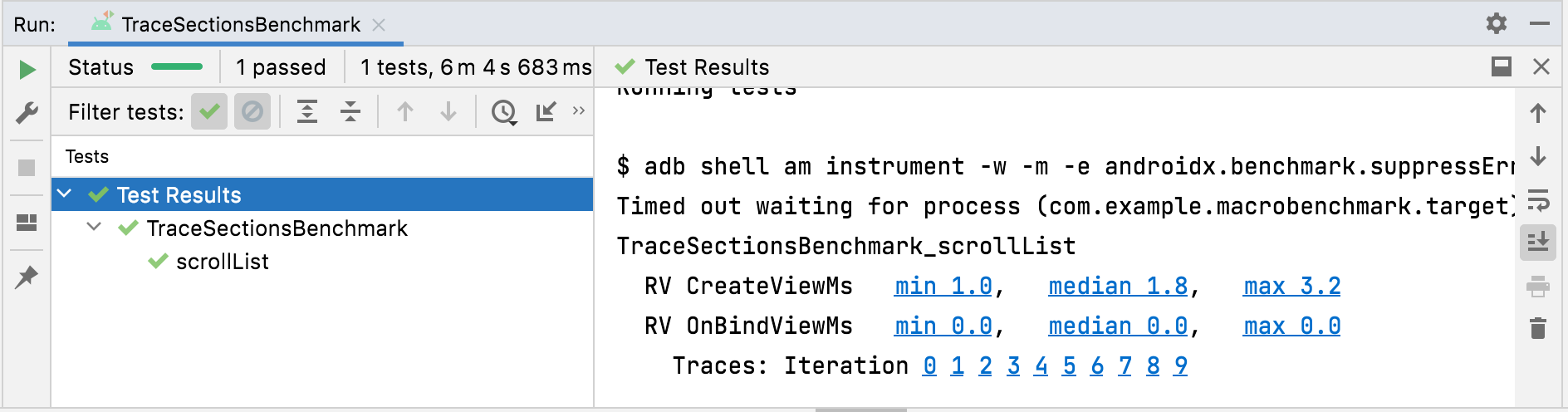

`FrameTimingMetric` results.

TraceSectionMetric

`TraceSectionMetric` captures the number of times a trace section matching the provided `sectionName` occurs and the amount of time it takes. For the time, it outputs the minimum, median, and maximum times in milliseconds. The trace section is defined either by the function call `trace(sectionName)`, by the code between `Trace.beginSection(sectionName)` and `Trace.endSection()`, or by their async variants. It always selects the first instance of a trace section captured during a measurement. By default, it only outputs trace sections from your package; to include processes outside your package, set `targetPackageOnly = false`.
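The counting, first-instance, and package-filtering rules can be modeled with a short Java sketch over the trace slices from one measurement. The `TraceSections` class and its `Slice` type are hypothetical. They match section names exactly and identify a slice's owner by a package-name string, which simplifies how the library actually queries the captured trace.

```java
import java.util.List;
import java.util.OptionalDouble;

// Hypothetical model of TraceSectionMetric's rules for a single measurement.
final class TraceSections {

    /** One trace section: its name, the package that emitted it, and its time span in ms. */
    static final class Slice {
        final String name;
        final String packageName;
        final double startMs;
        final double endMs;

        Slice(String name, String packageName, double startMs, double endMs) {
            this.name = name;
            this.packageName = packageName;
            this.startMs = startMs;
            this.endMs = endMs;
        }
    }

    private static boolean matches(Slice slice, String sectionName,
                                   String targetPackage, boolean targetPackageOnly) {
        boolean inScope = !targetPackageOnly || slice.packageName.equals(targetPackage);
        return inScope && slice.name.equals(sectionName);
    }

    /** Number of times the section occurs. */
    static int count(List<Slice> slices, String sectionName,
                     String targetPackage, boolean targetPackageOnly) {
        int count = 0;
        for (Slice slice : slices) {
            if (matches(slice, sectionName, targetPackage, targetPackageOnly)) {
                count++;
            }
        }
        return count;
    }

    /** Duration of the first (earliest-starting) matching section, if there is one. */
    static OptionalDouble firstDurationMs(List<Slice> slices, String sectionName,
                                          String targetPackage, boolean targetPackageOnly) {
        Slice first = null;
        for (Slice slice : slices) {
            if (matches(slice, sectionName, targetPackage, targetPackageOnly)
                    && (first == null || slice.startMs < first.startMs)) {
                first = slice;
            }
        }
        return first == null
                ? OptionalDouble.empty()
                : OptionalDouble.of(first.endMs - first.startMs);
    }
}
```

In this model, passing `targetPackageOnly = false` lets a matching slice from another package be counted. If that slice starts earliest, it also becomes the first instance.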

For more information about tracing, see Overview of system tracing and Define custom events.

`TraceSectionMetric` results.

PowerMetric

`PowerMetric` captures the change in power or energy over the duration of your test for the provided power categories.

Each selected category is broken down into its measurable subcomponents, and unselected categories are added to the "unselected" metric.
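The following Java sketch applies that grouping rule to hypothetical per-subcomponent readings. Every subcomponent of a selected category is reported on its own, and all readings from unselected categories are summed into one "unselected" value. The `PowerBreakdown` class, the `CATEGORY/subcomponent` key format, and the subcomponent names are hypothetical. Only the grouping rule comes from the description above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of how selected and unselected power categories are reported.
final class PowerBreakdown {

    /**
     * @param readings map of category -> (subcomponent -> reading)
     * @param selected categories whose subcomponents should be reported individually
     * @return one entry per selected subcomponent, plus a single "unselected" total
     */
    static Map<String, Double> breakdown(Map<String, Map<String, Double>> readings,
                                         Set<String> selected) {
        Map<String, Double> result = new LinkedHashMap<>();
        double unselected = 0;
        for (Map.Entry<String, Map<String, Double>> category : readings.entrySet()) {
            for (Map.Entry<String, Double> part : category.getValue().entrySet()) {
                if (selected.contains(category.getKey())) {
                    result.put(category.getKey() + "/" + part.getKey(), part.getValue());
                } else {
                    unselected += part.getValue();
                }
            }
        }
        result.put("unselected", unselected);
        return result;
    }
}
```

Suppose there are readings for `CPU`, `DISPLAY`, and `GPU`, and only `CPU` is selected. The result then holds one entry per CPU subcomponent, plus an `unselected` entry equal to the `DISPLAY` and `GPU` readings combined.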

These metrics measure system-wide consumption, not the consumption on a per-app basis, and are limited to Pixel 6, Pixel 6 Pro, and later devices:

- `power<category>Uw`: the average power consumed in this category over the duration of your test, in microwatts.
- `energy<category>Uws`: the total energy consumed in this category over the duration of your test, in microwatt-seconds.
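The two units are linked by the test duration: energy in microwatt-seconds equals average power in microwatts multiplied by elapsed seconds. The following minimal Java sketch converts between them. It assumes that the power value is the average over the whole test, and the `PowerUnits` class is hypothetical.

```java
// Hypothetical helpers relating power<category>Uw and energy<category>Uws values.
final class PowerUnits {

    /** Average power (microwatts) from total energy (microwatt-seconds) over a duration. */
    static double averagePowerUw(double energyUws, long durationMs) {
        if (durationMs <= 0) {
            throw new IllegalArgumentException("Duration must be positive");
        }
        return energyUws / (durationMs / 1000.0);
    }

    /** Total energy (microwatt-seconds) from average power (microwatts) over a duration. */
    static double energyUws(double averagePowerUw, long durationMs) {
        return averagePowerUw * (durationMs / 1000.0);
    }
}
```

For example, 2,000,000 µWs consumed over a 10-second test corresponds to an average of 200,000 µW, or 0.2 W.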

Categories include the following:

- `CPU`
- `DISPLAY`
- `GPU`
- `GPS`
- `MEMORY`
- `MACHINE_LEARNING`
- `NETWORK`
- `UNCATEGORIZED`

With some categories, like `CPU`, it might be difficult to separate work done by other processes from work done by your own app. To minimize the interference, remove or restrict unnecessary apps and accounts.

`PowerMetric` results.
