You can configure the behavior of Microbenchmark with the following instrumentation arguments. Add them to your Gradle configuration, or pass them directly when you run instrumentation from the command line. To set these arguments for all test runs in Android Studio and on the command line, add them to testInstrumentationRunnerArguments:

    android {
        defaultConfig {
            // ...
            testInstrumentationRunnerArguments["androidx.benchmark.dryRunMode.enable"] = "true"
        }
    }

אפשר גם להגדיר ארגומנטים של אינסטרומנטציה כשמריצים את בדיקות ההשוואה מ-Android Studio. כדי לשנות את הארגומנטים:

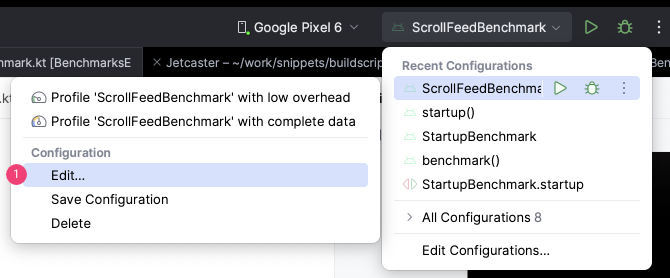

- לוחצים על עריכה ובוחרים את הגדרת ההרצה שרוצים לערוך.

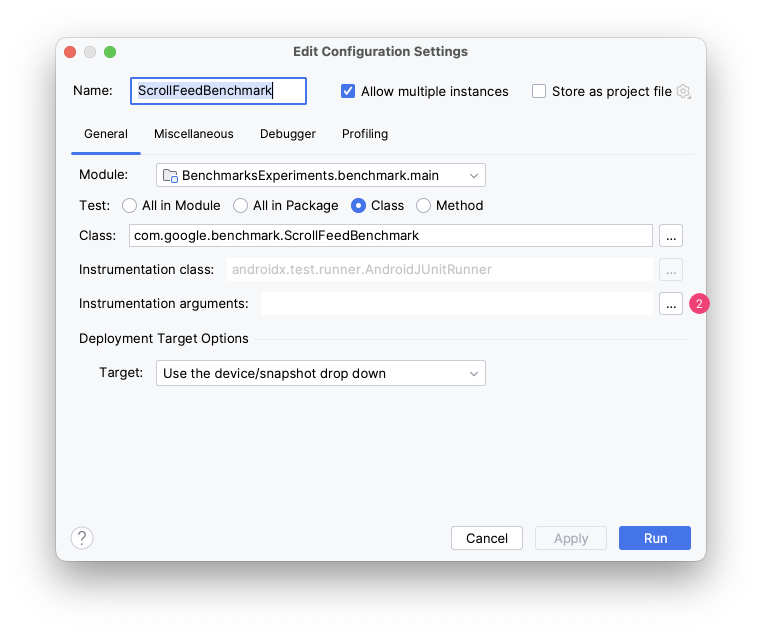

איור 1. עורכים את הגדרות ההרצה. - כדי לערוך את הארגומנטים של האינסטרומנטציה, לוחצים על לצד השדה Instrumentation arguments.

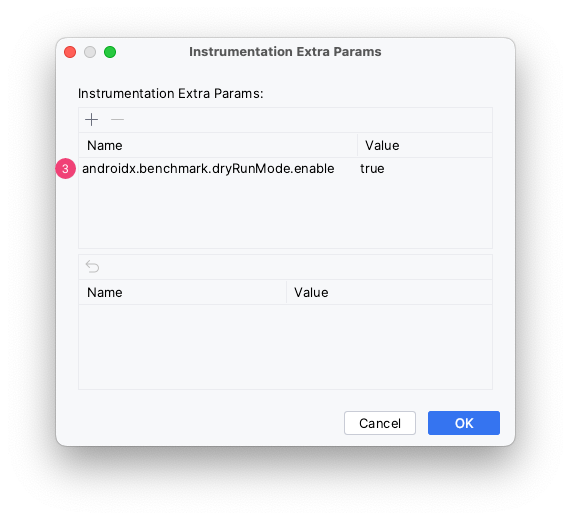

איור 2. עורכים את ארגומנט האינסטרומנטציה. - לוחצים על ומוסיפים את הארגומנט הנדרש.

איור 3. מוסיפים את ארגומנט האינסטרומנטציה.

If you run the benchmark from the command line, use -P android.testInstrumentationRunnerArguments.[name of the argument]:

    ./gradlew :benchmark:connectedAndroidTest -P android.testInstrumentationRunnerArguments.androidx.benchmark.profiling.mode=StackSampling

If you invoke the am instrument command directly (which might be the case in CI testing environments), pass the argument to am instrument with -e:

    adb shell am instrument -e androidx.benchmark.profiling.mode StackSampling -w com.example.macrobenchmark/androidx.benchmark.junit4.AndroidBenchmarkRunner

For more information about configuring benchmarks in CI, see Benchmarking in CI.
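When several arguments are passed to am instrument, each one needs its own -e key value pair. The following is a minimal sketch of a hypothetical shell helper, am_extras, that expands key=value pairs into that form. The helper name is an assumption made for illustration and is not part of adb or the benchmark library. The script only prints the resulting command so that it can be reviewed before it is run.

```shell
#!/bin/sh
# Hypothetical helper (not part of adb or androidx.benchmark):
# expands each key=value pair into an "-e key value" option.
am_extras() {
  out=""
  for pair in "$@"; do
    key=${pair%%=*}     # text before the first "="
    value=${pair#*=}    # text after the first "="
    out="$out -e $key $value"
  done
  printf '%s' "${out# }"   # drop the leading space
}

extras=$(am_extras \
  androidx.benchmark.profiling.mode=StackSampling \
  androidx.benchmark.iterations=10)

# Print the command instead of running it.
echo "adb shell am instrument $extras -w com.example.macrobenchmark/androidx.benchmark.junit4.AndroidBenchmarkRunner"
```

The package and runner names are the ones used in the command above; replace them with the values for your own test APK.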

androidx.benchmark.cpuEventCounter.enable (experimental)

Counts the CPU events specified in androidx.benchmark.cpuEventCounter.events.

Requires root access.

- Argument type: boolean

- Default value: false

androidx.benchmark.cpuEventCounter.events (experimental)

Specifies which types of CPU events to count. To use this argument, you must set androidx.benchmark.cpuEventCounter.enable to true.

- Argument type: comma-separated list of strings

- Available options:

Instructions, CPUCycles, L1DReferences, L1DMisses, BranchInstructions, BranchMisses, L1IReferences, L1IMisses

- Default value:

Instructions,CpuCycles,BranchMisses
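Because the events argument has an effect only when the counter is enabled, the two arguments are typically passed together. The following sketch builds such a Gradle invocation. It assumes the :benchmark module from the earlier command and a device with root access, and it uses the attached -Pname=value form of the Gradle property flag. The script prints the command rather than running it.

```shell
#!/bin/sh
# Prefix that Gradle uses for instrumentation arguments.
prefix="android.testInstrumentationRunnerArguments"

# Events to count; the names are taken from the list of available options.
events="Instructions,L1DMisses,BranchMisses"

cmd="./gradlew :benchmark:connectedAndroidTest"
cmd="$cmd -P$prefix.androidx.benchmark.cpuEventCounter.enable=true"
cmd="$cmd -P$prefix.androidx.benchmark.cpuEventCounter.events=$events"

# Print the command for review; it is meant to be run against a rooted device.
echo "$cmd"
```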

androidx.benchmark.dryRunMode.enable

Lets you run benchmarks in a single loop to verify that they work properly.

This means:

- Configuration errors aren't enforced (for example, to make it easier to run with regular correctness tests on emulators)

- The benchmark runs only a single loop, with no warmup

- Measurements and traces aren't captured, to reduce runtime

This mode prioritizes test throughput and validation of the benchmark logic over build and measurement correctness.

- Argument type: boolean

- Default value:

false

androidx.benchmark.iterations

Sets the number of measurements. This value doesn't directly set the number of loops executed, because each measurement typically runs many loops, and that number is set dynamically based on the runtime observed during warmup.

- Argument type: integer

- Default value:

50

androidx.benchmark.killExistingPerfettoRecordings

By default, Microbenchmark stops existing Perfetto (system trace) recordings when it starts a new trace, to reduce interference. To disable this behavior, pass false.

- Argument type: boolean

- Default value:

true

androidx.benchmark.output.enable

Enables writing the resulting JSON file to external storage.

- Argument type: boolean

- Default value:

true

androidx.benchmark.profiling.mode

Allows capturing trace files while the benchmarks run. See Profile a Microbenchmark for the available options.

Note: Some Android OS versions don't support method tracing without affecting the measurements that follow. To prevent this, Microbenchmark throws an exception, so use the default argument to capture method traces only when it is safe. See issue #316174880.

- Argument type: string

- Available options:

MethodTracing, StackSampling, None

- Default value: a safe version of MethodTracing that captures a method trace only if the device can do so without affecting measurements.

androidx.benchmark.suppressErrors

Accepts a comma-separated list of errors to turn into warnings.

- Argument type: list of strings

- Available options:

DEBUGGABLE, LOW-BATTERY, EMULATOR, CODE-COVERAGE, UNLOCKED, SIMPLEPERF, ACTIVITY-MISSING

- Default value: empty list
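As an illustration of the comma-separated format, the following sketch joins two of the error names listed above and prints a Gradle command that passes them. The join_by_comma helper is hypothetical, and the :benchmark module name is taken from the earlier command. The choice of EMULATOR and LOW-BATTERY is only an example.

```shell
#!/bin/sh
# Hypothetical helper: joins its arguments with commas.
join_by_comma() {
  old_ifs=$IFS
  IFS=,
  printf '%s' "$*"   # "$*" joins the arguments with the first character of IFS
  IFS=$old_ifs
}

suppressed=$(join_by_comma EMULATOR LOW-BATTERY)

# Print the command instead of running it.
echo "./gradlew :benchmark:connectedAndroidTest -Pandroid.testInstrumentationRunnerArguments.androidx.benchmark.suppressErrors=$suppressed"
```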

additionalTestOutputDir

Sets the location on the device where JSON benchmark reports and profiling results are saved.

- Argument type: file path string

- Default value: the external directory of the test APK
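A possible workflow is to point the output at a known directory and then copy the results to the host. The sketch below prints the two commands involved. The device path is a hypothetical example and is assumed to be writable by the test APK; the package and runner names are the ones from the earlier am instrument command.

```shell
#!/bin/sh
# Hypothetical device directory; assumed to be writable by the test APK.
out_dir="/sdcard/Download/benchmark-output"
runner="com.example.macrobenchmark/androidx.benchmark.junit4.AndroidBenchmarkRunner"

# Run the benchmarks and write reports to the chosen directory.
run_cmd="adb shell am instrument -e additionalTestOutputDir $out_dir -w $runner"

# Copy the reports from the device to the current directory on the host.
pull_cmd="adb pull $out_dir ./benchmark-output"

# Print the commands for review instead of running them.
echo "$run_cmd"
echo "$pull_cmd"
```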

listener

Benchmark results might be inconsistent if unrelated background work runs while the benchmark is running.

To disable background work during benchmarking, set the listener instrumentation argument to androidx.benchmark.junit4.SideEffectRunListener.

- Argument type: string

- Available options:

androidx.benchmark.junit4.SideEffectRunListener

- Default value: not specified

Recommended for you

- Benchmark instrumentation arguments

- Profile a Microbenchmark

- Create Baseline Profiles