Android Developer Challenge Winners

Eskke

Developer: David Kathoh

Location: Goma, Democratic Republic of Congo

UnoDogs

Developer: Chinmay Mishra

Location: New Delhi, India

AgriFarm

Developer: Mirwise Khan

Location: Balochistan, Pakistan

Stila

Developer: Yingding Wang

Location: Munich, Germany

Snore and Cough

Developer: Ethan Fan

Location: Mountain View, CA, USA

Leepi

Developer: Prince Patel

Location: Bengaluru, India

MixPose

Developer: Peter Ma

Location: San Francisco, CA, USA

Path Finder

Developer: Colin Shelton

Location: Addison, Texas, USA

Trashly

Developer: Elvin Rakhmankulov

Location: Chicago, Illinois, USA

AgroDoc

Developer: Navneet Krishna

Location: Kochi, India

Building the Future With Helpful Innovation

Words by Luke Dormehl

Illustration by Hannah Perry

"Breakthroughs in machine learning are already making our daily lives easier and richer."

An unblinking red eye stares back at its user. The system speaks in a cold, unemotional voice. “I’m sorry, Dave,” it says. “I’m afraid I can’t do that.” In this iconic scene from 2001: A Space Odyssey, the protagonist faces off against the world’s least helpful assistant, HAL 9000. In the name of self-preservation, the AI system overrides its orders, leading to the crew’s demise. This isn’t the future anyone wants to see. People want computers that assist humanity, not replace or impede it. They hope for a utopian, not dystopian, world in which technology helps find solutions, not more problems. Technology today is more powerful than ever – so the way we build and use it is just as important as how it works. By putting humanity front and center, we can build technology that changes our future for the better.

Not that we have to wait until then, of course. Numerous assistive technologies powered by breakthroughs in machine learning are already making our daily lives easier and richer. Self-driving cars that have the potential to reduce congestion, pollution, and traffic accidents are beginning to surface on the horizon, while other technologies, like ML-aided translation tools, medical diagnostic software, and context-aware devices, are already part of many people’s daily routines. Features including Smart Compose in Gmail, which makes suggestions as users type messages; Live Transcribe in Android, which can help the deaf or hard-of-hearing receive instant speech-to-text captions in over 70 languages and dialects; and the ever-supportive Google Assistant, which helps millions of people stay on top of their daily schedules, showcase Google’s vision for creating a better future with technology.

Nowhere is this idea of helpful innovation more important than on mobile devices. Since Android launched in 2008, it has become the world’s most popular mobile platform. Advances in image recognition with machine learning mean that users can point their smartphone camera at text and have it live-translated into 88 different languages through Google Translate. And with mobile phones becoming the device of choice around the globe, especially in fast-growing markets in the developing world, it’s crucial that new tools are built with human-centered applications in mind. Helpful innovation has the potential to change the way we access, use, and interpret information, making it available when we need it, where we need it most.

This means forecasting floods and delivering warnings directly to those affected. Or even snapping a quick photo of an item, like a coffee cup, and getting directions to a nearby recycling point. Developing new technologies is not a straightforward path. It relies on advances in hardware, new discoveries in software, and the developers who build these new experiences. By focusing on “Helpful Innovation,” the Android Developer Challenge sets real-world examples of machine learning into action for users and inspires the next wave of developers to unlock what’s possible with this new technology.

Designing a Human-Centered Experience

Words by Christopher Katsaros

Interview by Joanna Goodman

Illustration by Ori Toor

If you’re just graduating college, a mouse is that annoying creature that sometimes creeps around your dorm at night. You’ve only ever used a computer mouse a few times, and it’s been connected to an out-of-date system in the basement of your school’s library. And yet, if you can believe it, the computer mouse was a radical step towards personalizing computing, making computers easier to interact with.

At a time when the founder of Digital Equipment Corporation, a major American computer producer from the 1960s to the 1990s, said “there is no reason anyone would want a computer in their home,” Steve Jobs pioneered the graphical user interface – with the mouse at its heart – ushering in personal computing and a new era in design. Since then, computing design has gone through two more important revolutions, each one a step change towards making our relationship with devices feel closer, more personal, and more human.

Android and iOS ushered in the first important change, with the wave of mobile. At first, mobile meant less: smaller screens, less power, fewer features. But developers quickly realized it was more. Swipe, tap, touch, poke – the lingo of mobile – made it more by thinking beyond the screen; no longer is our canvas the borders of a monitor, now there are layers upon layers of information, ready and waiting to serve you. And with the context of location, identity, and movement, the human experiences unlocked through mobile dwarfed those that desktop could ever provide. Machine learning, the next important change, has brought the evolution towards human-centered design sharply into focus.

If it was bold to suggest that computers could ever reside in your home, it is surely bolder that you could have a conversation with them. Or even point your camera at a beautiful flower, use Google Lens to identify what type of flower that is, and then set a reminder to order a bouquet for Mom. “Google Lens is able to use computer vision models to expand and speed up search,” says Jess Holbrook, a Senior Staff User Researcher at Google and Co-Lead of the People + AI Research team. “You don’t always need the camera to search, but it is useful – if you forget the name of something or have to stop and type a long description. It’s so much quicker to use your camera.”

Solving human problems

Words by Luke Dormehl

Illustration by Manshen Lo

Yossi Matias is Vice President of Engineering at Google, founding Managing Director of Google's R&D Center in Israel, and Co-Lead of AI for Social Good.

As a prominent thought leader for all things AI, Matias speaks about the potential of on-device machine learning, smart environments, and using AI for the good of humanity.

Q. What drives your work and interest in AI?

A. I’m interested in developing technology and using it to solve hard problems in ways that make an impact. Projects I’m working on include conversational AI efforts such as Google Duplex, an automated system that uses natural-sounding voices to carry out tasks over the phone like making restaurant reservations; Read It, which enables Google Assistant to read web articles out loud from your phone; and on-device technologies such as Call Screen and Live Caption. I’m also very interested in the general use of AI for social good. Examples of this include carrying out better flood forecasting using machine learning, cloud computing, hydraulic simulations, and other technologies.

Q. How was the AI for Social Good initiative formed?

A. One beautiful attribute that I see in the culture of Google is that many people care about finding ways to solve important problems using technology. AI for social good can take place in many areas: health, biodiversity, helping with accessibility, crisis response, sustainability, and so on. A few of us at Google got together and identified problems, which, if we’re able to help solve them, could really benefit people’s lives and society in a substantial way. So, we created AI for Social Good to support anyone – inside or outside of Google – working on initiatives related to social good. Today’s machine learning technologies, which are available through the cloud, make it possible for many around the world to get the tools to identify, and potentially solve, real societal issues. That’s unparalleled in history.

Q. What roles can on-device technologies play?

A. Today’s mobile devices are increasingly powerful. This gives us the opportunity to leverage machine learning techniques that can run on-device. This is important for several reasons, like being able to access certain applications instantly and not be dependent on connectivity. It’s also important in situations when you might be dealing with personal data, where you don’t want anything to leave your device. Call Screen, Live Caption and Live Relay are examples of how using conversational AI on-device can help people have better control over their incoming calls, let people who have hearing difficulties see live captions of what’s being said, and even have phone conversations.

Q. Why is ambient intelligence such a game-changer?

A. The power of helpful technology emerges when it is so embedded in our environment that it just works without us having to pay attention to it. Many technologies initially surprise us, but soon we take them for granted. Conversational AI removes barriers of modality and languages, and allows for better interaction. By getting machines to understand us better and speak to us in a natural way – practically becoming ambient – users can remove the cognitive load of having to explicitly ask for something to be done and interact more naturally.

Q. Why is it so vital that machine learning tools are made accessible?

A. The Android Developer Challenge shows the importance of opening up the cloud and on-device technology. We love seeing innovations coming from everywhere and everyone. We want to be able to encourage, support, inspire, and advise wherever we can. I'm really excited about what I've seen from those who participated in this program. If we can help them scale their passion globally, and leverage cutting-edge technologies, we're going to see amazing and innovative results, which can absolutely be helpful to people.

TensorFlow Lite: The Foundation Layer

Words by Luke Dormehl

Illustration by Sarah Maxwell

How do you take advantage of revolutionary machine learning tools and capabilities on a mobile device? The answer is TensorFlow Lite. This powerful machine learning framework can help run machine learning models on Android and iOS devices that would never normally be able to support them. Today, TensorFlow Lite is active on billions of devices globally. And its set of tools can be used for all kinds of powerful neural network-related applications, from image detection to speech recognition, bringing the latest cutting-edge technology to the devices we carry around with us wherever we go.

TensorFlow Lite allows much of the machine learning processing to be carried out on the devices themselves, using less computationally intensive models, which don’t have to depend on a server or data center. These models run faster, offer potential privacy improvements, demand less power (connectivity can be a battery hog), and crucially, in some cases, don’t require an internet connection. On Android, TensorFlow Lite accesses specialist mobile accelerators through the Neural Networks API, providing even better performance while reducing power usage.

“TensorFlow Lite enables use cases which weren’t possible before because the round-trip latencies to the server made those applications a non-starter,” says Sarah Sirajuddin, Engineering Director for TensorFlow Lite. “Examples of this are on-device speech recognition, real-time video interactive features, and real-time enhancements at the time of photo capture.” “Innovation in this space has been tremendous and there’s more to come,” she continues. “The other exciting aspect of this is that it makes machine learning easier, helping empower creativity and talent.”

Words by Luke Dormehl

Illustration by Sarah Maxwell

ML Kit brings Google’s on-device machine learning technologies to mobile app developers, so they can build customized and interactive experiences into their apps. This includes tools such as language translation, text recognition, object detection and more. ML Kit makes it possible to identify, analyze, and to some extent, understand visual and text data in real time, and in a privacy-centric way, since data stays on the device. “It makes machine learning much more approachable,” says Brahim Elbouchikhi, Director of Product Management.

“We make Google’s best-in-class ML models available as a simple set of tools, so developers no longer need to be ML experts to create apps powered by ML. All the complexity is hidden, so developers can focus on their core product.” For example, tools like Language ID help you identify the language of a string of text, and Object Detection and Tracking can help localize and track in real time one or more objects in an image or live camera feed.

It's what helps winning apps in the Android Developer Challenge like Trashly differentiate between recyclable and non-recyclable materials, or UnoDogs differentiate between healthy and unhealthy dogs. And what about the future? The goal is for the technology to disappear into the background and for devices to better understand our needs, says Elbouchikhi. “ML Kit helps us deliver on that promise, enabling developers to create experiences that are intuitive and adaptive, in ways that promote user privacy and trust.”

Words by Joanna Goodman

Illustration by Tor Brandt

Eskke

Developers: David Mumbere Kathoh, Nicole Mbambu Musimbi

Location: Goma, Democratic Republic of Congo

Today, over 400 million people use a service called mobile money on a daily basis, which lets you send money, pay utility bills, or withdraw cash from mobile money kiosks using USSD – those quick codes you send on your phone. While mobile money is used by people throughout the world, it’s particularly useful for people in a country like the Democratic Republic of Congo (DRC), where 46% of the population live in rural areas without traditional banks or stable internet access. Unfortunately, the process is time-consuming and hard to use, especially for people who have difficulties reading and working with numbers. One wrong move and they have to begin again.

Or if a wrong code is used, money can be sent to the wrong person. Eskke streamlines the experience, making it more intuitive and accessible. Within the app, users can even read and track their live transactions. They can also transfer money, pay bills, and buy subscriptions and essential airtime for sending SMS, using data, and making calls. While most mobile banking services require users to manually input their mobile phone’s USSD code, Eskke’s Quick Withdraw function processes this information automatically.

Tools such as offline text recognition and barcode scanning from ML Kit make it possible for users to simply scan the QR code at a mobile money kiosk and quickly withdraw money. The app is available to users in the DRC and will expand to support mobile money operators in other African countries.
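To make the Quick Withdraw idea concrete, here is a minimal sketch of how a scanned kiosk code might be turned into a USSD string so the user never types it by hand. The payload format (`agent=...&operator=...`), the `*123*2*...#` template, and the function name are all assumptions invented for illustration; real mobile money operators each define their own codes, and the scanning step itself (handled by ML Kit in the app) is not shown.

```python
from urllib.parse import parse_qs


def build_withdraw_code(qr_payload: str, amount: int) -> str:
    """Turn a scanned kiosk QR payload into a USSD withdrawal string.

    Assumes a hypothetical payload such as "agent=40123&operator=demo".
    """
    fields = parse_qs(qr_payload)
    agent = fields.get("agent", [""])[0]
    if not agent.isdigit():
        raise ValueError("QR payload has no numeric agent id")
    if amount <= 0:
        raise ValueError("amount must be positive")
    # Hypothetical template: *<service>*<menu option>*<agent id>*<amount>#
    return f"*123*2*{agent}*{amount}#"


if __name__ == "__main__":
    print(build_withdraw_code("agent=40123&operator=demo", 5000))
```

Validating the scanned fields before building the code reflects the problem described above: a single wrong digit in a USSD code can send money to the wrong person.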

Words by Arielle Bier

Illustration by Frances Haszard

Trashly

Developers: Elvin Rakhmankulov, Arthur Dickerson, Gabor Daniel Vass, Yury Ulasenka

Location: Chicago, U.S.A.

As climate change is being felt around the world, people are looking to reduce their carbon footprint and send less waste to landfills.

While most cities provide recycling services, many places have different rules, restrictions, and regulations. Because of poor labeling and inconsistent policies, non-recyclable objects fill over 25% of recycling bins.

Trashly makes recycling easier for consumers. Just point the on-device camera at an item, and through object detection, the app identifies and classifies plastic and paper cups, bags, bottles, etc. After analyzing this information through a custom TensorFlow Lite model, it reports if the object is recyclable and how to recycle it – depending on local rules – and shares details on nearby recycling bins.
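The step from "what is this object?" to "can I recycle it here?" can be sketched in a few lines. This is only an illustration under stated assumptions: the labels, the confidence threshold, and the per-region rules table are made up for the example and are not real recycling policies, and the detections are passed in directly rather than produced by Trashly's TensorFlow Lite model.

```python
from dataclasses import dataclass

# Hypothetical local rules: label -> accepted in curbside recycling?
LOCAL_RULES = {
    "chicago": {"plastic_bottle": True, "paper_cup": False, "plastic_bag": False},
    "san_francisco": {"plastic_bottle": True, "paper_cup": True, "plastic_bag": False},
}


@dataclass
class Detection:
    label: str         # class name reported by the (stubbed) detector
    confidence: float  # score between 0.0 and 1.0


def recycling_advice(detections, region, min_confidence=0.6):
    """Return a short message for the most confident detection."""
    confident = [d for d in detections if d.confidence >= min_confidence]
    if not confident:
        return "Not sure what this is. Try another photo."
    best = max(confident, key=lambda d: d.confidence)
    rules = LOCAL_RULES.get(region, {})
    if best.label not in rules:
        return f"No local rule found for {best.label}."
    if rules[best.label]:
        return f"{best.label}: recyclable in {region}."
    return f"{best.label}: not recyclable in {region}."


if __name__ == "__main__":
    seen = [Detection("paper_cup", 0.91), Detection("plastic_bottle", 0.40)]
    print(recycling_advice(seen, "chicago"))
```

Keeping the rules in a separate table from the model mirrors the point made above: the same object can be recyclable in one city and not in another.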

Currently active in Illinois, Pennsylvania, and California, Trashly lets users find 1,000 recycling centers with the “Near Me” feature, and plans to expand to other states and countries in the future, helping support responsible habits that make a difference.

Words by Arielle Bier

Illustration by Aless Mc

UnoDogs

Developer: Chinmay Mishra

Location: New Delhi, India

“We want to use ML to empower each dog owner to accurately judge their dog’s general health and work on it.”

Dogs depend on their owners for daily exercise, food, and care. Yet, despite their best intentions, many beloved dogs end up overweight, reducing the animal’s lifespan by up to 25%. UnoDogs helps owners better support their pet’s wellness, providing customized information and fitness programs. By tracking and measuring a dog’s health and giving accurate advice, UnoDogs tackles health problems before they start.

Using the Google Cloud Platform AutoML Vision capability to train an object detection model for analyzing live images, UnoDogs is able to calculate the dog’s Body Condition Score and give recommendations for ideal weight and size. In future versions, more ML-powered features will be available, like food recommendations, agility tests, and fitness plans.

Weight and exercise tracking details are then combined with real-time analysis, giving easy-to-follow meal and exercise programs, designed to keep the owner on target and inspired so their dog can lead its best life.

Words by Arielle Bier

Illustration by Choi Haeryung

AgriFarm

Developers: Mirwise Khan, Samina Ismail, Ehtisham Ahmed, Hassaan Khalid

Location: Balochistan, Pakistan

“We’re helping connect farmers and make use of AI for their productivity.”

Crop disease poses a constant threat to farmers around the world. And the impacts of food insecurity on health, society, and the economy can be devastating. AgriFarm helps farmers detect plant diseases, preventing major damage. To make this possible, the deep neural network classifier, used to identify the type of disease, is hosted on the Google Cloud AI Platform.

Further features include weather reports, video recommendations, and price predictions. Designed for use in remote rural areas, with limited internet access, AgriFarm covers fruits and vegetables such as tomatoes, corn and potatoes, and is expanding the dataset to function globally.

Words by Arielle Bier

Illustration by Buba Viedma

AgroDoc

Developer: Navneet Krishna

Location: Kochi, India

“AgroDoc could really help such people with less farming experience.”

Based on a crowdsourcing model, AgroDoc helps collect data from farmers with similar geographical locations and climates to diagnose plant diseases and make treatment plans. With the app, an infected leaf is scanned by an on-device camera and the TensorFlow Lite library helps detect the type of disease.

The data is analyzed in combination with key symptoms and simple steps are given for improving the plant’s health.

Words by Arielle Bier

Illustration by Buba Viedma

Stila

Developer: Yingding Wang

Location: Munich, Germany

“In modern society, things are changing so fast and work pressure is so high that our body reacts to these challenges like being hunted by a tiger.”

Stress exists in many forms, both positive and negative. Luckily, our bodies are built to self-regulate and adapt to changing circumstances. But when extreme events or conditions cause high levels of stress, negative effects can build up, leading to anxiety, depression, and long-term health damage. Stila (stress tracking in learning activities) monitors and tracks the body’s stress levels, so users can better understand and manage stress in their lives. To make this possible, the smartphone app works together with a wearable device, like the Fitbit wristband or a device running on Wear OS by Google, for recording their biofeedback.

A Firebase Custom Model detects and categorizes stress, while the TensorFlow Lite Interpreter allows information to be processed offline. The app tracks the body’s stress levels and is combined with brief reports of the user’s life events and environment. Then, a stress-level score is calculated, helping estimate stress during certain activities. Because every person reacts to stressors and stimuli in different ways, Stila learns from these reports and adapts how it works. It then gives feedback depending on each user’s rhythms and needs.

Individual traits can be used to further help personalize the user’s experience through transfer learning. By detecting and monitoring stress levels over time, users have the chance to better manage stress in their lives.

Words by Arielle Bier

Illustration by Linn Fritz

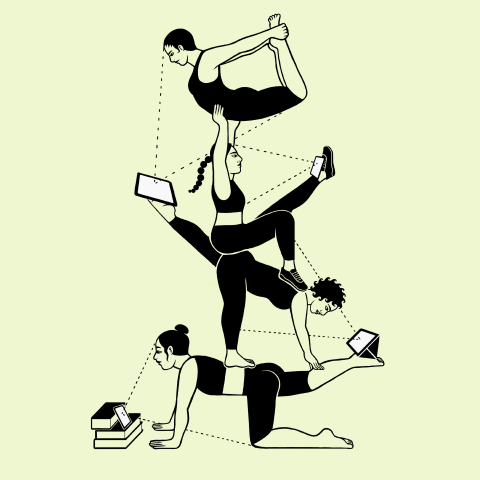

MixPose

Developers: Peter Ma, Sarah Han

Location: San Francisco, U.S.A.

“We are all regular yoga practitioners and we are all software developers. We wanted to explore how technologies can help to make yoga and fitness experiences better.”

MixPose is a live streaming platform that gives yoga teachers and fitness professionals the opportunity to teach, track alignment, and give feedback in real time. Static fitness videos only share information in one direction. But with this app, teachers can customize their lessons and directly engage with their students’ needs. Pose tracking detects each user’s movements, and positions are classified using ML Kit and PoseNet.

Then, live sensors and feedback systems inform them about their alignment. Added output to video features, like Chromecast, easily connect to larger screens for more immersive viewing. Designed with the 37 million Americans who practice yoga in mind, MixPose is enlisting over 100 yoga teachers for its launch. By innovating through AI at the Edge, 5G, and smart TV, this platform is bringing interactive courses directly to users in the comfort of their home.

Words by Arielle Bier

Illustration by Rachel Levit Ruiz

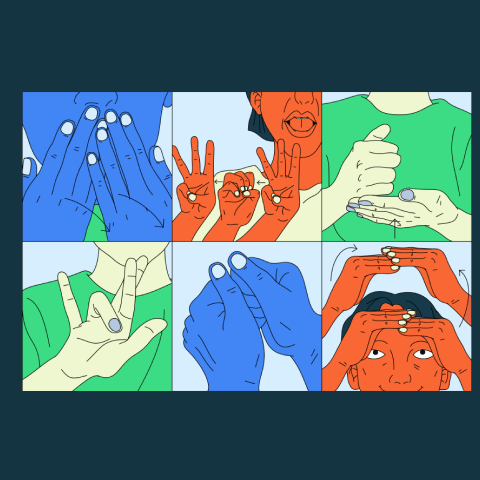

Leepi

Developers: Prince Patel, Aman Arora, Aditya Narayan

Location: Bengaluru, India

“Around 7 million people in India are differently-abled in hearing and speaking.”

Over seven million people in India are differently-abled in hearing and speaking, but few have access to sign language education. Because the range of languages and dialects is so diverse, creating a standard form of communication is nearly impossible.

With Leepi, students can learn hand gestures and symbols for American Sign Language. The app uses letter, symbol, facial, and intent recognition, with interactive exercises and real-time feedback. The TensorFlow Lite library and MediaPipe framework were used to make on-device processing more accurate and streamlined. And importantly, it is designed for offline use, so more students can learn barrier-free.

Words by Arielle Bier

Illustration by Xuetong Wang

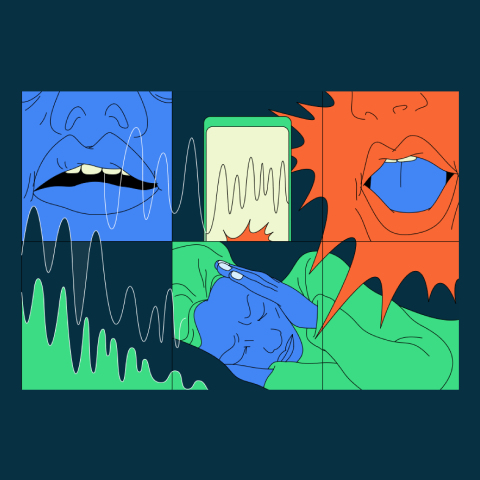

Snore and Cough

Developer: Ethan Fan

Location: Mountain View, CA, USA

“Our solution is to use audio classification through deep learning.”

Good sleep is vital for resting and repairing the human body. Yet 25% of adults snore on a regular basis, which could lead to sleep disruptions and potentially chronic health problems.

Through capturing, analyzing, and classifying audio with TensorFlow Lite, the Snore and Cough app identifies snoring and coughing, to help users seeking assistance from a medical professional.

Words by Arielle Bier

Illustration by Xuetong Wang

Path Finder

Developers: Colin Shelton, Jing Chang, Sam Grogan, Eric Emery

Location: Addison, Texas, U.S.A.

“We wanted to leverage machine learning to achieve a public good.”

When traveling through public environments, like a shopping mall or crowded street, moving obstacles constantly shift and change in unexpected ways. Sensory experiences like sight, sound, and touch can help avoid collisions and prevent accidents. But for the visually impaired, navigating through public environments means facing a series of unknowns. Path Finder could help people with visual impairments navigate through such complex situations by identifying and calculating the trajectories of objects moving in their path.

Custom alerts then inform the user of how to avoid these obstacles and suggest actions they can safely make. This app uses object detection from TensorFlow Lite to calculate distances of surrounding objects. It is designed to augment the user’s experience, share information, and give support, not overwhelm them in difficult situations. For this reason, Path Finder’s setup process is made to be conversational and is customized for users with limited vision, as well as the people helping them.

Both audible and haptic feedback are part of the obstacle alert system, while a range of pitches and frequencies communicate each object’s distance and direction. Audio patterns, like Morse code, are then layered and combined for sharing further information. Path Finder can help visually impaired users gain the advantage of foresight, making public environments easier to navigate.
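One way to picture how pitch can carry distance and direction is a simple mapping from an obstacle's position to a tone. The sketch below is an assumption for illustration only, not Path Finder's actual design: the 220 to 880 Hz range, the 10 meter cutoff, the left/right pan scale, and the function name `alert_tone` are all hypothetical.

```python
def alert_tone(distance_m, bearing_deg, max_range_m=10.0):
    """Map an obstacle's distance and bearing to (frequency_hz, pan).

    Closer obstacles produce a higher pitch. Pan runs from -1.0 (hard left)
    to 1.0 (hard right); bearing 0 means straight ahead.
    """
    # Clamp the distance to the supported range.
    d = min(max(distance_m, 0.0), max_range_m)
    closeness = 1.0 - d / max_range_m  # 0.0 at max range, 1.0 at contact
    frequency_hz = 220.0 + closeness * (880.0 - 220.0)

    # Clamp the bearing to the forward half-plane and scale it to [-1, 1].
    b = min(max(bearing_deg, -90.0), 90.0)
    pan = b / 90.0
    return round(frequency_hz, 1), round(pan, 2)


if __name__ == "__main__":
    # An obstacle 5 m away, 45 degrees to the left.
    print(alert_tone(5.0, -45.0))
```

A linear mapping is the simplest choice; a real system might prefer a logarithmic pitch scale, since listeners perceive pitch differences as ratios rather than absolute frequency steps.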

Words by Arielle Bier

Illustration by Sonya Korshenboym
