1. Introduction

Machine learning has become an important toolset in mobile development, enabling many smart capabilities in modern mobile apps. If you are a mobile developer who is new to machine learning and want a quick introduction to the machine learning techniques that you can integrate into your mobile app, this is the codelab for you!

In this codelab, you will experience the end-to-end process of training a machine learning model that can recognize handwritten digit images with TensorFlow and deploying it to an Android app.

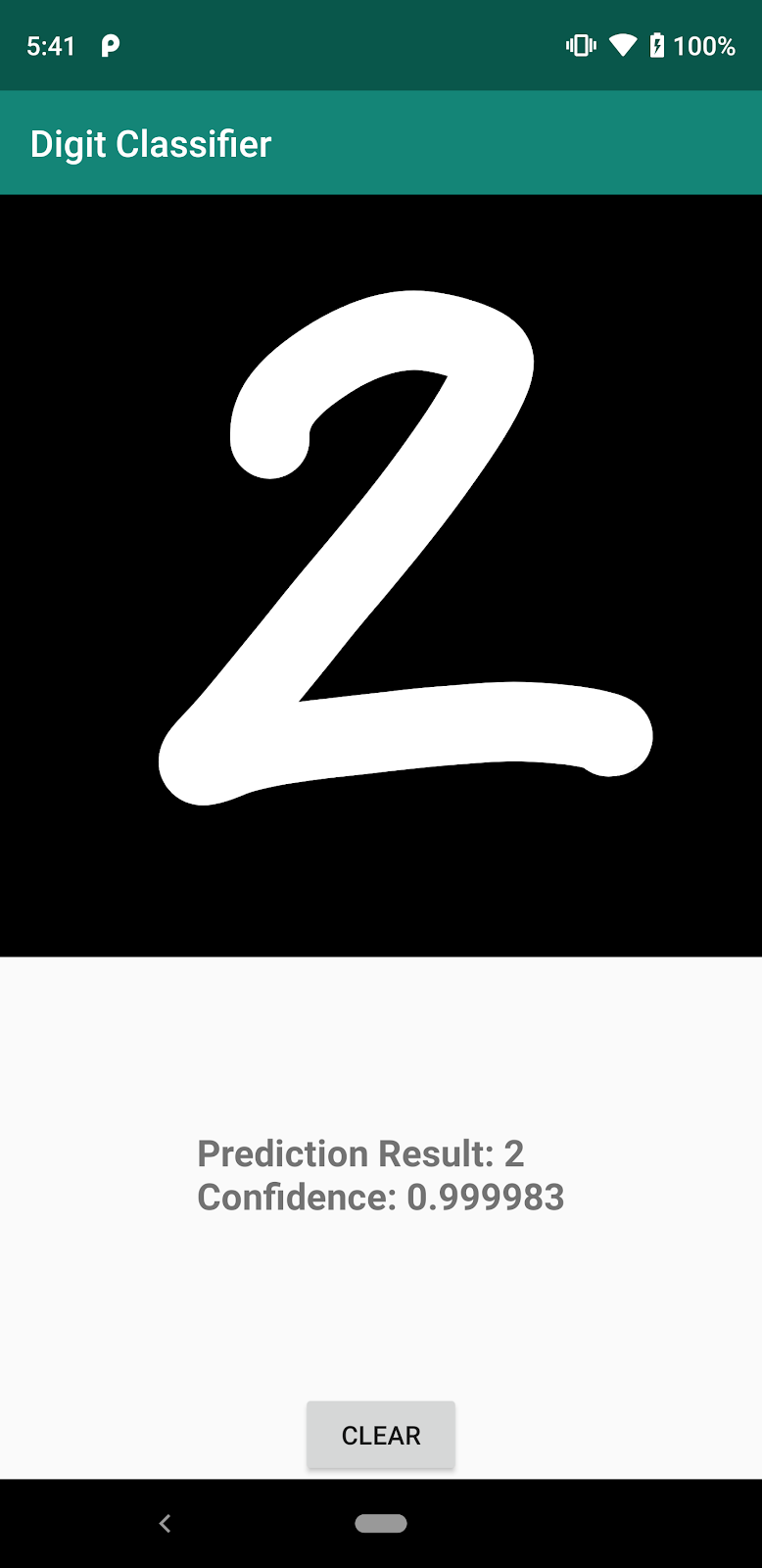

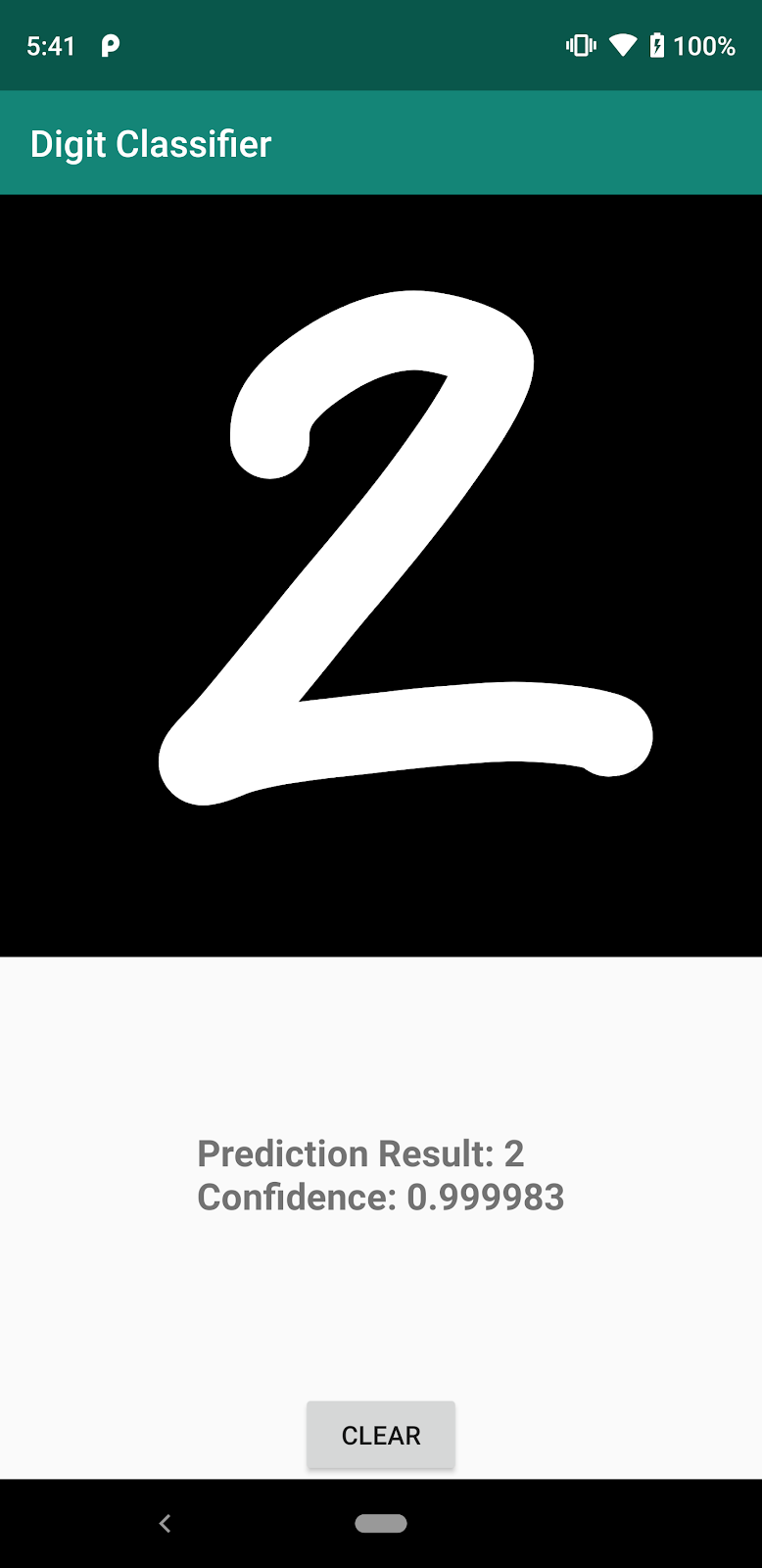

After finishing the codelab, you will have a working Android app that can recognize handwritten digits that you write.

If you run into any issues (code bugs, grammatical errors, unclear wording, etc.) as you work through this codelab, please report the issue via the Report a mistake link in the lower left corner of the codelab.

What is TensorFlow?

TensorFlow is an end-to-end open source platform for machine learning. It has a comprehensive, flexible ecosystem of tools, libraries, and community resources that lets researchers push the state of the art in machine learning and helps developers easily build and deploy machine-learning-powered applications.

TensorFlow Lite is a product in the TensorFlow ecosystem to help developers run TensorFlow models on mobile, embedded, and IoT devices. It enables on-device machine learning inference with low latency and a small binary size.

What you'll learn

- How to train a handwritten digit classifier model using TensorFlow.

- How to convert a TensorFlow model to TensorFlow Lite.

- How to deploy a TensorFlow Lite model to an Android app.

What you'll need

- Access to Google Colab or a Python environment with TensorFlow 2.0+

- A recent version of Android Studio (v4.1+)

- Android Studio Emulator or a physical Android device (v5.0+)

- Basic knowledge of Android development in Kotlin

2. Train a machine learning model

We will start by using TensorFlow to define and train our machine learning model that can recognize handwritten digits, known in machine learning terms as a digit classifier model. Next, we will convert the trained TensorFlow model to TensorFlow Lite to get ready for deployment.

This step is presented as a Python notebook that you can open in Google Colab.

Open in Colab

After finishing this step, you will have a TensorFlow Lite digit classifier model that is ready for deployment to a mobile app.

3. Add TensorFlow Lite to the Android app

Download the Android skeleton app

Download a zip archive that contains the source code of the Android app used in this codelab. Extract the archive on your local machine.

Import the app to Android Studio

- Open Android Studio.

- Click Import project (Gradle, Eclipse ADT, etc.).

- Choose the lite/codelabs/digit_classifier/android/start/ folder from where you extracted the archive.

- Wait for the import processes to finish.

Add the TensorFlow Lite model to the assets folder

- Copy the TensorFlow Lite model mnist.tflite that you trained earlier to the assets folder at lite/codelabs/digit_classifier/android/start/app/src/main/assets/.

Update build.gradle

- Go to build.gradle of the app module and find this block.

dependencies {
    ...
    // TODO: Add TF Lite
    ...
}

- Add TensorFlow Lite to the app's dependencies.

implementation 'org.tensorflow:tensorflow-lite:2.5.0'

- Then find this code block.

android {
    ...
    // TODO: Add an option to avoid compressing TF Lite model file
    ...
}

- Add the following lines to this code block to prevent Android from compressing TensorFlow Lite model files when generating the app binary. You must add this option for the model to work.

aaptOptions {
    noCompress "tflite"
}

- Click Sync Now to apply the changes.
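The skeleton app uses a Groovy build.gradle file, as the snippets in this section show. If a project used the Gradle Kotlin DSL (`build.gradle.kts`) instead, the same two changes might look roughly like the fragment below. This is a hedged sketch under that assumption, not part of the codelab's starter code.

```kotlin
// build.gradle.kts (app module): assumed Kotlin DSL equivalent of the Groovy snippets.
android {
    // ...
    aaptOptions {
        // Keep .tflite files uncompressed in the app binary.
        noCompress("tflite")
    }
}

dependencies {
    // ...
    implementation("org.tensorflow:tensorflow-lite:2.5.0")
}
```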

4. Initialize TensorFlow Lite interpreter

org.tensorflow.lite.Interpreter is the class that allows you to run your TensorFlow Lite model in your Android app. We will start by initializing an Interpreter instance with our model.

- Open DigitClassifier.kt. This is where we will add TensorFlow Lite code.

- First, add a field to the DigitClassifier class.

class DigitClassifier(private val context: Context) {
    private var interpreter: Interpreter? = null
    // ...
}

- Android Studio now raises an error: Unresolved reference: Interpreter. Follow its suggestion and import org.tensorflow.lite.Interpreter to fix the error.

- Next, find this code block.

private fun initializeInterpreter() {
    // TODO: Load the TF Lite model from file and initialize an interpreter.
    // ...
}

- Then add these lines to initialize a TensorFlow Lite interpreter instance using the mnist.tflite model from the assets folder.

// Load the TF Lite model from the asset folder.
val assetManager = context.assets
val model = loadModelFile(assetManager, "mnist.tflite")
val interpreter = Interpreter(model)

- Add these lines right below to read the model input shape from the model.

// Read input shape from model file
val inputShape = interpreter.getInputTensor(0).shape()
inputImageWidth = inputShape[1]
inputImageHeight = inputShape[2]
modelInputSize = FLOAT_TYPE_SIZE * inputImageWidth * inputImageHeight * PIXEL_SIZE

// Finish interpreter initialization
this.interpreter = interpreter
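To make the buffer-size arithmetic concrete, here is a small standalone sketch. It assumes a hypothetical input shape of `[1, 28, 28]` (28x28 is the MNIST image size) and assumes that `FLOAT_TYPE_SIZE` is 4 bytes and `PIXEL_SIZE` is 1 channel; the actual constants are defined in the skeleton app and are not shown in this section.

```kotlin
// Assumed values: a 32-bit float takes 4 bytes, and a grayscale pixel has 1 channel.
const val FLOAT_TYPE_SIZE = 4
const val PIXEL_SIZE = 1

// Computes the input buffer size in bytes from a shape laid out as [batch, dim1, dim2].
fun inputSizeInBytes(inputShape: IntArray): Int {
    val inputImageWidth = inputShape[1]
    val inputImageHeight = inputShape[2]
    return FLOAT_TYPE_SIZE * inputImageWidth * inputImageHeight * PIXEL_SIZE
}

fun main() {
    // Hypothetical shape for a 28x28 MNIST-style input.
    val shape = intArrayOf(1, 28, 28)
    println(inputSizeInBytes(shape)) // prints 3136 (4 * 28 * 28 * 1)
}
```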

- After we have finished using the TensorFlow Lite interpreter, we should close it to free up resources. In this sample, we tie the interpreter lifecycle to the MainActivity lifecycle, and we close the interpreter when the activity is about to be destroyed. Find this comment block in the DigitClassifier#close() method.

// TODO: close the TF Lite interpreter here

- Then add this line.

interpreter?.close()
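The `loadModelFile` helper called earlier in this step belongs to the skeleton app and is not shown in this section. As a rough, JVM-only sketch of the general idea, the hypothetical `mapFileReadOnly` function below memory-maps a file into a read-only `MappedByteBuffer`, which is a `ByteBuffer` and can therefore be handed to an `Interpreter` constructor. On Android the bytes would come from the app's assets rather than a `java.io.File`; that part is left out here.

```kotlin
import java.io.File
import java.io.RandomAccessFile
import java.nio.MappedByteBuffer
import java.nio.channels.FileChannel

// Hypothetical helper: memory-maps a whole file as a read-only buffer.
fun mapFileReadOnly(file: File): MappedByteBuffer =
    RandomAccessFile(file, "r").use { raf ->
        // The mapping stays valid after the file and its channel are closed.
        raf.channel.map(FileChannel.MapMode.READ_ONLY, 0, raf.length())
    }

fun main() {
    // Stand-in for a model file; a real .tflite file would be used in practice.
    val file = File.createTempFile("model", ".bin")
    file.writeBytes(byteArrayOf(1, 2, 3, 4))
    val buffer = mapFileReadOnly(file)
    println(buffer.capacity()) // prints 4
    file.delete()
}
```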

5. Run inference with TensorFlow Lite

Our TensorFlow Lite interpreter is set up, so let's write code to recognize the digit in the input image. We will need to do the following:

- Pre-process the input: convert a Bitmap instance to a ByteBuffer instance containing the pixel values of all pixels in the input image. We use ByteBuffer because it is faster than a Kotlin native float multidimensional array.

- Run inference.

- Post-process the output: convert the probability array to a human-readable string.

Let's write some code.

- Find this code block in DigitClassifier.kt.

private fun classify(bitmap: Bitmap): String {
    // ...
    // TODO: Add code to run inference with TF Lite.
    // ...
}

- Add code to convert the input Bitmap instance to a ByteBuffer instance to feed to the model.

// Preprocessing: resize the input image to match the model input shape.
val resizedImage = Bitmap.createScaledBitmap(
    bitmap,
    inputImageWidth,
    inputImageHeight,
    true
)
val byteBuffer = convertBitmapToByteBuffer(resizedImage)
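`convertBitmapToByteBuffer` is provided by the skeleton app and is not shown here. The sketch below illustrates one way such a conversion could work without any Android dependency. It takes packed ARGB pixel integers (the format that `Bitmap.getPixels` produces), averages the color channels into a grayscale value, scales it to the range 0 to 1, and writes it as a float into a direct `ByteBuffer`. The name `pixelsToByteBuffer` and the grayscale-by-averaging step are assumptions for illustration and may differ from what the skeleton app does.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

// Hypothetical helper: converts packed ARGB pixels to normalized grayscale floats.
fun pixelsToByteBuffer(pixels: IntArray): ByteBuffer {
    // 4 bytes per float, one float per pixel.
    val buffer = ByteBuffer.allocateDirect(4 * pixels.size)
    buffer.order(ByteOrder.nativeOrder())
    for (pixel in pixels) {
        val r = (pixel shr 16) and 0xFF
        val g = (pixel shr 8) and 0xFF
        val b = pixel and 0xFF
        // Average the channels, then scale from [0, 255] to [0, 1].
        buffer.putFloat((r + g + b) / 3.0f / 255.0f)
    }
    // Reset the position so the buffer can be read from the start.
    buffer.rewind()
    return buffer
}

fun main() {
    // One white pixel and one black pixel, both fully opaque.
    val buffer = pixelsToByteBuffer(intArrayOf(0xFFFFFFFF.toInt(), 0xFF000000.toInt()))
    println(buffer.getFloat()) // prints 1.0
    println(buffer.getFloat()) // prints 0.0
}
```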

- Then run inference with the preprocessed input.

// Define an array to store the model output.
val output = Array(1) { FloatArray(OUTPUT_CLASSES_COUNT) }

// Run inference with the input data.
interpreter?.run(byteBuffer, output)

- Then identify the digit with the highest probability from the model output, and return a human-readable string that contains the prediction result and confidence. Replace the return statement that is in the starting code block.

// Post-processing: find the digit that has the highest probability
// and return it as a human-readable string.
val result = output[0]
val maxIndex = result.indices.maxBy { result[it] } ?: -1
val resultString = "Prediction Result: %d\nConfidence: %2f"
    .format(maxIndex, result[maxIndex])

return resultString
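The post-processing step is an argmax over the output probabilities. The standalone sketch below shows the same idea on a made-up probability array; the values are invented for illustration and are not real model output. It uses `maxByOrNull`, which assumes Kotlin 1.4 or newer, and a `%.2f` format so that the confidence is rounded to two decimals.

```kotlin
// Returns the index of the largest value, or -1 for an empty array.
fun argmax(probabilities: FloatArray): Int =
    probabilities.indices.maxByOrNull { probabilities[it] } ?: -1

fun main() {
    // Invented probabilities for digits 0 through 9.
    val result = floatArrayOf(0.01f, 0.02f, 0.05f, 0.80f, 0.02f, 0.03f, 0.02f, 0.02f, 0.02f, 0.01f)
    val maxIndex = argmax(result)
    // The highest value sits at index 3, so the predicted digit is 3.
    println("Prediction Result: %d\nConfidence: %.2f".format(maxIndex, result[maxIndex]))
}
```

Returning -1 for an empty array mirrors the fallback in the codelab snippet; a caller would need to check for it before indexing into the array.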

6. Deploy and test the app

Let's deploy the app to an Android Emulator or a physical Android device to test it.

- Click Run in the Android Studio toolbar to run the app.

- Draw any digit on the drawing pad and see if the app can recognize it.

7. Advanced: Improve accuracy

You may have noticed that the app did not recognize the digits you drew as well as you expected. In this step, we will learn how machine learning models can perform well in testing but badly in production. Then we will explore how to improve accuracy with data augmentation.

Open in Colab

After finishing this step, you will have an improved TensorFlow Lite digit classifier model that you can redeploy to the mobile app.

8. Congratulations!

You have gone through an end-to-end journey of training a digit classification model on the MNIST dataset using TensorFlow, and you have deployed the model to a mobile app that uses TensorFlow Lite.

Next steps

- TensorFlow Lite also supports iOS devices, IoT devices running Linux, and microcontrollers. Learn more about the iOS version of digit classifier.

- For a better understanding of machine learning and TensorFlow, please consider taking our training course.

- Also check out our collection of pre-trained TensorFlow Lite models that can help you with many on-device machine learning use cases such as image segmentation and style transfer.