1. Introduction

Why use Vulkan in my game?

Vulkan is the primary low-level graphics API on Android. Vulkan makes it possible to achieve higher performance in games that implement their own game engine and renderer.

Vulkan is available on Android from Android 7.0 (API level 24). Vulkan 1.1 support is a requirement for new 64-bit Android devices beginning with Android 10.0. The 2022 Android Baseline Profile also sets a minimum Vulkan API version of 1.1.

Games that have lots of draw calls and that use OpenGL ES can see significant driver overhead due to the high cost of making draw calls within OpenGL ES. These games can become CPU bound from spending large portions of their frame time in the graphics driver. These games can also see significant reductions in CPU and power use by switching from OpenGL ES to Vulkan. This is especially applicable if the game has complex scenes that can't effectively use instancing to reduce draw calls.

What you'll build

In this codelab, you're going to take a basic C++ Android App and add code to set up the Vulkan rendering pipeline. You will then implement code that uses Vulkan to render a textured, rotating triangle on the screen.

What you'll need

- Android Studio Iguana or later.

- An Android device running Android 10.0 or later, connected to your computer, that has Developer options and USB debugging enabled.

2. Getting set up

Setting up your development environment

If you have not worked with native projects in Android Studio before, you may need to install the Android NDK and CMake. If you already have them installed, proceed to Setting up the project.

Checking that the SDK, NDK and CMake are installed

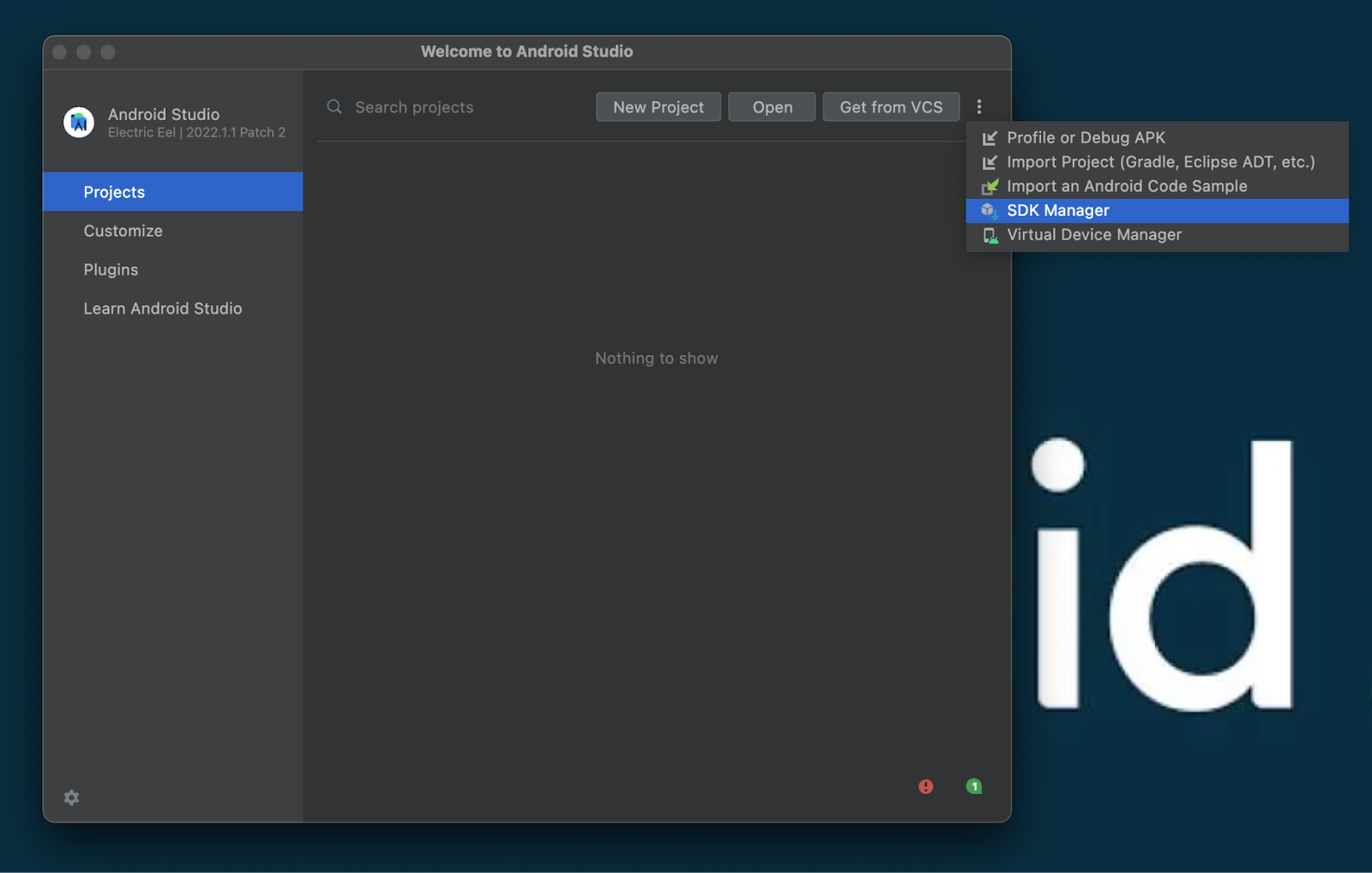

Launch Android Studio. When the Welcome to Android Studio window is displayed, open the Configure dropdown menu and select the SDK Manager option.

If you already have a project open, you can instead open the SDK Manager from the Tools menu: click Tools and select SDK Manager.

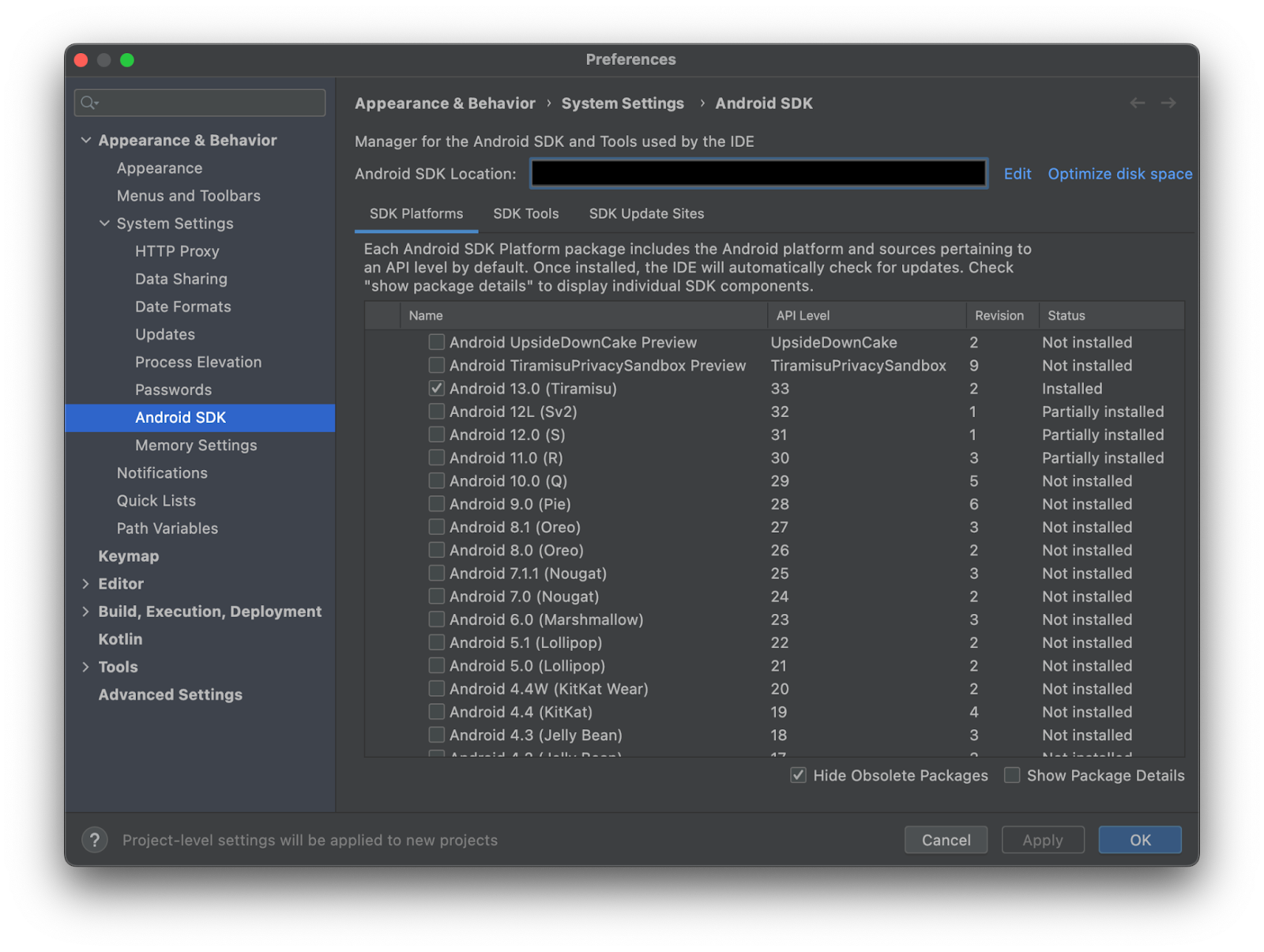

In the sidebar, navigate to Appearance & Behavior > System Settings > Android SDK. Select the SDK Platforms tab in the Android SDK pane to display the list of SDK platforms. Ensure Android SDK 12.0 or later is installed.

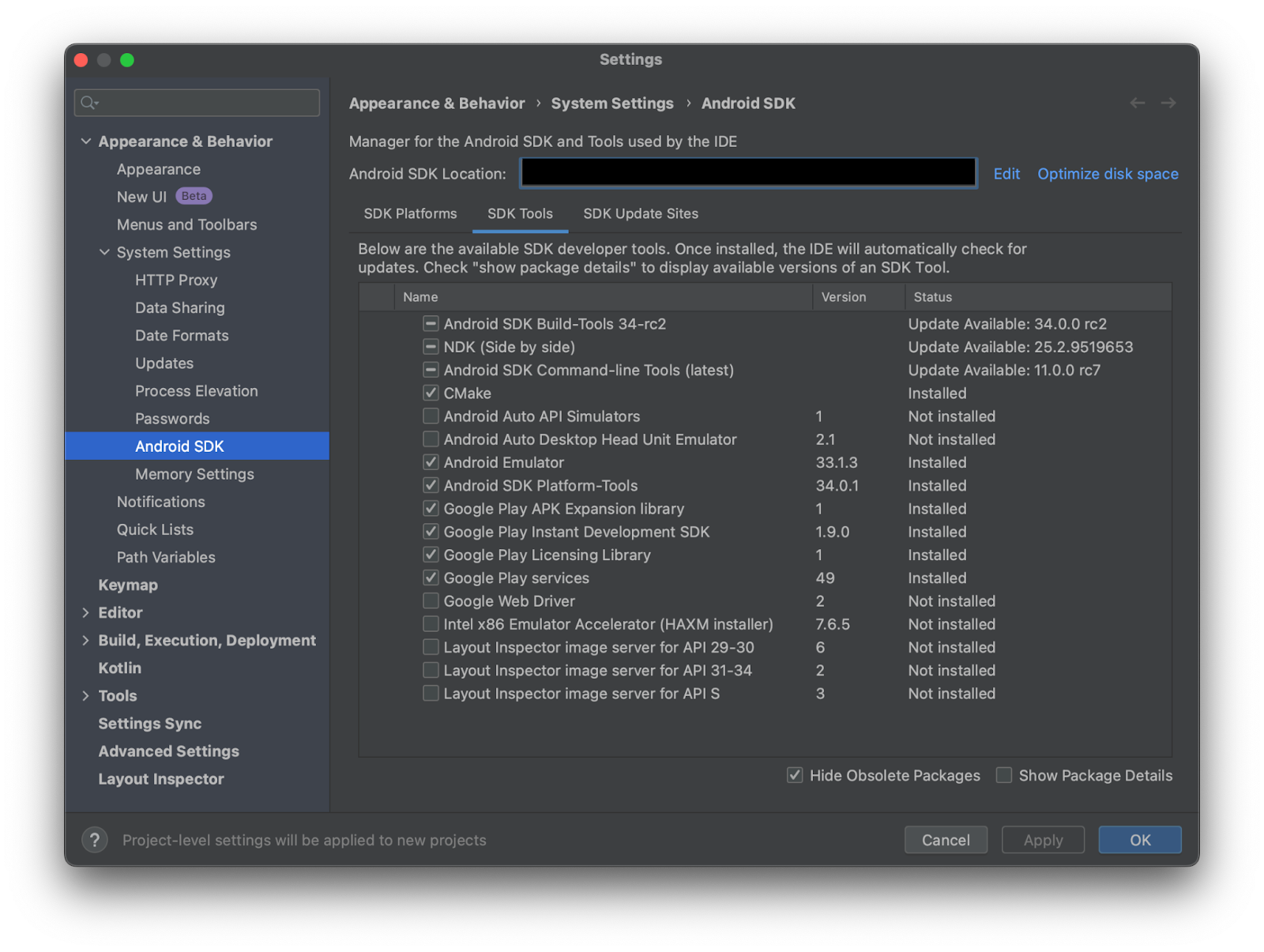

Next, select the SDK Tools tab and ensure NDK and CMake are installed.

Note: The exact version shouldn't matter as long as they're reasonably new, but we're currently on NDK 26.1.10909125 and CMake 3.22.1. The version of the NDK being installed by default will change over time with subsequent NDK releases. If you need to install a specific version of the NDK, follow the instructions in the Android Studio reference for installing the NDK under the section "Install a specific version of the NDK".

Once all the required tools are checked, click the Apply button at the bottom of the window to install them. You may then close the Android SDK window by clicking the OK button.

Setting up the project

A starting project derived from the C++ template has been set up for you in a git repository. The starting project implements app initialization and event handling, but does not yet do any graphics setup or rendering.

Cloning the repo

From the command line, change to the directory where you want the project to be created, then clone it from GitHub:

```shell
git clone -b codelab/start https://github.com/android/getting-started-with-vulkan-on-android-codelab.git --recurse-submodules
```

Make sure that you're starting from the initial commit of the repo titled [codelab] start: empty app.

Open the project in Android Studio, build it, and run it on an attached device. The app launches to an empty black screen; you will add graphics rendering in the following sections.

3. Create a Vulkan instance and device

The first step in initializing the Vulkan API for use is to create a Vulkan instance object (VkInstance).

The VkInstance object holds the application's per-application state for the Vulkan runtime. It is the root object of the Vulkan API. The application uses it to activate layers, query information about the available physical devices, and create device objects from them.

When an application creates a VkInstance, it must provide information about itself, such as its name, version, and the Vulkan instance extensions it needs.

The Vulkan API design includes a layer system that provides a mechanism for intercepting and processing API calls before they reach the GPU driver. The application can designate layers to activate when creating a VkInstance. The most commonly used layer is the Vulkan validation layer, which provides runtime analysis of API usage for errors or suboptimal performance practices.

Once a VkInstance has been created, the application can use it to query for the available physical devices on the system, create logical devices, and create surfaces to render to.

A VkInstance is typically created once at the start of the application and destroyed at the end. The API allows an application to create more than one VkInstance. However, a single instance can enumerate every GPU on the system and create surfaces for multiple windows, so most applications need only one.

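```cpp
// CODELAB: hellovk.h

void HelloVK::createInstance() {
  // Validation layers were requested but are not available on this device.
  assert(!enableValidationLayers || checkValidationLayerSupport());

  // Instance extensions for the Android surface (plus debug utils when
  // validation is enabled); getRequiredExtensions() is defined below.
  std::vector<const char *> requiredExtensions =
      getRequiredExtensions(enableValidationLayers);

  VkApplicationInfo appInfo{};
  appInfo.sType = VK_STRUCTURE_TYPE_APPLICATION_INFO;
  appInfo.pApplicationName = "Hello Triangle";
  appInfo.applicationVersion = VK_MAKE_VERSION(1, 0, 0);
  appInfo.pEngineName = "No Engine";
  appInfo.engineVersion = VK_MAKE_VERSION(1, 0, 0);
  appInfo.apiVersion = VK_API_VERSION_1_0;

  VkInstanceCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;
  createInfo.pNext = nullptr;
  createInfo.pApplicationInfo = &appInfo;
  createInfo.enabledExtensionCount =
      static_cast<uint32_t>(requiredExtensions.size());
  createInfo.ppEnabledExtensionNames = requiredExtensions.data();

  // Activate the validation layers only when they were requested.
  if (enableValidationLayers) {
    createInfo.enabledLayerCount =
        static_cast<uint32_t>(validationLayers.size());
    createInfo.ppEnabledLayerNames = validationLayers.data();
  } else {
    createInfo.enabledLayerCount = 0;
  }

  VK_CHECK(vkCreateInstance(&createInfo, nullptr, &instance));
}
```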

A VkPhysicalDevice is a Vulkan object that represents a physical Vulkan device on the system. Most Android devices return only a single VkPhysicalDevice, which represents the GPU. However, a PC or an Android device could enumerate multiple physical devices. An example is a computer that contains both a discrete GPU and an integrated GPU.

VkPhysicalDevices can be queried for their properties, such as their name, vendor, driver version, and supported features. This information can be used to choose the best physical device for a particular application.

Once a VkPhysicalDevice has been chosen, the application can create a logical device from it. A logical device is a representation of the physical device that is specific to the application. It has its own state and resources, and it is independent of other logical devices that may be created from the same physical device.

There are different types of queues that originate from different Queue Families and each family of queues allows only a subset of commands. For example, there could be a queue family that only allows processing of compute commands or one that only allows memory transfer related commands.

A VkPhysicalDevice can enumerate all of its available queue families. Here we need a queue family that supports graphics commands and one that can present to our surface; these are often the same family. A device may also expose families that support only compute or transfer operations. A queue family does not have its own handle type. Instead, it is identified by a uint32_t index into the list of queue family properties reported by its parent VkPhysicalDevice.
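Because a queue family is just an index, finding one reduces to scanning a list of capability flags. The sketch below looks for a family that has some capabilities and lacks others, for example a compute family without graphics support. It uses plain integers instead of VkQueueFamilyProperties so it runs without a GPU. kGraphics, kCompute, kTransfer and findFamily are hypothetical names. The flag values mirror VK_QUEUE_GRAPHICS_BIT (0x1), VK_QUEUE_COMPUTE_BIT (0x2) and VK_QUEUE_TRANSFER_BIT (0x4).

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical constants mirroring the VK_QUEUE_*_BIT values.
constexpr uint32_t kGraphics = 0x1;
constexpr uint32_t kCompute = 0x2;
constexpr uint32_t kTransfer = 0x4;

// familyFlags[i] holds the queueFlags of queue family i. Returns the index of
// the first family that has every bit in `required` and none in `excluded`.
std::optional<uint32_t> findFamily(const std::vector<uint32_t> &familyFlags,
                                   uint32_t required, uint32_t excluded = 0) {
  const auto count = static_cast<uint32_t>(familyFlags.size());
  for (uint32_t i = 0; i < count; ++i) {
    if ((familyFlags[i] & required) == required &&
        (familyFlags[i] & excluded) == 0) {
      return i;  // the family is identified only by this index
    }
  }
  return std::nullopt;
}
```

For example, findFamily(flags, kCompute, kGraphics) asks for a dedicated compute family. Code that only needs graphics, like this codelab, passes just kGraphics.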

A single VkPhysicalDevice can have multiple logical devices created from it, although most applications, including this one, create just one.

A VkDevice is a Vulkan object that represents a logical Vulkan device. It is a thin abstraction over the physical device, and provides all of the functionality needed to create and manage Vulkan resources, such as buffers, images, and shaders.

A VkDevice is created from a VkPhysicalDevice and belongs to the application that created it.

A VkSurfaceKHR object represents a surface that can be the target of rendering operations. To display graphics on the device screen, you will create a surface using a reference to the application window object. Once a VkSurfaceKHR object has been created, the application can use it to create a VkSwapchainKHR object.

A VkSwapchainKHR object owns the images that we render to before they are shown on the screen. It is essentially a queue of images waiting to be presented. We acquire an image from it, draw to that image, and then return it to the queue for presentation. Exactly how the queue works, and the conditions for presenting an image from it, depend on how the swapchain is set up. Its general purpose is to synchronize the presentation of images with the refresh rate of the screen.

```cpp
// CODELAB: hellovk.h - Data Types

#include <optional>
#include <vector>

#include <android/native_window.h>

struct QueueFamilyIndices {
  std::optional<uint32_t> graphicsFamily;
  std::optional<uint32_t> presentFamily;

  bool isComplete() const {
    return graphicsFamily.has_value() && presentFamily.has_value();
  }
};

struct SwapChainSupportDetails {
  VkSurfaceCapabilitiesKHR capabilities;
  std::vector<VkSurfaceFormatKHR> formats;
  std::vector<VkPresentModeKHR> presentModes;
};

// Lets std::unique_ptr<ANativeWindow, ANativeWindowDeleter> release the
// window reference automatically.
struct ANativeWindowDeleter {
  void operator()(ANativeWindow *window) { ANativeWindow_release(window); }
};
```

If you need to debug your application, you can enable validation layers. The following helpers check whether the requested validation layers are available and build the list of instance extensions the game needs.

```cpp
// CODELAB: hellovk.h

bool HelloVK::checkValidationLayerSupport() {
  uint32_t layerCount = 0;
  vkEnumerateInstanceLayerProperties(&layerCount, nullptr);
  std::vector<VkLayerProperties> availableLayers(layerCount);
  vkEnumerateInstanceLayerProperties(&layerCount, availableLayers.data());

  // Every requested validation layer must be present.
  for (const char *layerName : validationLayers) {
    bool layerFound = false;
    for (const auto &layerProperties : availableLayers) {
      if (strcmp(layerName, layerProperties.layerName) == 0) {
        layerFound = true;
        break;
      }
    }
    if (!layerFound) {
      return false;
    }
  }
  return true;
}

std::vector<const char *> HelloVK::getRequiredExtensions(
    bool enableValidationLayers) {
  std::vector<const char *> extensions;
  extensions.push_back(VK_KHR_SURFACE_EXTENSION_NAME);          // "VK_KHR_surface"
  extensions.push_back(VK_KHR_ANDROID_SURFACE_EXTENSION_NAME);  // "VK_KHR_android_surface"
  if (enableValidationLayers) {
    extensions.push_back(VK_EXT_DEBUG_UTILS_EXTENSION_NAME);
  }
  return extensions;
}
```
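The snippets in this section use validationLayers and enableValidationLayers but do not show where they are declared. The sketch below is one plausible set of declarations, assuming validation is wanted only in debug builds. These exact declarations are an assumption, not code taken from the starting project. They are shown at namespace scope for brevity; kValidationLayerName is a hypothetical name. VK_LAYER_KHRONOS_validation is the name of the Khronos validation layer. On Android this layer is generally not preinstalled on devices, so it usually has to be packaged with the app before checkValidationLayerSupport() can find it.

```cpp
#include <cassert>
#include <vector>

// Name of the Khronos validation layer.
constexpr const char *kValidationLayerName = "VK_LAYER_KHRONOS_validation";

// Layers passed to vkCreateInstance() when validation is enabled.
const std::vector<const char *> validationLayers = {kValidationLayerName};

// Validate only in debug builds; release builds define NDEBUG.
#ifdef NDEBUG
const bool enableValidationLayers = false;
#else
const bool enableValidationLayers = true;
#endif
```

Tying the flag to NDEBUG means release builds skip the layer entirely.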

Once you've chosen the layers and extensions and created the VkInstance, create the VkSurfaceKHR that represents the window to render to.

```cpp
// CODELAB: hellovk.h

void HelloVK::createSurface() {
  assert(window != nullptr);  // window not initialized

  const VkAndroidSurfaceCreateInfoKHR create_info{
      .sType = VK_STRUCTURE_TYPE_ANDROID_SURFACE_CREATE_INFO_KHR,
      .pNext = nullptr,
      .flags = 0,
      .window = window.get()};

  VK_CHECK(vkCreateAndroidSurfaceKHR(instance, &create_info,
                                     nullptr /* pAllocator */, &surface));
}
```

Enumerate the available physical devices (GPUs) and pick the first suitable one.

```cpp
// CODELAB: hellovk.h

void HelloVK::pickPhysicalDevice() {
  uint32_t deviceCount = 0;
  vkEnumeratePhysicalDevices(instance, &deviceCount, nullptr);
  assert(deviceCount > 0);  // failed to find GPUs with Vulkan support!

  std::vector<VkPhysicalDevice> devices(deviceCount);
  vkEnumeratePhysicalDevices(instance, &deviceCount, devices.data());

  // Take the first device that meets all of our requirements.
  for (const auto &device : devices) {
    if (isDeviceSuitable(device)) {
      physicalDevice = device;
      break;
    }
  }

  assert(physicalDevice != VK_NULL_HANDLE);  // failed to find a suitable GPU!
}
```

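A device is suitable if it meets three requirements:

- It has queue families that support graphics and presentation to our surface.
- It supports the required device extensions.
- It offers at least one surface format and one present mode for the swapchain.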

```cpp
// CODELAB: hellovk.h

bool HelloVK::isDeviceSuitable(VkPhysicalDevice device) {
  QueueFamilyIndices indices = findQueueFamilies(device);
  bool extensionsSupported = checkDeviceExtensionSupport(device);

  // The swapchain can only be queried once the swapchain extension is known
  // to be supported.
  bool swapChainAdequate = false;
  if (extensionsSupported) {
    SwapChainSupportDetails swapChainSupport = querySwapChainSupport(device);
    swapChainAdequate = !swapChainSupport.formats.empty() &&
                        !swapChainSupport.presentModes.empty();
  }

  return indices.isComplete() && extensionsSupported && swapChainAdequate;
}
```

```cpp
// CODELAB: hellovk.h

bool HelloVK::checkDeviceExtensionSupport(VkPhysicalDevice device) {
  uint32_t extensionCount = 0;
  vkEnumerateDeviceExtensionProperties(device, nullptr, &extensionCount,
                                       nullptr);
  std::vector<VkExtensionProperties> availableExtensions(extensionCount);
  vkEnumerateDeviceExtensionProperties(device, nullptr, &extensionCount,
                                       availableExtensions.data());

  // Remove every available extension from the required set; anything left
  // over is missing.
  std::set<std::string> requiredExtensions(deviceExtensions.begin(),
                                           deviceExtensions.end());
  for (const auto &extension : availableExtensions) {
    requiredExtensions.erase(extension.extensionName);
  }
  return requiredExtensions.empty();
}
```
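checkDeviceExtensionSupport() computes a set difference: it starts from the required names and erases every name the device reports. A variant that returns the leftover names instead of a bool can make failures easier to log. The sketch below uses lists of std::string in place of VkExtensionProperties. missingExtensions is a hypothetical helper, not part of the codelab.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Returns the required extension names that are absent from `available`,
// in sorted order (std::set ordering).
std::vector<std::string> missingExtensions(
    const std::vector<std::string> &required,
    const std::vector<std::string> &available) {
  std::set<std::string> remaining(required.begin(), required.end());
  for (const auto &name : available) {
    remaining.erase(name);  // erasing a name that is not present is a no-op
  }
  return std::vector<std::string>(remaining.begin(), remaining.end());
}
```

An empty result corresponds to checkDeviceExtensionSupport() returning true.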

```cpp
// CODELAB: hellovk.h

QueueFamilyIndices HelloVK::findQueueFamilies(VkPhysicalDevice device) {
  QueueFamilyIndices indices;

  uint32_t queueFamilyCount = 0;
  vkGetPhysicalDeviceQueueFamilyProperties(device, &queueFamilyCount, nullptr);
  std::vector<VkQueueFamilyProperties> queueFamilies(queueFamilyCount);
  vkGetPhysicalDeviceQueueFamilyProperties(device, &queueFamilyCount,
                                           queueFamilies.data());

  // A queue family is identified by its index i in this array.
  uint32_t i = 0;
  for (const auto &queueFamily : queueFamilies) {
    if (queueFamily.queueFlags & VK_QUEUE_GRAPHICS_BIT) {
      indices.graphicsFamily = i;
    }

    VkBool32 presentSupport = false;
    vkGetPhysicalDeviceSurfaceSupportKHR(device, i, surface, &presentSupport);
    if (presentSupport) {
      indices.presentFamily = i;
    }

    // Stop as soon as both a graphics and a present family are known.
    if (indices.isComplete()) {
      break;
    }
    i++;
  }
  return indices;
}
```

Once you've chosen the physical device, create a logical device (a VkDevice). This represents an initialized Vulkan device that is ready to create all of the other objects your application uses. The same function also retrieves the graphics and present queues.

```cpp
// CODELAB: hellovk.h

void HelloVK::createLogicalDeviceAndQueue() {
  QueueFamilyIndices indices = findQueueFamilies(physicalDevice);

  // One VkDeviceQueueCreateInfo per distinct queue family; the graphics and
  // present families are often the same.
  std::vector<VkDeviceQueueCreateInfo> queueCreateInfos;
  std::set<uint32_t> uniqueQueueFamilies = {indices.graphicsFamily.value(),
                                            indices.presentFamily.value()};
  float queuePriority = 1.0f;
  for (uint32_t queueFamily : uniqueQueueFamilies) {
    VkDeviceQueueCreateInfo queueCreateInfo{};
    queueCreateInfo.sType = VK_STRUCTURE_TYPE_DEVICE_QUEUE_CREATE_INFO;
    queueCreateInfo.queueFamilyIndex = queueFamily;
    queueCreateInfo.queueCount = 1;
    queueCreateInfo.pQueuePriorities = &queuePriority;
    queueCreateInfos.push_back(queueCreateInfo);
  }

  // No optional device features are needed for this sample.
  VkPhysicalDeviceFeatures deviceFeatures{};

  VkDeviceCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_DEVICE_CREATE_INFO;
  createInfo.queueCreateInfoCount =
      static_cast<uint32_t>(queueCreateInfos.size());
  createInfo.pQueueCreateInfos = queueCreateInfos.data();
  createInfo.pEnabledFeatures = &deviceFeatures;
  createInfo.enabledExtensionCount =
      static_cast<uint32_t>(deviceExtensions.size());
  createInfo.ppEnabledExtensionNames = deviceExtensions.data();

  // Device layers are deprecated; matching the instance layers keeps older
  // Vulkan implementations compatible.
  if (enableValidationLayers) {
    createInfo.enabledLayerCount =
        static_cast<uint32_t>(validationLayers.size());
    createInfo.ppEnabledLayerNames = validationLayers.data();
  } else {
    createInfo.enabledLayerCount = 0;
  }

  VK_CHECK(vkCreateDevice(physicalDevice, &createInfo, nullptr, &device));

  // Each family was created with one queue, so queue index 0 is valid.
  vkGetDeviceQueue(device, indices.graphicsFamily.value(), 0, &graphicsQueue);
  vkGetDeviceQueue(device, indices.presentFamily.value(), 0, &presentQueue);
}
```
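createLogicalDeviceAndQueue() puts the graphics and present family indices into a std::set before building the VkDeviceQueueCreateInfo array. The deduplication matters because each queueFamilyIndex may appear only once in VkDeviceCreateInfo::pQueueCreateInfos. On many devices, a single family supports both graphics and presentation. The helper below isolates that step as a sketch; queueFamiliesToCreate is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <vector>

// Returns the distinct queue family indices that need a
// VkDeviceQueueCreateInfo, in ascending order.
std::vector<uint32_t> queueFamiliesToCreate(uint32_t graphicsFamily,
                                            uint32_t presentFamily) {
  std::set<uint32_t> unique = {graphicsFamily, presentFamily};
  return std::vector<uint32_t>(unique.begin(), unique.end());
}
```

When the two indices match, only one queue-create entry is produced. That is also the case in which createSwapChain() can use VK_SHARING_MODE_EXCLUSIVE.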

At the end of this step, you will still see only a black window, because setup is not finished yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: create instance and device.

4. Create Swapchain and sync objects

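A VkSwapchainKHR is a Vulkan object that represents a queue of images that can be presented to the display. It is used to implement double or triple buffering: the app renders into one image while another is being displayed. Combined with a vsync present mode such as FIFO, this avoids tearing and lets rendering continue while a previous image is on screen.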

To create a VkSwapchain, an application must first create a VkSurfaceKHR object. We have already created our VkSurfaceKHR object when we set up the window during the create instance step.

The VkSwapchainKHR object will have many associated images. These images are used to store the rendered scene. The application can acquire an image from the VkSwapchainKHR object, render to it, and then present it to the display.

Once an image has been presented to the display, it is no longer available to the application. The application must acquire another image from the VkSwapchainKHR object before it can render again.

A swapchain is typically created when the application starts. However, it must be recreated whenever the surface changes, for example when the window is destroyed and recreated or its size changes. An application can also own several swapchains at once, such as one per window.

Sync objects coordinate work. Vulkan provides VkFence, VkSemaphore, and VkEvent, and even this simple example needs two of them:

- Semaphores order work on the GPU. Rendering waits until a swapchain image has been acquired, and presentation waits until rendering has finished.
- Fences let the CPU wait until the GPU has finished a frame before reusing that frame's resources.

VkEvent is not used here.

```cpp
// CODELAB: hellovk.h

void HelloVK::createSyncObjects() {
  imageAvailableSemaphores.resize(MAX_FRAMES_IN_FLIGHT);
  renderFinishedSemaphores.resize(MAX_FRAMES_IN_FLIGHT);
  inFlightFences.resize(MAX_FRAMES_IN_FLIGHT);

  VkSemaphoreCreateInfo semaphoreInfo{};
  semaphoreInfo.sType = VK_STRUCTURE_TYPE_SEMAPHORE_CREATE_INFO;

  // Start signaled so the first wait on each fence does not block forever.
  VkFenceCreateInfo fenceInfo{};
  fenceInfo.sType = VK_STRUCTURE_TYPE_FENCE_CREATE_INFO;
  fenceInfo.flags = VK_FENCE_CREATE_SIGNALED_BIT;

  // One set of synchronization objects per frame in flight.
  for (size_t i = 0; i < MAX_FRAMES_IN_FLIGHT; i++) {
    VK_CHECK(vkCreateSemaphore(device, &semaphoreInfo, nullptr,
                               &imageAvailableSemaphores[i]));
    VK_CHECK(vkCreateSemaphore(device, &semaphoreInfo, nullptr,
                               &renderFinishedSemaphores[i]));
    VK_CHECK(vkCreateFence(device, &fenceInfo, nullptr, &inFlightFences[i]));
  }
}
```
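The arrays above hold one set of synchronization objects per frame in flight. A common way to use them is to cycle a frame index through those slots. Before reusing slot i, the CPU waits on inFlightFences[i]. The fences are created with VK_FENCE_CREATE_SIGNALED_BIT so that the very first wait on each fence returns immediately, rather than blocking on work that was never submitted. The sketch below assumes two frames in flight. kMaxFramesInFlight and nextFrame are hypothetical names; the codelab defines its own MAX_FRAMES_IN_FLIGHT.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical value standing in for MAX_FRAMES_IN_FLIGHT.
constexpr uint32_t kMaxFramesInFlight = 2;

// Advances to the next slot of imageAvailableSemaphores, renderFinishedSemaphores
// and inFlightFences, wrapping around. Because each slot's fence is waited on
// before the slot is reused, the CPU can run at most kMaxFramesInFlight frames
// ahead of the GPU.
uint32_t nextFrame(uint32_t currentFrame) {
  assert(currentFrame < kMaxFramesInFlight);
  return (currentFrame + 1) % kMaxFramesInFlight;
}
```

With two frames in flight, the index alternates 0, 1, 0, 1. The CPU can record one frame while the GPU is still working on the previous one.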

```cpp
// CODELAB: hellovk.h

void HelloVK::createSwapChain() {
  SwapChainSupportDetails swapChainSupport =
      querySwapChainSupport(physicalDevice);

  // Prefer 8-bit BGRA sRGB; otherwise fall back to the first reported format.
  auto chooseSwapSurfaceFormat =
      [](const std::vector<VkSurfaceFormatKHR> &availableFormats) {
        for (const auto &availableFormat : availableFormats) {
          if (availableFormat.format == VK_FORMAT_B8G8R8A8_SRGB &&
              availableFormat.colorSpace == VK_COLOR_SPACE_SRGB_NONLINEAR_KHR) {
            return availableFormat;
          }
        }
        return availableFormats[0];
      };

  VkSurfaceFormatKHR surfaceFormat =
      chooseSwapSurfaceFormat(swapChainSupport.formats);

  // Please check
  // https://registry.khronos.org/vulkan/specs/1.3-extensions/man/html/VkPresentModeKHR.html
  // for a discussion of the different present modes.
  //
  // VK_PRESENT_MODE_FIFO_KHR = hard vsync.
  // FIFO is the only present mode every Vulkan implementation must support,
  // so it is always available on Android.
  VkPresentModeKHR presentMode = VK_PRESENT_MODE_FIFO_KHR;

  // Request one image more than the minimum, clamped to the maximum
  // (a maxImageCount of 0 means there is no maximum).
  uint32_t imageCount = swapChainSupport.capabilities.minImageCount + 1;
  if (swapChainSupport.capabilities.maxImageCount > 0 &&
      imageCount > swapChainSupport.capabilities.maxImageCount) {
    imageCount = swapChainSupport.capabilities.maxImageCount;
  }

  // Match the surface's current transform (pre-rotation); the app is then
  // responsible for rotating its rendered output.
  pretransformFlag = swapChainSupport.capabilities.currentTransform;

  VkSwapchainCreateInfoKHR createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_SWAPCHAIN_CREATE_INFO_KHR;
  createInfo.surface = surface;
  createInfo.minImageCount = imageCount;
  createInfo.imageFormat = surfaceFormat.format;
  createInfo.imageColorSpace = surfaceFormat.colorSpace;
  createInfo.imageExtent = displaySizeIdentity;
  createInfo.imageArrayLayers = 1;
  createInfo.imageUsage = VK_IMAGE_USAGE_COLOR_ATTACHMENT_BIT;
  createInfo.preTransform = pretransformFlag;

  // Share the images between queue families only when graphics and
  // presentation use different families.
  QueueFamilyIndices indices = findQueueFamilies(physicalDevice);
  uint32_t queueFamilyIndices[] = {indices.graphicsFamily.value(),
                                   indices.presentFamily.value()};
  if (indices.graphicsFamily != indices.presentFamily) {
    createInfo.imageSharingMode = VK_SHARING_MODE_CONCURRENT;
    createInfo.queueFamilyIndexCount = 2;
    createInfo.pQueueFamilyIndices = queueFamilyIndices;
  } else {
    createInfo.imageSharingMode = VK_SHARING_MODE_EXCLUSIVE;
    createInfo.queueFamilyIndexCount = 0;
    createInfo.pQueueFamilyIndices = nullptr;
  }
  createInfo.compositeAlpha = VK_COMPOSITE_ALPHA_INHERIT_BIT_KHR;
  createInfo.presentMode = presentMode;
  createInfo.clipped = VK_TRUE;
  createInfo.oldSwapchain = VK_NULL_HANDLE;

  VK_CHECK(vkCreateSwapchainKHR(device, &createInfo, nullptr, &swapChain));

  // The implementation may create more images than requested.
  vkGetSwapchainImagesKHR(device, swapChain, &imageCount, nullptr);
  swapChainImages.resize(imageCount);
  vkGetSwapchainImagesKHR(device, swapChain, &imageCount,
                          swapChainImages.data());

  swapChainImageFormat = surfaceFormat.format;
  swapChainExtent = displaySizeIdentity;
}
```
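createSwapChain() asks for one image more than the surface minimum. That gives the app an image to render into while others are queued for display. The result is clamped to the surface maximum, where a maxImageCount of 0 means the surface imposes no upper limit. The rule can be pulled out into a small pure function for unit testing, as in the sketch below. chooseImageCount is a hypothetical helper; its parameters correspond to VkSurfaceCapabilitiesKHR::minImageCount and maxImageCount.

```cpp
#include <cassert>
#include <cstdint>

// Same clamping rule as createSwapChain(): min + 1, capped at max unless max
// is 0 (no limit).
uint32_t chooseImageCount(uint32_t minImageCount, uint32_t maxImageCount) {
  assert(minImageCount >= 1);  // surfaces always report at least one image
  uint32_t count = minImageCount + 1;
  if (maxImageCount > 0 && count > maxImageCount) {
    count = maxImageCount;
  }
  return count;
}
```

Because the implementation may still create more images than requested, the code above re-reads the actual count with vkGetSwapchainImagesKHR().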

```cpp
// CODELAB: hellovk.h

SwapChainSupportDetails HelloVK::querySwapChainSupport(
    VkPhysicalDevice device) {
  SwapChainSupportDetails details;

  vkGetPhysicalDeviceSurfaceCapabilitiesKHR(device, surface,
                                            &details.capabilities);

  // Query the count first, then fill an array of that size.
  uint32_t formatCount = 0;
  vkGetPhysicalDeviceSurfaceFormatsKHR(device, surface, &formatCount, nullptr);
  if (formatCount != 0) {
    details.formats.resize(formatCount);
    vkGetPhysicalDeviceSurfaceFormatsKHR(device, surface, &formatCount,
                                         details.formats.data());
  }

  uint32_t presentModeCount = 0;
  vkGetPhysicalDeviceSurfacePresentModesKHR(device, surface, &presentModeCount,
                                            nullptr);
  if (presentModeCount != 0) {
    details.presentModes.resize(presentModeCount);
    vkGetPhysicalDeviceSurfacePresentModesKHR(
        device, surface, &presentModeCount, details.presentModes.data());
  }
  return details;
}
```
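querySwapChainSupport() repeats the same two-call pattern for formats and present modes. It calls once with a null pointer to get the count, then again to fill an array of that size. The template below captures this pattern as a sketch. enumerateAll is a hypothetical helper, not part of Vulkan or the codelab. It ignores VkResult values, so a production version should also handle VK_INCOMPLETE, which some enumeration calls return when the array is too small.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// `enumerate` is any callable taking (uint32_t *count, T *data). Called with
// data == nullptr it reports the count; called with an array it fills it.
template <typename T, typename Fn>
std::vector<T> enumerateAll(Fn enumerate) {
  uint32_t count = 0;
  enumerate(&count, nullptr);
  std::vector<T> items(count);
  if (count > 0) {
    enumerate(&count, items.data());
    items.resize(count);  // the second call may report fewer items
  }
  return items;
}
```

For example, a lambda such as `[&](uint32_t *n, VkSurfaceFormatKHR *p) { vkGetPhysicalDeviceSurfaceFormatsKHR(device, surface, n, p); }` could be passed as `enumerate` with `T = VkSurfaceFormatKHR`.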

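You'll also need to recreate the swapchain when the window changes. For example, when the user switches to another application and back, Android typically destroys the old window and provides a new one. The app then needs a new surface and a new swapchain.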

```cpp
// CODELAB: hellovk.h

void HelloVK::reset(ANativeWindow *newWindow, AAssetManager *newManager) {
  window.reset(newWindow);
  assetManager = newManager;
  // After initial setup, a new window needs a new surface and swapchain.
  if (initialized) {
    createSurface();
    recreateSwapChain();
  }
}

void HelloVK::recreateSwapChain() {
  // Wait until the GPU is no longer using the old swapchain images.
  vkDeviceWaitIdle(device);
  cleanupSwapChain();
  createSwapChain();
}
```

At the end of this step, you will still see only a black window, because setup is not finished yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: create swapchain and sync objects.

5. Create Renderpass and Framebuffer

A VkImageView is a Vulkan object that describes how to access a VkImage. It specifies the subresource range of the image to access, the pixel format to use, and the swizzle to apply to the channels.

A VkRenderPass is a Vulkan object that describes the structure of a rendering operation. It specifies the attachments used, how their contents are loaded and stored, the subpasses that read from and write to them, and the dependencies between those subpasses.

A VkFramebuffer is a Vulkan object that represents a set of image views that will be used as attachments during execution of a render pass. In other words, it binds the actual image attachments to the render pass.

```cpp
// CODELAB: hellovk.h

void HelloVK::createImageViews() {
  swapChainImageViews.resize(swapChainImages.size());
  for (size_t i = 0; i < swapChainImages.size(); i++) {
    // A plain 2D color view of each swapchain image, with no swizzling,
    // a single mip level and a single array layer.
    VkImageViewCreateInfo createInfo{};
    createInfo.sType = VK_STRUCTURE_TYPE_IMAGE_VIEW_CREATE_INFO;
    createInfo.image = swapChainImages[i];
    createInfo.viewType = VK_IMAGE_VIEW_TYPE_2D;
    createInfo.format = swapChainImageFormat;
    createInfo.components.r = VK_COMPONENT_SWIZZLE_IDENTITY;
    createInfo.components.g = VK_COMPONENT_SWIZZLE_IDENTITY;
    createInfo.components.b = VK_COMPONENT_SWIZZLE_IDENTITY;
    createInfo.components.a = VK_COMPONENT_SWIZZLE_IDENTITY;
    createInfo.subresourceRange.aspectMask = VK_IMAGE_ASPECT_COLOR_BIT;
    createInfo.subresourceRange.baseMipLevel = 0;
    createInfo.subresourceRange.levelCount = 1;
    createInfo.subresourceRange.baseArrayLayer = 0;
    createInfo.subresourceRange.layerCount = 1;
    VK_CHECK(vkCreateImageView(device, &createInfo, nullptr,
                               &swapChainImageViews[i]));
  }
}
```

An attachment in Vulkan is what is usually called a render target: an image used as the output of rendering. Only the attachment's format needs to be described here, for example, a specific color format or depth/stencil format. You also need to specify whether the attachment's contents should be preserved, discarded, or cleared at the beginning of the pass.

```cpp
// CODELAB: hellovk.h

void HelloVK::createRenderPass() {
  // One color attachment: cleared at the start of the pass, stored at the
  // end, and left in the layout the presentation engine expects.
  VkAttachmentDescription colorAttachment{};
  colorAttachment.format = swapChainImageFormat;
  colorAttachment.samples = VK_SAMPLE_COUNT_1_BIT;
  colorAttachment.loadOp = VK_ATTACHMENT_LOAD_OP_CLEAR;
  colorAttachment.storeOp = VK_ATTACHMENT_STORE_OP_STORE;
  colorAttachment.stencilLoadOp = VK_ATTACHMENT_LOAD_OP_DONT_CARE;
  colorAttachment.stencilStoreOp = VK_ATTACHMENT_STORE_OP_DONT_CARE;
  colorAttachment.initialLayout = VK_IMAGE_LAYOUT_UNDEFINED;
  colorAttachment.finalLayout = VK_IMAGE_LAYOUT_PRESENT_SRC_KHR;

  VkAttachmentReference colorAttachmentRef{};
  colorAttachmentRef.attachment = 0;
  colorAttachmentRef.layout = VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL;

  VkSubpassDescription subpass{};
  subpass.pipelineBindPoint = VK_PIPELINE_BIND_POINT_GRAPHICS;
  subpass.colorAttachmentCount = 1;
  subpass.pColorAttachments = &colorAttachmentRef;

  // Make color writes in the subpass wait at the color-output stage, where
  // the acquired swapchain image becomes available.
  VkSubpassDependency dependency{};
  dependency.srcSubpass = VK_SUBPASS_EXTERNAL;
  dependency.dstSubpass = 0;
  dependency.srcStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT;
  dependency.srcAccessMask = 0;
  dependency.dstStageMask = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT;
  dependency.dstAccessMask = VK_ACCESS_COLOR_ATTACHMENT_WRITE_BIT;

  VkRenderPassCreateInfo renderPassInfo{};
  renderPassInfo.sType = VK_STRUCTURE_TYPE_RENDER_PASS_CREATE_INFO;
  renderPassInfo.attachmentCount = 1;
  renderPassInfo.pAttachments = &colorAttachment;
  renderPassInfo.subpassCount = 1;
  renderPassInfo.pSubpasses = &subpass;
  renderPassInfo.dependencyCount = 1;
  renderPassInfo.pDependencies = &dependency;

  VK_CHECK(vkCreateRenderPass(device, &renderPassInfo, nullptr, &renderPass));
}
```

A framebuffer links the render pass to the actual images used as its attachments (render targets). Create one framebuffer per swapchain image view by specifying the render pass and the image views to attach.

```cpp
// CODELAB: hellovk.h

void HelloVK::createFramebuffers() {
  swapChainFramebuffers.resize(swapChainImageViews.size());
  for (size_t i = 0; i < swapChainImageViews.size(); i++) {
    // Attachment 0 of the render pass is this swapchain image view.
    VkImageView attachments[] = {swapChainImageViews[i]};

    VkFramebufferCreateInfo framebufferInfo{};
    framebufferInfo.sType = VK_STRUCTURE_TYPE_FRAMEBUFFER_CREATE_INFO;
    framebufferInfo.renderPass = renderPass;
    framebufferInfo.attachmentCount = 1;
    framebufferInfo.pAttachments = attachments;
    framebufferInfo.width = swapChainExtent.width;
    framebufferInfo.height = swapChainExtent.height;
    framebufferInfo.layers = 1;

    VK_CHECK(vkCreateFramebuffer(device, &framebufferInfo, nullptr,
                                 &swapChainFramebuffers[i]));
  }
}
```

At the end of this step, you will still see only a black window, because setup is not finished yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: create renderpass and framebuffer.

6. Create Shader and Pipeline

A VkShaderModule is a Vulkan object that wraps shader code in SPIR-V form. Shaders are programs that run on the GPU at the programmable stages of the pipeline. For example, a vertex shader transforms vertices and a fragment shader computes pixel colors.

A VkPipeline is a Vulkan object that represents a compiled pipeline. A graphics pipeline combines the shader stages with the fixed-function state (vertex input, rasterization, blending, and so on) that describes how the GPU should render a scene.

A VkDescriptorSetLayout is the template for a VkDescriptorSet, which in turn is a group of descriptors. Descriptors are the handles that let shaders access resources such as buffers, images, or samplers.

```cpp
// CODELAB: hellovk.h

void HelloVK::createDescriptorSetLayout() {
  // Binding 0: one uniform buffer, read by the vertex shader.
  VkDescriptorSetLayoutBinding uboLayoutBinding{};
  uboLayoutBinding.binding = 0;
  uboLayoutBinding.descriptorType = VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER;
  uboLayoutBinding.descriptorCount = 1;
  uboLayoutBinding.stageFlags = VK_SHADER_STAGE_VERTEX_BIT;
  uboLayoutBinding.pImmutableSamplers = nullptr;

  VkDescriptorSetLayoutCreateInfo layoutInfo{};
  layoutInfo.sType = VK_STRUCTURE_TYPE_DESCRIPTOR_SET_LAYOUT_CREATE_INFO;
  layoutInfo.bindingCount = 1;
  layoutInfo.pBindings = &uboLayoutBinding;

  VK_CHECK(vkCreateDescriptorSetLayout(device, &layoutInfo, nullptr,
                                       &descriptorSetLayout));
}
```
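The descriptor set layout above declares one uniform buffer at binding 0, read by the vertex stage. On the CPU side, the app needs a struct whose memory layout matches the uniform block in the shader. The sketch below assumes the block holds a single mat4, such as a model-view-projection matrix. UniformBufferObject, mvp and makeIdentity are hypothetical names and may not match what the codelab's shader actually declares. A mat4 occupies 64 bytes in std140 layout, and the static_assert guards against accidental padding.

```cpp
#include <array>
#include <cassert>

// Hypothetical CPU-side mirror of a uniform block containing one mat4.
struct UniformBufferObject {
  std::array<float, 16> mvp;  // 4x4 matrix, column-major as GLSL expects
};

static_assert(sizeof(UniformBufferObject) == 16 * sizeof(float),
              "UBO must match the shader's mat4 layout with no padding");

// Returns a UBO holding the identity matrix (1.0 on the diagonal).
UniformBufferObject makeIdentity() {
  UniformBufferObject ubo{};
  for (int i = 0; i < 4; ++i) {
    ubo.mvp[i * 4 + i] = 1.0f;
  }
  return ubo;
}
```

Each frame, such a struct would be copied into the mapped memory of the uniform buffer bound at binding 0.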

Define the createShaderModule function, which wraps SPIR-V shader code in a VkShaderModule object.

```cpp
// CODELAB: hellovk.h

VkShaderModule HelloVK::createShaderModule(const std::vector<uint8_t> &code) {
  VkShaderModuleCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_SHADER_MODULE_CREATE_INFO;
  createInfo.codeSize = code.size();  // in bytes
  // Satisfies alignment requirements since the allocator
  // in vector ensures worst case requirements
  createInfo.pCode = reinterpret_cast<const uint32_t *>(code.data());

  VkShaderModule shaderModule;
  VK_CHECK(vkCreateShaderModule(device, &createInfo, nullptr, &shaderModule));
  return shaderModule;
}
```
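Before calling vkCreateShaderModule(), a cheap sanity check can confirm that the loaded bytes look like SPIR-V. Vulkan requires codeSize to be a multiple of 4, and every SPIR-V module starts with the magic number 0x07230203. This check gives a clearer error when a shader asset is missing, truncated, or was never compiled. looksLikeSpirv is a hypothetical helper. It reads the first word in host byte order, which assumes a little-endian device; all of Android's supported ABIs are little-endian. It does not validate the module beyond that header word.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// First word of every SPIR-V module.
constexpr uint32_t kSpirvMagic = 0x07230203;

bool looksLikeSpirv(const std::vector<uint8_t> &code) {
  // codeSize must be a non-zero multiple of 4 bytes.
  if (code.size() < sizeof(uint32_t) || code.size() % 4 != 0) {
    return false;
  }
  // memcpy avoids relying on the alignment of code.data().
  uint32_t firstWord = 0;
  std::memcpy(&firstWord, code.data(), sizeof(firstWord));
  return firstWord == kSpirvMagic;
}
```

A failed check could be reported together with the asset path passed to LoadBinaryFileToVector().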

Create the graphics pipeline, loading a simple vertex shader and fragment shader.

// CODELAB: hellovk.h

void HelloVK::createGraphicsPipeline() {
  auto vertShaderCode =
      LoadBinaryFileToVector("shaders/shader.vert.spv", assetManager);
  auto fragShaderCode =
      LoadBinaryFileToVector("shaders/shader.frag.spv", assetManager);

  VkShaderModule vertShaderModule = createShaderModule(vertShaderCode);
  VkShaderModule fragShaderModule = createShaderModule(fragShaderCode);

  VkPipelineShaderStageCreateInfo vertShaderStageInfo{};
  vertShaderStageInfo.sType =
      VK_STRUCTURE_TYPE_PIPELINE_SHADER_STAGE_CREATE_INFO;
  vertShaderStageInfo.stage = VK_SHADER_STAGE_VERTEX_BIT;
  vertShaderStageInfo.module = vertShaderModule;
  vertShaderStageInfo.pName = "main";

  VkPipelineShaderStageCreateInfo fragShaderStageInfo{};
  fragShaderStageInfo.sType =
      VK_STRUCTURE_TYPE_PIPELINE_SHADER_STAGE_CREATE_INFO;
  fragShaderStageInfo.stage = VK_SHADER_STAGE_FRAGMENT_BIT;
  fragShaderStageInfo.module = fragShaderModule;
  fragShaderStageInfo.pName = "main";

  VkPipelineShaderStageCreateInfo shaderStages[] = {vertShaderStageInfo,
                                                    fragShaderStageInfo};

  // No vertex buffers: the vertex shader generates the triangle's vertices.
  VkPipelineVertexInputStateCreateInfo vertexInputInfo{};
  vertexInputInfo.sType =
      VK_STRUCTURE_TYPE_PIPELINE_VERTEX_INPUT_STATE_CREATE_INFO;
  vertexInputInfo.vertexBindingDescriptionCount = 0;
  vertexInputInfo.pVertexBindingDescriptions = nullptr;
  vertexInputInfo.vertexAttributeDescriptionCount = 0;
  vertexInputInfo.pVertexAttributeDescriptions = nullptr;

  VkPipelineInputAssemblyStateCreateInfo inputAssembly{};
  inputAssembly.sType =
      VK_STRUCTURE_TYPE_PIPELINE_INPUT_ASSEMBLY_STATE_CREATE_INFO;
  inputAssembly.topology = VK_PRIMITIVE_TOPOLOGY_TRIANGLE_LIST;
  inputAssembly.primitiveRestartEnable = VK_FALSE;

  // Viewport and scissor are dynamic, so only their counts are given here.
  VkPipelineViewportStateCreateInfo viewportState{};
  viewportState.sType = VK_STRUCTURE_TYPE_PIPELINE_VIEWPORT_STATE_CREATE_INFO;
  viewportState.viewportCount = 1;
  viewportState.scissorCount = 1;

  VkPipelineRasterizationStateCreateInfo rasterizer{};
  rasterizer.sType = VK_STRUCTURE_TYPE_PIPELINE_RASTERIZATION_STATE_CREATE_INFO;
  rasterizer.depthClampEnable = VK_FALSE;
  rasterizer.rasterizerDiscardEnable = VK_FALSE;
  rasterizer.polygonMode = VK_POLYGON_MODE_FILL;
  rasterizer.lineWidth = 1.0f;
  rasterizer.cullMode = VK_CULL_MODE_BACK_BIT;
  rasterizer.frontFace = VK_FRONT_FACE_CLOCKWISE;
  rasterizer.depthBiasEnable = VK_FALSE;
  rasterizer.depthBiasConstantFactor = 0.0f;
  rasterizer.depthBiasClamp = 0.0f;
  rasterizer.depthBiasSlopeFactor = 0.0f;

  VkPipelineMultisampleStateCreateInfo multisampling{};
  multisampling.sType =
      VK_STRUCTURE_TYPE_PIPELINE_MULTISAMPLE_STATE_CREATE_INFO;
  multisampling.sampleShadingEnable = VK_FALSE;
  multisampling.rasterizationSamples = VK_SAMPLE_COUNT_1_BIT;
  multisampling.minSampleShading = 1.0f;
  multisampling.pSampleMask = nullptr;
  multisampling.alphaToCoverageEnable = VK_FALSE;
  multisampling.alphaToOneEnable = VK_FALSE;

  // Write all color channels without blending.
  VkPipelineColorBlendAttachmentState colorBlendAttachment{};
  colorBlendAttachment.colorWriteMask =
      VK_COLOR_COMPONENT_R_BIT | VK_COLOR_COMPONENT_G_BIT |
      VK_COLOR_COMPONENT_B_BIT | VK_COLOR_COMPONENT_A_BIT;
  colorBlendAttachment.blendEnable = VK_FALSE;

  VkPipelineColorBlendStateCreateInfo colorBlending{};
  colorBlending.sType =
      VK_STRUCTURE_TYPE_PIPELINE_COLOR_BLEND_STATE_CREATE_INFO;
  colorBlending.logicOpEnable = VK_FALSE;
  colorBlending.logicOp = VK_LOGIC_OP_COPY;
  colorBlending.attachmentCount = 1;
  colorBlending.pAttachments = &colorBlendAttachment;
  colorBlending.blendConstants[0] = 0.0f;
  colorBlending.blendConstants[1] = 0.0f;
  colorBlending.blendConstants[2] = 0.0f;
  colorBlending.blendConstants[3] = 0.0f;

  // The pipeline layout exposes the descriptor set layout to the shaders.
  VkPipelineLayoutCreateInfo pipelineLayoutInfo{};
  pipelineLayoutInfo.sType = VK_STRUCTURE_TYPE_PIPELINE_LAYOUT_CREATE_INFO;
  pipelineLayoutInfo.setLayoutCount = 1;
  pipelineLayoutInfo.pSetLayouts = &descriptorSetLayout;
  pipelineLayoutInfo.pushConstantRangeCount = 0;
  pipelineLayoutInfo.pPushConstantRanges = nullptr;

  VK_CHECK(vkCreatePipelineLayout(device, &pipelineLayoutInfo, nullptr,
                                  &pipelineLayout));

  std::vector<VkDynamicState> dynamicStateEnables = {VK_DYNAMIC_STATE_VIEWPORT,
                                                     VK_DYNAMIC_STATE_SCISSOR};
  VkPipelineDynamicStateCreateInfo dynamicStateCI{};
  dynamicStateCI.sType = VK_STRUCTURE_TYPE_PIPELINE_DYNAMIC_STATE_CREATE_INFO;
  dynamicStateCI.pDynamicStates = dynamicStateEnables.data();
  dynamicStateCI.dynamicStateCount =
      static_cast<uint32_t>(dynamicStateEnables.size());

  VkGraphicsPipelineCreateInfo pipelineInfo{};
  pipelineInfo.sType = VK_STRUCTURE_TYPE_GRAPHICS_PIPELINE_CREATE_INFO;
  pipelineInfo.stageCount = 2;
  pipelineInfo.pStages = shaderStages;
  pipelineInfo.pVertexInputState = &vertexInputInfo;
  pipelineInfo.pInputAssemblyState = &inputAssembly;
  pipelineInfo.pViewportState = &viewportState;
  pipelineInfo.pRasterizationState = &rasterizer;
  pipelineInfo.pMultisampleState = &multisampling;

pipelineInfo.pDepthStencilState = nullptr;

pipelineInfo.pColorBlendState = &colorBlending;

pipelineInfo.pDynamicState = &dynamicStateCI;

pipelineInfo.layout = pipelineLayout;

pipelineInfo.renderPass = renderPass;

pipelineInfo.subpass = 0;

pipelineInfo.basePipelineHandle = VK_NULL_HANDLE;

pipelineInfo.basePipelineIndex = -1;

VK_CHECK(vkCreateGraphicsPipelines(device, VK_NULL_HANDLE, 1, &pipelineInfo,

nullptr, &graphicsPipeline));

vkDestroyShaderModule(device, fragShaderModule, nullptr);

vkDestroyShaderModule(device, vertShaderModule, nullptr);

}

At the end of this step, you will still see only a black window, because the setup is not complete yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: create shader and pipeline.

7. DescriptorSet and Uniform Buffer

A VkDescriptorSet is a Vulkan object that represents a collection of descriptors. Descriptors give shaders access to resources such as uniform buffers, sampled images, and storage buffers. Descriptor sets are allocated from a VkDescriptorPool, so create the pool first. The pool below holds one uniform-buffer descriptor for each frame in flight.

A VkBuffer is a linear region of memory that the GPU can read or write; when its memory is host-visible, the CPU can also write data into it for the GPU to read. When used as a uniform buffer, it supplies the values of a shader's uniform block. Uniform values are read-only from the shader's point of view and are the same for every vertex and fragment processed by a draw call.

```cpp
// CODELAB: hellovk.h
void HelloVK::createDescriptorPool() {
  VkDescriptorPoolSize poolSize{};
  poolSize.type = VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER;
  poolSize.descriptorCount = static_cast<uint32_t>(MAX_FRAMES_IN_FLIGHT);

  VkDescriptorPoolCreateInfo poolInfo{};
  poolInfo.sType = VK_STRUCTURE_TYPE_DESCRIPTOR_POOL_CREATE_INFO;
  poolInfo.poolSizeCount = 1;
  poolInfo.pPoolSizes = &poolSize;
  poolInfo.maxSets = static_cast<uint32_t>(MAX_FRAMES_IN_FLIGHT);

  VK_CHECK(vkCreateDescriptorPool(device, &poolInfo, nullptr, &descriptorPool));
}
```
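A pool must hold enough descriptors of each type for every set allocated from it: for each descriptor type, multiply the number of descriptors of that type in one set by the number of sets. The sketch below shows that arithmetic without Vulkan types; `DescriptorKind`, `Binding`, and `poolSizesFor` are hypothetical names, not Vulkan API. With one uniform buffer per set and `MAX_FRAMES_IN_FLIGHT` sets it reproduces the `descriptorCount` used above, and a second binding type (such as the texture sampler in step 10) adds a second pool size.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical stand-in for VkDescriptorType, limited to the kinds used here.
enum class DescriptorKind { UniformBuffer, CombinedImageSampler };

// One binding of a descriptor set layout (the idea behind
// VkDescriptorSetLayoutBinding, without the Vulkan types).
struct Binding {
  uint32_t binding;
  DescriptorKind kind;
  uint32_t count;  // descriptorCount of the binding (array size)
};

// Per descriptor kind, how many descriptors a pool needs so that `setCount`
// sets with the given layout can be allocated from it.
std::map<DescriptorKind, uint32_t> poolSizesFor(
    const std::vector<Binding>& layout, uint32_t setCount) {
  std::map<DescriptorKind, uint32_t> sizes;
  for (const Binding& b : layout) {
    sizes[b.kind] += b.count * setCount;
  }
  return sizes;
}
```

The pool's `maxSets` stays equal to `setCount`; only the per-type counts grow as bindings are added.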

Next, allocate one VkDescriptorSet per frame in flight from that pool, and point binding 0 of each set at the matching uniform buffer with vkUpdateDescriptorSets.

```cpp
// CODELAB: hellovk.h
void HelloVK::createDescriptorSets() {
  std::vector<VkDescriptorSetLayout> layouts(MAX_FRAMES_IN_FLIGHT,
                                             descriptorSetLayout);
  VkDescriptorSetAllocateInfo allocInfo{};
  allocInfo.sType = VK_STRUCTURE_TYPE_DESCRIPTOR_SET_ALLOCATE_INFO;
  allocInfo.descriptorPool = descriptorPool;
  allocInfo.descriptorSetCount = static_cast<uint32_t>(MAX_FRAMES_IN_FLIGHT);
  allocInfo.pSetLayouts = layouts.data();

  descriptorSets.resize(MAX_FRAMES_IN_FLIGHT);
  VK_CHECK(vkAllocateDescriptorSets(device, &allocInfo, descriptorSets.data()));

  for (size_t i = 0; i < MAX_FRAMES_IN_FLIGHT; i++) {
    VkDescriptorBufferInfo bufferInfo{};
    bufferInfo.buffer = uniformBuffers[i];
    bufferInfo.offset = 0;
    bufferInfo.range = sizeof(UniformBufferObject);

    VkWriteDescriptorSet descriptorWrite{};
    descriptorWrite.sType = VK_STRUCTURE_TYPE_WRITE_DESCRIPTOR_SET;
    descriptorWrite.dstSet = descriptorSets[i];
    descriptorWrite.dstBinding = 0;
    descriptorWrite.dstArrayElement = 0;
    descriptorWrite.descriptorType = VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER;
    descriptorWrite.descriptorCount = 1;
    descriptorWrite.pBufferInfo = &bufferInfo;

    vkUpdateDescriptorSets(device, 1, &descriptorWrite, 0, nullptr);
  }
}
```

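Define the UniformBufferObject struct and create one uniform buffer per frame in flight. The createBuffer helper creates each VkBuffer, allocates a VkDeviceMemory block for it with vkAllocateMemory, and binds the two together with vkBindBufferMemory. The memory is host-visible and host-coherent, so the CPU can write to it through a mapped pointer without explicitly flushing.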

```cpp
// CODELAB: hellovk.h
struct UniformBufferObject {
  std::array<float, 16> mvp;
};

void HelloVK::createUniformBuffers() {
  VkDeviceSize bufferSize = sizeof(UniformBufferObject);
  uniformBuffers.resize(MAX_FRAMES_IN_FLIGHT);
  uniformBuffersMemory.resize(MAX_FRAMES_IN_FLIGHT);

  for (size_t i = 0; i < MAX_FRAMES_IN_FLIGHT; i++) {
    createBuffer(bufferSize, VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT,
                 VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT |
                     VK_MEMORY_PROPERTY_HOST_COHERENT_BIT,
                 uniformBuffers[i], uniformBuffersMemory[i]);
  }
}
```
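The C++ struct has to match the GLSL uniform block byte for byte. A `mat4` in a std140 block is 16 column-major floats (64 bytes), which is what `std::array<float, 16>` provides. If you instead packed every frame's uniforms into one buffer, each frame's offset would have to be a multiple of `VkPhysicalDeviceLimits::minUniformBufferOffsetAlignment`, which Vulkan requires to be a power of two. The `alignUp` and `frameOffset` helpers below are a hypothetical sketch of that rounding; the 256-byte alignment in the test is only a typical example value, not something this codelab queries.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct UniformBufferObject {
  std::array<float, 16> mvp;  // column-major 4x4 matrix, matches GLSL mat4
};

// A std140 mat4 occupies 64 bytes; catch accidental padding or type changes.
static_assert(sizeof(UniformBufferObject) == 64, "UBO must match std140 mat4");

// Rounds `size` up to the next multiple of `alignment` (a power of two).
uint64_t alignUp(uint64_t size, uint64_t alignment) {
  return (size + alignment - 1) & ~(alignment - 1);
}

// Offset of frame `frameIndex` inside one buffer that holds all frames.
uint64_t frameOffset(uint32_t frameIndex, uint64_t alignment) {
  return frameIndex * alignUp(sizeof(UniformBufferObject), alignment);
}
```

Keeping one buffer per frame, as the codelab does, sidesteps the alignment question entirely, at the cost of more allocations.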

```cpp
// CODELAB: hellovk.h
void HelloVK::createBuffer(VkDeviceSize size, VkBufferUsageFlags usage,
                           VkMemoryPropertyFlags properties, VkBuffer &buffer,
                           VkDeviceMemory &bufferMemory) {
  VkBufferCreateInfo bufferInfo{};
  bufferInfo.sType = VK_STRUCTURE_TYPE_BUFFER_CREATE_INFO;
  bufferInfo.size = size;
  bufferInfo.usage = usage;
  bufferInfo.sharingMode = VK_SHARING_MODE_EXCLUSIVE;
  VK_CHECK(vkCreateBuffer(device, &bufferInfo, nullptr, &buffer));

  VkMemoryRequirements memRequirements;
  vkGetBufferMemoryRequirements(device, buffer, &memRequirements);

  VkMemoryAllocateInfo allocInfo{};
  allocInfo.sType = VK_STRUCTURE_TYPE_MEMORY_ALLOCATE_INFO;
  allocInfo.allocationSize = memRequirements.size;
  allocInfo.memoryTypeIndex =
      findMemoryType(memRequirements.memoryTypeBits, properties);

  VK_CHECK(vkAllocateMemory(device, &allocInfo, nullptr, &bufferMemory));
  vkBindBufferMemory(device, buffer, bufferMemory, 0);
}
```

The following helper picks a memory type that is allowed for the resource and has all of the requested property flags.

```cpp
// CODELAB: hellovk.h
/*
 * Finds the index of a memory type that matches a particular buffer's memory
 * requirements. typeFilter is a bitmask (VkMemoryRequirements::memoryTypeBits)
 * in which bit i is set if memory type i may be used for the resource.
 */
uint32_t HelloVK::findMemoryType(uint32_t typeFilter,
                                 VkMemoryPropertyFlags properties) {
  VkPhysicalDeviceMemoryProperties memProperties;
  vkGetPhysicalDeviceMemoryProperties(physicalDevice, &memProperties);

  for (uint32_t i = 0; i < memProperties.memoryTypeCount; i++) {
    if ((typeFilter & (1u << i)) &&
        (memProperties.memoryTypes[i].propertyFlags & properties) ==
            properties) {
      return i;
    }
  }

  assert(false);  // failed to find a suitable memory type!
  return UINT32_MAX;  // invalid index
}
```
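To see the bit logic in isolation, here is a self-contained sketch that replaces `VkPhysicalDeviceMemoryProperties` with a plain vector of property flags. The constants reuse the numeric values of `VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT` (0x1), `VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT` (0x2), and `VK_MEMORY_PROPERTY_HOST_COHERENT_BIT` (0x4); `findMemoryTypeIndex` is a hypothetical name. Returning `std::optional` instead of asserting is a design choice that makes the failure case explicit to the caller; it is not what the codelab does.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Same numeric values as the corresponding VkMemoryPropertyFlagBits.
constexpr uint32_t kDeviceLocal = 0x1;
constexpr uint32_t kHostVisible = 0x2;
constexpr uint32_t kHostCoherent = 0x4;

// memoryTypeFlags[i] plays the role of memProperties.memoryTypes[i].propertyFlags.
// typeFilter plays the role of VkMemoryRequirements::memoryTypeBits.
std::optional<uint32_t> findMemoryTypeIndex(
    const std::vector<uint32_t>& memoryTypeFlags, uint32_t typeFilter,
    uint32_t required) {
  for (uint32_t i = 0; i < memoryTypeFlags.size(); i++) {
    const bool allowed = (typeFilter & (1u << i)) != 0;
    const bool hasAll = (memoryTypeFlags[i] & required) == required;
    if (allowed && hasAll) {
      return i;
    }
  }
  return std::nullopt;  // no type satisfies both constraints
}
```

Note that the first matching index wins, so the order in which the driver lists memory types matters.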

At the end of this step, you will still see only a black window, because the setup is not complete yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: descriptorset and uniform buffer.

8. Command Buffer - Create, Record and Draw

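A VkCommandPool manages the memory used by command buffers, which are allocated from it. Each pool is created for a single queue family, and command buffers allocated from it can only be submitted to queues of that family. The pool below sets VK_COMMAND_POOL_CREATE_RESET_COMMAND_BUFFER_BIT so that each command buffer can be reset and re-recorded individually every frame.

A VkCommandBuffer holds a recorded list of commands, such as render pass operations, pipeline binds, and draws, that the GPU executes after the buffer is submitted to a queue. Recording these commands explicitly gives the application fine-grained control over the work the GPU performs.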

```cpp
// CODELAB: hellovk.h
void HelloVK::createCommandPool() {
  QueueFamilyIndices queueFamilyIndices = findQueueFamilies(physicalDevice);
  VkCommandPoolCreateInfo poolInfo{};
  poolInfo.sType = VK_STRUCTURE_TYPE_COMMAND_POOL_CREATE_INFO;
  poolInfo.flags = VK_COMMAND_POOL_CREATE_RESET_COMMAND_BUFFER_BIT;
  poolInfo.queueFamilyIndex = queueFamilyIndices.graphicsFamily.value();
  VK_CHECK(vkCreateCommandPool(device, &poolInfo, nullptr, &commandPool));
}
```

```cpp
// CODELAB: hellovk.h
void HelloVK::createCommandBuffer() {
  // One primary command buffer per frame in flight.
  commandBuffers.resize(MAX_FRAMES_IN_FLIGHT);
  VkCommandBufferAllocateInfo allocInfo{};
  allocInfo.sType = VK_STRUCTURE_TYPE_COMMAND_BUFFER_ALLOCATE_INFO;
  allocInfo.commandPool = commandPool;
  allocInfo.level = VK_COMMAND_BUFFER_LEVEL_PRIMARY;
  allocInfo.commandBufferCount = static_cast<uint32_t>(commandBuffers.size());
  VK_CHECK(vkAllocateCommandBuffers(device, &allocInfo, commandBuffers.data()));
}
```

At the end of this step, you will still see only a black window, because the setup is not complete yet. If anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: create command pool and command buffer.

Update uniform buffer, record command buffer & draw

Commands in Vulkan, such as drawing operations and memory transfers, are not executed when you call the corresponding functions. Instead, they are recorded into command buffer objects and executed only when a command buffer is submitted to a queue. Because the driver receives the whole batch at once, it can process the commands more efficiently, and recording can be spread across multiple threads if desired.

In this codelab, all drawing happens inside a render pass. The render pass instance begun in recordCommandBuffer renders into the swap chain framebuffer set up previously.

```cpp
// CODELAB: hellovk.h
void HelloVK::recordCommandBuffer(VkCommandBuffer commandBuffer,
                                  uint32_t imageIndex) {
  VkCommandBufferBeginInfo beginInfo{};
  beginInfo.sType = VK_STRUCTURE_TYPE_COMMAND_BUFFER_BEGIN_INFO;
  beginInfo.flags = 0;
  beginInfo.pInheritanceInfo = nullptr;
  VK_CHECK(vkBeginCommandBuffer(commandBuffer, &beginInfo));

  VkRenderPassBeginInfo renderPassInfo{};
  renderPassInfo.sType = VK_STRUCTURE_TYPE_RENDER_PASS_BEGIN_INFO;
  renderPassInfo.renderPass = renderPass;
  renderPassInfo.framebuffer = swapChainFramebuffers[imageIndex];
  renderPassInfo.renderArea.offset = {0, 0};
  renderPassInfo.renderArea.extent = swapChainExtent;

  VkViewport viewport{};
  viewport.width = (float)swapChainExtent.width;
  viewport.height = (float)swapChainExtent.height;
  viewport.minDepth = 0.0f;
  viewport.maxDepth = 1.0f;
  vkCmdSetViewport(commandBuffer, 0, 1, &viewport);

  VkRect2D scissor{};
  scissor.extent = swapChainExtent;
  vkCmdSetScissor(commandBuffer, 0, 1, &scissor);

  static float grey;
  grey += 0.005f;
  if (grey > 1.0f) {
    grey = 0.0f;
  }
  VkClearValue clearColor = {grey, grey, grey, 1.0f};
  renderPassInfo.clearValueCount = 1;
  renderPassInfo.pClearValues = &clearColor;

  vkCmdBeginRenderPass(commandBuffer, &renderPassInfo,
                       VK_SUBPASS_CONTENTS_INLINE);
  vkCmdBindPipeline(commandBuffer, VK_PIPELINE_BIND_POINT_GRAPHICS,
                    graphicsPipeline);
  vkCmdBindDescriptorSets(commandBuffer, VK_PIPELINE_BIND_POINT_GRAPHICS,
                          pipelineLayout, 0, 1, &descriptorSets[currentFrame],
                          0, nullptr);
  vkCmdDraw(commandBuffer, 3, 1, 0, 0);
  vkCmdEndRenderPass(commandBuffer);
  VK_CHECK(vkEndCommandBuffer(commandBuffer));
}
```

Next, update the uniform buffer. It holds the single transformation matrix that is applied to every vertex, so writing it once per frame, before the command buffer is submitted, is enough.

```cpp
// CODELAB: hellovk.h
void HelloVK::updateUniformBuffer(uint32_t currentImage) {
  SwapChainSupportDetails swapChainSupport =
      querySwapChainSupport(physicalDevice);
  UniformBufferObject ubo{};
  getPrerotationMatrix(swapChainSupport.capabilities, pretransformFlag,
                       ubo.mvp);
  void *data;
  vkMapMemory(device, uniformBuffersMemory[currentImage], 0, sizeof(ubo), 0,
              &data);
  memcpy(data, &ubo, sizeof(ubo));
  vkUnmapMemory(device, uniformBuffersMemory[currentImage]);
}
```

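Now it is time to render. Each frame, render() waits for the frame's fence, acquires a swap chain image, updates the uniform buffer, records the command buffer, submits it to the graphics queue, and presents the image.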

```cpp
// CODELAB: hellovk.h
void HelloVK::render() {
  if (orientationChanged) {
    onOrientationChange();
  }

  vkWaitForFences(device, 1, &inFlightFences[currentFrame], VK_TRUE,
                  UINT64_MAX);
  uint32_t imageIndex;
  VkResult result = vkAcquireNextImageKHR(
      device, swapChain, UINT64_MAX, imageAvailableSemaphores[currentFrame],
      VK_NULL_HANDLE, &imageIndex);
  if (result == VK_ERROR_OUT_OF_DATE_KHR) {
    recreateSwapChain();
    return;
  }
  assert(result == VK_SUCCESS ||
         result == VK_SUBOPTIMAL_KHR);  // failed to acquire swap chain image

  updateUniformBuffer(currentFrame);

  vkResetFences(device, 1, &inFlightFences[currentFrame]);
  vkResetCommandBuffer(commandBuffers[currentFrame], 0);
  recordCommandBuffer(commandBuffers[currentFrame], imageIndex);

  VkSubmitInfo submitInfo{};
  submitInfo.sType = VK_STRUCTURE_TYPE_SUBMIT_INFO;
  VkSemaphore waitSemaphores[] = {imageAvailableSemaphores[currentFrame]};
  VkPipelineStageFlags waitStages[] = {
      VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT};
  submitInfo.waitSemaphoreCount = 1;
  submitInfo.pWaitSemaphores = waitSemaphores;
  submitInfo.pWaitDstStageMask = waitStages;
  submitInfo.commandBufferCount = 1;
  submitInfo.pCommandBuffers = &commandBuffers[currentFrame];
  VkSemaphore signalSemaphores[] = {renderFinishedSemaphores[currentFrame]};
  submitInfo.signalSemaphoreCount = 1;
  submitInfo.pSignalSemaphores = signalSemaphores;
  VK_CHECK(vkQueueSubmit(graphicsQueue, 1, &submitInfo,
                         inFlightFences[currentFrame]));

  VkPresentInfoKHR presentInfo{};
  presentInfo.sType = VK_STRUCTURE_TYPE_PRESENT_INFO_KHR;
  presentInfo.waitSemaphoreCount = 1;
  presentInfo.pWaitSemaphores = signalSemaphores;
  VkSwapchainKHR swapChains[] = {swapChain};
  presentInfo.swapchainCount = 1;
  presentInfo.pSwapchains = swapChains;
  presentInfo.pImageIndices = &imageIndex;
  presentInfo.pResults = nullptr;

  result = vkQueuePresentKHR(presentQueue, &presentInfo);
  if (result == VK_SUBOPTIMAL_KHR) {
    orientationChanged = true;
  } else if (result == VK_ERROR_OUT_OF_DATE_KHR) {
    recreateSwapChain();
  } else {
    assert(result == VK_SUCCESS);  // failed to present swap chain image!
  }
  currentFrame = (currentFrame + 1) % MAX_FRAMES_IN_FLIGHT;
}
```
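The `currentFrame` counter cycles through `MAX_FRAMES_IN_FLIGHT` slots, so the CPU can record the next frame while the GPU is still working on the previous one, but never runs further ahead than that. The plain C++ sketch below models only that bookkeeping: the slot a frame uses and which earlier frame the slot's fence wait blocks on. It assumes the fences are created with `VK_FENCE_CREATE_SIGNALED_BIT` (common practice, but not shown in this step), so the very first waits return immediately. `kMaxFramesInFlight`, `slotForFrame`, and `frameWaitedOn` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

constexpr uint32_t kMaxFramesInFlight = 2;  // mirrors MAX_FRAMES_IN_FLIGHT

// Slot (index into commandBuffers, inFlightFences, uniformBuffers, ...)
// used by the frame with the given sequence number.
uint32_t slotForFrame(uint64_t frameNumber) {
  return static_cast<uint32_t>(frameNumber % kMaxFramesInFlight);
}

// The vkWaitForFences call at the top of a frame blocks until the previous
// frame that used the same slot has finished on the GPU. Returns that frame's
// number, or nullopt during the first pass through the slots (assuming the
// fences start out signaled).
std::optional<uint64_t> frameWaitedOn(uint64_t frameNumber) {
  if (frameNumber < kMaxFramesInFlight) {
    return std::nullopt;
  }
  return frameNumber - kMaxFramesInFlight;
}
```

This is also why per-frame resources such as the uniform buffers are indexed by `currentFrame` rather than by the acquired `imageIndex`: only the slot's fence guarantees that the GPU has stopped reading them.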

Handle orientation changes by recreating the swap chain.

```cpp
// CODELAB: hellovk.h
void HelloVK::onOrientationChange() {
  recreateSwapChain();
  orientationChanged = false;
}
```

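Finally, integrate the renderer into the application lifecycle: HandleCmd reacts to window and activity events, and android_main runs the event loop and renders frames.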

```cpp
// CODELAB: vk_main.cpp
/**
 * Called by the Android runtime whenever events happen so the
 * app can react to them.
 */
static void HandleCmd(struct android_app *app, int32_t cmd) {
  auto *engine = (VulkanEngine *)app->userData;
  switch (cmd) {
    case APP_CMD_START:
      if (engine->app->window != nullptr) {
        engine->app_backend->reset(app->window, app->activity->assetManager);
        // Initialize Vulkan only once, as in APP_CMD_INIT_WINDOW.
        if (!engine->app_backend->initialized) {
          engine->app_backend->initVulkan();
        }
        engine->canRender = true;
      }
      break;
    case APP_CMD_INIT_WINDOW:
      // The window is being shown, get it ready.
      LOGI("Called - APP_CMD_INIT_WINDOW");
      if (engine->app->window != nullptr) {
        LOGI("Setting a new surface");
        engine->app_backend->reset(app->window, app->activity->assetManager);
        if (!engine->app_backend->initialized) {
          LOGI("Starting application");
          engine->app_backend->initVulkan();
        }
        engine->canRender = true;
      }
      break;
    case APP_CMD_TERM_WINDOW:
      // The window is being hidden or closed; stop rendering to it.
      engine->canRender = false;
      break;
    case APP_CMD_DESTROY:
      // The activity is being destroyed; release the Vulkan resources.
      LOGI("Destroying");
      engine->app_backend->cleanup();
      break;
    default:
      break;
  }
}

/*
 * Entry point required by the Android Glue library.
 * This can also be achieved more verbosely by manually declaring JNI functions
 * and calling them from the Android application layer.
 */
void android_main(struct android_app *state) {
  VulkanEngine engine{};
  vkt::HelloVK vulkanBackend{};
  engine.app = state;
  engine.app_backend = &vulkanBackend;
  state->userData = &engine;
  state->onAppCmd = HandleCmd;
  android_app_set_key_event_filter(state, VulkanKeyEventFilter);
  android_app_set_motion_event_filter(state, VulkanMotionEventFilter);

  while (true) {
    int ident;
    int events;
    android_poll_source *source;
    // Block while nothing can be rendered; otherwise poll without waiting.
    while ((ident = ALooper_pollAll(engine.canRender ? 0 : -1, nullptr, &events,
                                    (void **)&source)) >= 0) {
      if (source != nullptr) {
        source->process(state, source);
      }
      // Leave android_main once the activity is destroyed so the glue can exit.
      if (state->destroyRequested != 0) {
        return;
      }
    }
    HandleInputEvents(state);
    // Only render while a window is available.
    if (engine.canRender) {
      engine.app_backend->render();
    }
  }
}
```
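The intended lifecycle (initialize Vulkan once, only reset the surface when a new window arrives, and stop rendering as soon as the window goes away) can be checked without a device. The sketch below is a minimal state machine that counts how often `reset` and `initVulkan` would run; the `Cmd` enum and the `Lifecycle` struct are hypothetical stand-ins for the `APP_CMD_*` constants and `VulkanEngine`, and it deliberately ignores input handling and the actual Vulkan calls.

```cpp
#include <cassert>

// Hypothetical stand-ins for APP_CMD_START, APP_CMD_INIT_WINDOW, etc.
enum class Cmd { Start, InitWindow, TermWindow, Destroy };

struct Lifecycle {
  bool hasWindow = false;
  bool initialized = false;  // initVulkan() has run
  bool canRender = false;
  int initCount = 0;         // how many times initVulkan() would run
  int resetCount = 0;        // how many times reset(window, ...) would run

  void onWindowAvailable() {
    ++resetCount;            // reset(app->window, assetManager)
    if (!initialized) {      // initVulkan() only once
      ++initCount;
      initialized = true;
    }
    canRender = true;
  }

  void handle(Cmd cmd) {
    switch (cmd) {
      case Cmd::Start:
        if (hasWindow) onWindowAvailable();
        break;
      case Cmd::InitWindow:
        hasWindow = true;
        onWindowAvailable();
        break;
      case Cmd::TermWindow:
        hasWindow = false;
        canRender = false;
        break;
      case Cmd::Destroy:
        canRender = false;
        initialized = false;  // cleanup() released everything
        break;
    }
  }
};
```

A window that is destroyed and recreated (for example, when the app goes to the background and returns) should trigger a second `reset` but not a second `initVulkan`.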

At the end of this step, you will finally see a colored triangle on the screen!

Check that this is the case, and if anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: update uniform buffer, record command buffer and draw.

9. Rotate triangle

To rotate the triangle, apply the rotation to the MVP matrix on the CPU before passing the matrix to the shader. Computing the matrix once per frame avoids recomputing the same matrix for every vertex in the shader.

To build the matrix on the application side, this step uses GLM (OpenGL Mathematics), a header-only C++ mathematics library modeled on the GLSL specification; its glm::rotate function returns a matrix with a rotation applied. Because getPrerotationMatrix now writes a glm::mat4 and takes the screen aspect ratio as an extra argument, update the call in updateUniformBuffer to match, passing for example (float)swapChainExtent.width / (float)swapChainExtent.height as the ratio.

```cpp
// CODELAB: hellovk.h
// Additional includes to make our lives easier than composing
// transformation matrices manually
#include <glm/glm.hpp>
#include <glm/gtc/matrix_transform.hpp>
#include <glm/gtc/type_ptr.hpp>

// Change the UniformBufferObject struct to use glm::mat4
struct UniformBufferObject {
  glm::mat4 mvp;
};
```

```cpp
// CODELAB: hellovk.h
/*
 * getPrerotationMatrix builds the MVP matrix used by the vertex shader:
 * it corrects for the screen aspect ratio and rotates the triangle by one
 * more degree on every call. In this version, the capabilities and
 * pretransformFlag arguments are not used by the body.
 */
void getPrerotationMatrix(const VkSurfaceCapabilitiesKHR &capabilities,
                          const VkSurfaceTransformFlagBitsKHR &pretransformFlag,
                          glm::mat4 &mat, float ratio) {
  // mat is initialized to the identity matrix
  mat = glm::mat4(1.0f);
  // scale by screen ratio
  mat = glm::scale(mat, glm::vec3(1.0f, ratio, 1.0f));
  // rotate 1 degree every function call.
  static float currentAngleDegrees = 0.0f;
  currentAngleDegrees += 1.0f;
  if (currentAngleDegrees >= 360.0f) {
    currentAngleDegrees = 0.0f;
  }
  mat = glm::rotate(mat, glm::radians(currentAngleDegrees),
                    glm::vec3(0.0f, 0.0f, 1.0f));
}
```
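To check what this matrix does to a vertex, the sketch below builds the same scale-times-rotation product by hand, stored column-major as in GLM and GLSL, and applies it the way the vertex shader's `ubo.MVP * vec4(...)` does. It avoids GLM so it can run on its own; `makeRotateScale` and `transform` are hypothetical helpers, and only the z-axis rotation used in `getPrerotationMatrix` is covered.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<float, 16>;  // column-major: m[col * 4 + row]
using Vec4 = std::array<float, 4>;

// Same product as glm::rotate(glm::scale(I, {1, ratio, 1}), angle, {0, 0, 1}),
// i.e. M = S * R: a vertex is rotated first, then scaled along y.
Mat4 makeRotateScale(float angleRadians, float ratio) {
  const float c = std::cos(angleRadians);
  const float s = std::sin(angleRadians);
  Mat4 m = {
      c,    ratio * s, 0.0f, 0.0f,   // column 0
      -s,   ratio * c, 0.0f, 0.0f,   // column 1
      0.0f, 0.0f,      1.0f, 0.0f,   // column 2
      0.0f, 0.0f,      0.0f, 1.0f};  // column 3
  return m;
}

// Computes M * v.
Vec4 transform(const Mat4& m, const Vec4& v) {
  Vec4 out = {0.0f, 0.0f, 0.0f, 0.0f};
  for (int row = 0; row < 4; ++row) {
    for (int col = 0; col < 4; ++col) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}
```

For example, rotating (1, 0) by 90 degrees gives (0, 1), and a ratio of 0.5 (a portrait screen twice as tall as it is wide) then halves the y coordinate, so the vertex lands at (0, 0.5) in clip space and appears at the correct on-screen distance.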

At the end of this step, you will see that your triangle is rotating on the screen! Check that this is the case, and if anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: rotate triangle.

10. Apply Texture

To texture the triangle, the image file must first be decoded into uncompressed pixel data in memory. This step uses the stb_image library to decode the image into CPU memory, and then copies the pixels into a host-visible staging VkBuffer.

```cpp
// CODELAB: hellovk.h
void HelloVK::decodeImage() {
  std::vector<uint8_t> imageData = LoadBinaryFileToVector("texture.png",
                                                          assetManager);
  if (imageData.size() == 0) {
    LOGE("Fail to load image.");
    return;
  }

  // Ask stb_image for exactly 3 channels so the decoded data always matches
  // the VK_FORMAT_R8G8B8_UNORM format of the texture image, even if the PNG
  // has an alpha channel. textureChannels still receives the file's count.
  constexpr int kDesiredChannels = STBI_rgb;
  unsigned char *decodedData = stbi_load_from_memory(
      imageData.data(), static_cast<int>(imageData.size()), &textureWidth,
      &textureHeight, &textureChannels, kDesiredChannels);
  if (decodedData == nullptr) {
    LOGE("Fail to load image to memory, %s", stbi_failure_reason());
    return;
  }
  size_t imageSize =
      static_cast<size_t>(textureWidth) * textureHeight * kDesiredChannels;

  // Host-visible staging buffer that the copy to the VkImage reads from.
  VkBufferCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_BUFFER_CREATE_INFO;
  createInfo.size = imageSize;
  createInfo.usage = VK_BUFFER_USAGE_TRANSFER_SRC_BIT;
  createInfo.sharingMode = VK_SHARING_MODE_EXCLUSIVE;
  VK_CHECK(vkCreateBuffer(device, &createInfo, nullptr, &stagingBuffer));

  VkMemoryRequirements memRequirements;
  vkGetBufferMemoryRequirements(device, stagingBuffer, &memRequirements);
  VkMemoryAllocateInfo allocInfo{};
  allocInfo.sType = VK_STRUCTURE_TYPE_MEMORY_ALLOCATE_INFO;
  allocInfo.allocationSize = memRequirements.size;
  allocInfo.memoryTypeIndex = findMemoryType(memRequirements.memoryTypeBits,
      VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT | VK_MEMORY_PROPERTY_HOST_COHERENT_BIT);
  VK_CHECK(vkAllocateMemory(device, &allocInfo, nullptr, &stagingMemory));
  VK_CHECK(vkBindBufferMemory(device, stagingBuffer, stagingMemory, 0));

  uint8_t *data;
  VK_CHECK(vkMapMemory(device, stagingMemory, 0, memRequirements.size, 0,
                       (void **)&data));
  memcpy(data, decodedData, imageSize);
  vkUnmapMemory(device, stagingMemory);
  stbi_image_free(decodedData);
}
```
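VK_FORMAT_R8G8B8_UNORM is not among the formats the Vulkan specification requires to support sampling with optimal tiling, so some devices may not accept it for this texture; four-channel VK_FORMAT_R8G8B8A8_UNORM is the portable choice. stb_image can produce four channels itself if you pass STBI_rgb_alpha as the last argument. The hypothetical helper below shows the equivalent manual conversion of tightly packed RGB pixels, filling alpha with 255 (opaque). If you switch, the staging size becomes width × height × 4 and the image and image view must both use VK_FORMAT_R8G8B8A8_UNORM.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Expands tightly packed 8-bit RGB pixels to RGBA with an opaque alpha.
std::vector<uint8_t> expandRgbToRgba(const uint8_t* rgb, size_t pixelCount) {
  std::vector<uint8_t> rgba(pixelCount * 4);
  for (size_t i = 0; i < pixelCount; ++i) {
    rgba[i * 4 + 0] = rgb[i * 3 + 0];
    rgba[i * 4 + 1] = rgb[i * 3 + 1];
    rgba[i * 4 + 2] = rgb[i * 3 + 2];
    rgba[i * 4 + 3] = 255;  // opaque
  }
  return rgba;
}
```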

Next, create a VkImage for the texture and copy the image data from the staging VkBuffer into it.

A VkImage holds the actual texel data, in the device memory bound to it, but contains little information about how shaders should read that data. That is why a VkImageView is created in the next section.

```cpp
// CODELAB: hellovk.h
void HelloVK::createTextureImage() {
  VkImageCreateInfo imageInfo{};
  imageInfo.sType = VK_STRUCTURE_TYPE_IMAGE_CREATE_INFO;
  imageInfo.imageType = VK_IMAGE_TYPE_2D;
  imageInfo.extent.width = textureWidth;
  imageInfo.extent.height = textureHeight;
  imageInfo.extent.depth = 1;
  imageInfo.mipLevels = 1;
  imageInfo.arrayLayers = 1;
  imageInfo.format = VK_FORMAT_R8G8B8_UNORM;
  imageInfo.tiling = VK_IMAGE_TILING_OPTIMAL;
  imageInfo.initialLayout = VK_IMAGE_LAYOUT_UNDEFINED;
  imageInfo.usage =
      VK_IMAGE_USAGE_TRANSFER_DST_BIT | VK_IMAGE_USAGE_SAMPLED_BIT;
  imageInfo.samples = VK_SAMPLE_COUNT_1_BIT;
  imageInfo.sharingMode = VK_SHARING_MODE_EXCLUSIVE;
  VK_CHECK(vkCreateImage(device, &imageInfo, nullptr, &textureImage));

  VkMemoryRequirements memRequirements;
  vkGetImageMemoryRequirements(device, textureImage, &memRequirements);

  VkMemoryAllocateInfo allocInfo{};
  allocInfo.sType = VK_STRUCTURE_TYPE_MEMORY_ALLOCATE_INFO;
  allocInfo.allocationSize = memRequirements.size;
  allocInfo.memoryTypeIndex = findMemoryType(memRequirements.memoryTypeBits,
                                             VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT);
  VK_CHECK(vkAllocateMemory(device, &allocInfo, nullptr, &textureImageMemory));
  vkBindImageMemory(device, textureImage, textureImageMemory, 0);
}
```
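Because format support with optimal tiling varies by device, createTextureImage could check it before calling vkCreateImage: call `vkGetPhysicalDeviceFormatProperties(physicalDevice, format, &props)` and test `props.optimalTilingFeatures` for `VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT`. The sketch below models that selection without a device; the `optimalFeatures` callback stands in for the query, the constants reuse the numeric values of `VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT` (0x1), `VK_FORMAT_R8G8B8_UNORM` (23), and `VK_FORMAT_R8G8B8A8_UNORM` (37), and `pickTextureFormat` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <optional>
#include <vector>

// Same numeric values as the corresponding Vulkan enums.
constexpr uint32_t kSampledImage = 0x00000001;  // VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT
constexpr int kR8G8B8Unorm = 23;                // VK_FORMAT_R8G8B8_UNORM
constexpr int kR8G8B8A8Unorm = 37;              // VK_FORMAT_R8G8B8A8_UNORM

// Returns the first candidate whose optimal-tiling features include sampling.
// optimalFeatures(format) stands in for VkFormatProperties::optimalTilingFeatures.
std::optional<int> pickTextureFormat(
    const std::vector<int>& candidates,
    const std::function<uint32_t(int)>& optimalFeatures) {
  for (int format : candidates) {
    if ((optimalFeatures(format) & kSampledImage) == kSampledImage) {
      return format;
    }
  }
  return std::nullopt;
}
```

Listing the preferred format first and a widely supported fallback second keeps the choice explicit; the decoded pixel data then has to be produced in whichever format is picked.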

```cpp
// CODELAB: hellovk.h
void HelloVK::copyBufferToImage() {
  VkImageSubresourceRange subresourceRange{};
  subresourceRange.aspectMask = VK_IMAGE_ASPECT_COLOR_BIT;
  subresourceRange.baseMipLevel = 0;
  subresourceRange.levelCount = 1;
  subresourceRange.layerCount = 1;

  // Transition the image from UNDEFINED to TRANSFER_DST_OPTIMAL for the copy.
  VkImageMemoryBarrier imageMemoryBarrier{};
  imageMemoryBarrier.sType = VK_STRUCTURE_TYPE_IMAGE_MEMORY_BARRIER;
  imageMemoryBarrier.srcQueueFamilyIndex = VK_QUEUE_FAMILY_IGNORED;
  imageMemoryBarrier.dstQueueFamilyIndex = VK_QUEUE_FAMILY_IGNORED;
  imageMemoryBarrier.image = textureImage;
  imageMemoryBarrier.subresourceRange = subresourceRange;
  imageMemoryBarrier.srcAccessMask = 0;
  imageMemoryBarrier.dstAccessMask = VK_ACCESS_TRANSFER_WRITE_BIT;
  imageMemoryBarrier.oldLayout = VK_IMAGE_LAYOUT_UNDEFINED;
  imageMemoryBarrier.newLayout = VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL;

  // Temporary command buffer for the one-off upload.
  VkCommandBuffer cmd;
  VkCommandBufferAllocateInfo cmdAllocInfo{};
  cmdAllocInfo.sType = VK_STRUCTURE_TYPE_COMMAND_BUFFER_ALLOCATE_INFO;
  cmdAllocInfo.commandPool = commandPool;
  cmdAllocInfo.level = VK_COMMAND_BUFFER_LEVEL_PRIMARY;
  cmdAllocInfo.commandBufferCount = 1;
  VK_CHECK(vkAllocateCommandBuffers(device, &cmdAllocInfo, &cmd));

  VkCommandBufferBeginInfo beginInfo{};
  beginInfo.sType = VK_STRUCTURE_TYPE_COMMAND_BUFFER_BEGIN_INFO;
  beginInfo.flags = VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT;
  VK_CHECK(vkBeginCommandBuffer(cmd, &beginInfo));

  vkCmdPipelineBarrier(cmd, VK_PIPELINE_STAGE_HOST_BIT,
                       VK_PIPELINE_STAGE_TRANSFER_BIT, 0, 0, nullptr, 0,
                       nullptr, 1, &imageMemoryBarrier);

  // Copy the tightly packed staging buffer into mip level 0 of the image.
  VkBufferImageCopy bufferImageCopy{};
  bufferImageCopy.imageSubresource.aspectMask = VK_IMAGE_ASPECT_COLOR_BIT;
  bufferImageCopy.imageSubresource.mipLevel = 0;
  bufferImageCopy.imageSubresource.baseArrayLayer = 0;
  bufferImageCopy.imageSubresource.layerCount = 1;
  bufferImageCopy.imageExtent.width = textureWidth;
  bufferImageCopy.imageExtent.height = textureHeight;
  bufferImageCopy.imageExtent.depth = 1;
  bufferImageCopy.bufferOffset = 0;
  vkCmdCopyBufferToImage(cmd, stagingBuffer, textureImage,
                         VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL,
                         1, &bufferImageCopy);

  // Transition to SHADER_READ_ONLY_OPTIMAL so the fragment shader can sample it.
  imageMemoryBarrier.srcAccessMask = VK_ACCESS_TRANSFER_WRITE_BIT;
  imageMemoryBarrier.dstAccessMask = VK_ACCESS_SHADER_READ_BIT;
  imageMemoryBarrier.oldLayout = VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL;
  imageMemoryBarrier.newLayout = VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL;
  vkCmdPipelineBarrier(cmd, VK_PIPELINE_STAGE_TRANSFER_BIT,
                       VK_PIPELINE_STAGE_FRAGMENT_SHADER_BIT, 0, 0, nullptr,
                       0, nullptr, 1, &imageMemoryBarrier);
  VK_CHECK(vkEndCommandBuffer(cmd));

  VkSubmitInfo submitInfo{};
  submitInfo.sType = VK_STRUCTURE_TYPE_SUBMIT_INFO;
  submitInfo.commandBufferCount = 1;
  submitInfo.pCommandBuffers = &cmd;
  VK_CHECK(vkQueueSubmit(graphicsQueue, 1, &submitInfo, VK_NULL_HANDLE));

  // Wait for the upload to finish, then release the temporary command buffer.
  vkQueueWaitIdle(graphicsQueue);
  vkFreeCommandBuffers(device, commandPool, 1, &cmd);
}
```
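Because `bufferRowLength` and `bufferImageHeight` are left at 0 in the `VkBufferImageCopy` above, Vulkan treats the buffer data as tightly packed according to `imageExtent`, so the staging buffer must hold at least width × height × texel size bytes after `bufferOffset`. The hypothetical helper below computes how many bytes a single-layer 2D copy reads, including the case of a nonzero row length (rows padded in the buffer); `bufferImageHeight` only matters for 3D images or multiple array layers and is left out.

```cpp
#include <cassert>
#include <cstdint>

// Bytes a single-layer 2D buffer-to-image copy reads, starting at bufferOffset.
// rowLength follows the VkBufferImageCopy rule: 0 means "tightly packed",
// i.e. rows are imageExtent.width texels apart.
uint64_t bytesReadByCopy(uint32_t width, uint32_t height, uint32_t texelSize,
                         uint32_t rowLength = 0) {
  if (width == 0 || height == 0) {
    return 0;
  }
  const uint64_t rowPitch = rowLength == 0 ? width : rowLength;  // in texels
  // Every row but the last spans a full row pitch; the last needs only width.
  return ((height - 1) * rowPitch + width) * texelSize;
}
```

For this codelab's three-channel texture, a 512 × 512 image needs 786,432 bytes in the staging buffer.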

Next, create a VkImageView and a VkSampler, which the fragment shader uses to sample a color for each rendered pixel.

A VkImageView is a wrapper on top of a VkImage. It describes how to interpret the image's data: for example, which format to view it as, whether to access only some mip levels or array layers, and whether to swizzle (reorder) the color channels.

A VkSampler describes how a shader reads a texture: how neighboring texels are filtered (blended), how mipmaps are selected, and what happens to coordinates outside the [0, 1] range. A sampler is paired with an image view in a combined image sampler descriptor, which is what a sampler2D uniform in a shader reads.

```cpp
// CODELAB: hellovk.h
void HelloVK::createTextureImageViews() {
  VkImageViewCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_IMAGE_VIEW_CREATE_INFO;
  createInfo.image = textureImage;
  createInfo.viewType = VK_IMAGE_VIEW_TYPE_2D;
  createInfo.format = VK_FORMAT_R8G8B8_UNORM;
  createInfo.components.r = VK_COMPONENT_SWIZZLE_IDENTITY;
  createInfo.components.g = VK_COMPONENT_SWIZZLE_IDENTITY;
  createInfo.components.b = VK_COMPONENT_SWIZZLE_IDENTITY;
  createInfo.components.a = VK_COMPONENT_SWIZZLE_IDENTITY;
  createInfo.subresourceRange.aspectMask = VK_IMAGE_ASPECT_COLOR_BIT;
  createInfo.subresourceRange.baseMipLevel = 0;
  createInfo.subresourceRange.levelCount = 1;
  createInfo.subresourceRange.baseArrayLayer = 0;
  createInfo.subresourceRange.layerCount = 1;
  VK_CHECK(vkCreateImageView(device, &createInfo, nullptr, &textureImageView));
}
```

You also need to create a sampler to pass to the shader.

```cpp
// CODELAB: hellovk.h
void HelloVK::createTextureSampler() {
  VkSamplerCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_SAMPLER_CREATE_INFO;
  createInfo.magFilter = VK_FILTER_LINEAR;
  createInfo.minFilter = VK_FILTER_LINEAR;
  createInfo.addressModeU = VK_SAMPLER_ADDRESS_MODE_REPEAT;
  createInfo.addressModeV = VK_SAMPLER_ADDRESS_MODE_REPEAT;
  createInfo.addressModeW = VK_SAMPLER_ADDRESS_MODE_REPEAT;
  createInfo.anisotropyEnable = VK_FALSE;
  createInfo.maxAnisotropy = 16;
  createInfo.borderColor = VK_BORDER_COLOR_INT_OPAQUE_BLACK;
  createInfo.unnormalizedCoordinates = VK_FALSE;
  createInfo.compareEnable = VK_FALSE;
  createInfo.compareOp = VK_COMPARE_OP_ALWAYS;
  createInfo.mipmapMode = VK_SAMPLER_MIPMAP_MODE_LINEAR;
  createInfo.mipLodBias = 0.0f;
  createInfo.minLod = 0.0f;
  createInfo.maxLod = VK_LOD_CLAMP_NONE;
  VK_CHECK(vkCreateSampler(device, &createInfo, nullptr, &textureSampler));
}
```
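To build intuition for the `addressMode*` and filter settings, the sketch below is a simplified one-dimensional model of sampling: `REPEAT` keeps only the fractional part of the coordinate, `CLAMP_TO_EDGE` pins it to the edge, and `LINEAR` filtering blends the two texels whose centers surround the sample point. The real rules in the Vulkan specification operate on texel indices with additional detail; `wrapRepeat`, `wrapClampToEdge`, and `sampleLinearRepeat` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// REPEAT: keep the fractional part, so 1.25 maps to 0.25 and -0.25 to 0.75.
float wrapRepeat(float u) { return u - std::floor(u); }

// CLAMP_TO_EDGE (simplified to normalized coordinates).
float wrapClampToEdge(float u) { return std::fmin(std::fmax(u, 0.0f), 1.0f); }

// 1D LINEAR filtering with REPEAT addressing. Texel i is centered at
// (i + 0.5) / n; the result blends the two nearest texels by distance.
float sampleLinearRepeat(const std::vector<float>& texels, float u) {
  const int n = static_cast<int>(texels.size());
  const float x = wrapRepeat(u) * n - 0.5f;         // position in texel space
  const int i0 = static_cast<int>(std::floor(x));
  const float t = x - static_cast<float>(i0);        // weight of the right texel
  auto wrapIndex = [n](int i) { return ((i % n) + n) % n; };
  return texels[wrapIndex(i0)] * (1.0f - t) + texels[wrapIndex(i0 + 1)] * t;
}
```

With `REPEAT`, a sample at the very edge (u = 0) blends the last and first texels, which is why repeating textures wrap seamlessly while clamped ones do not.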

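Finally, modify the shaders to sample the texture instead of using vertex colors. Texture coordinates are floating-point positions that map locations on a texture to locations on a geometric surface. In this example, the vertex shader writes each vertex's coordinates from the hard-coded texCoords array to its vTexCoords output, and the rasterizer interpolates them across the triangle for the fragment shader. The coordinates span the [0, 1] range in both directions, so the triangle covers the texture exactly once. Because the fragment shader reads the texture through a combined image sampler at binding 1, the descriptor set layout, the descriptor pool, and the descriptor writes also need a VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER entry for binding 1 that references textureImageView and textureSampler.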

```glsl
// CODELAB: shader.vert
#version 450

// Uniform buffer containing the MVP matrix computed on the CPU
// by getPrerotationMatrix (aspect-ratio correction plus rotation).
layout(binding = 0) uniform UniformBufferObject {
    mat4 MVP;
} ubo;

vec2 positions[3] = vec2[](
    vec2(0.0, 0.577),
    vec2(-0.5, -0.289),
    vec2(0.5, -0.289)
);

vec2 texCoords[3] = vec2[](
    vec2(0.5, 1.0),
    vec2(0.0, 0.0),
    vec2(1.0, 0.0)
);

layout(location = 0) out vec2 vTexCoords;

void main() {
    gl_Position = ubo.MVP * vec4(positions[gl_VertexIndex], 0.0, 1.0);
    vTexCoords = texCoords[gl_VertexIndex];
}
```

The fragment shader samples the texture at the interpolated coordinates.

```glsl
// CODELAB: shader.frag
#version 450

layout(location = 0) in vec2 vTexCoords;
layout(binding = 1) uniform sampler2D samp;

// Output colour for the fragment
layout(location = 0) out vec4 outColor;

void main() {
    outColor = texture(samp, vTexCoords);
}
```
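The value of vTexCoords that reaches each fragment is a weighted average of the three vertex values, using the fragment's barycentric weights. Interpolation is perspective-correct in general, but the MVP here contains no projection, so every vertex has w = 1 and it reduces to plain linear interpolation. The sketch below reproduces that for the texCoords array in shader.vert; `Vec2`, `kTexCoords`, and `interpolate` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec2 {
  float x, y;
};

// Texture coordinates assigned to the three vertices in shader.vert.
constexpr std::array<Vec2, 3> kTexCoords = {{{0.5f, 1.0f}, {0.0f, 0.0f}, {1.0f, 0.0f}}};

// Value the fragment shader receives at a point with barycentric weights
// (b0, b1, b2), where b0 + b1 + b2 == 1 and every vertex has w == 1.
Vec2 interpolate(const std::array<Vec2, 3>& v, float b0, float b1, float b2) {
  return {b0 * v[0].x + b1 * v[1].x + b2 * v[2].x,
          b0 * v[0].y + b1 * v[1].y + b2 * v[2].y};
}
```

At a vertex the weights are (1, 0, 0), so the fragment receives that vertex's coordinates unchanged; at the triangle's centroid it receives (0.5, 1/3).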

At the end of this step, you will see that your rotating triangle is textured!

Check that this is the case, and if anything goes wrong, you can compare your work with the commit of the repo titled [codelab] step: apply texture.

11. Adding Validation Layer

Validation layers are optional components that hook into Vulkan function calls to perform additional operations, such as:

- Validating the values of parameters to detect misuse

- Tracking creation and destruction of objects to find resource leaks

- Checking thread safety

- Logging calls for profiling and replay

Because the validation layer binaries are a sizable download, they are not shipped in the APK. To enable validation layers, follow these steps:

- Download the latest Android binaries from: https://github.com/KhronosGroup/Vulkan-ValidationLayers/releases

- Place the libraries in their respective ABI folders under app/src/main/jniLibs (for example, app/src/main/jniLibs/arm64-v8a/libVkLayer_khronos_validation.so).

- Enable the layers when creating the Vulkan instance, as createInstance does below when enableValidationLayers is true.

```cpp
// CODELAB: hellovk.h
void HelloVK::createInstance() {
  assert(!enableValidationLayers ||
         checkValidationLayerSupport());  // validation layers requested, but not available!
  auto requiredExtensions = getRequiredExtensions(enableValidationLayers);

  VkApplicationInfo appInfo{};
  appInfo.sType = VK_STRUCTURE_TYPE_APPLICATION_INFO;
  appInfo.pApplicationName = "Hello Triangle";
  appInfo.applicationVersion = VK_MAKE_VERSION(1, 0, 0);
  appInfo.pEngineName = "No Engine";
  appInfo.engineVersion = VK_MAKE_VERSION(1, 0, 0);
  appInfo.apiVersion = VK_API_VERSION_1_0;

  VkInstanceCreateInfo createInfo{};
  createInfo.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;
  createInfo.pApplicationInfo = &appInfo;
  createInfo.enabledExtensionCount = (uint32_t)requiredExtensions.size();
  createInfo.ppEnabledExtensionNames = requiredExtensions.data();

  // Declared outside the if block: createInfo.pNext points at it, so it must
  // stay alive until vkCreateInstance returns.
  VkDebugUtilsMessengerCreateInfoEXT debugCreateInfo{};
  if (enableValidationLayers) {
    createInfo.enabledLayerCount =
        static_cast<uint32_t>(validationLayers.size());
    createInfo.ppEnabledLayerNames = validationLayers.data();
    populateDebugMessengerCreateInfo(debugCreateInfo);
    createInfo.pNext = &debugCreateInfo;
  } else {
    createInfo.enabledLayerCount = 0;
    createInfo.pNext = nullptr;
  }
  VK_CHECK(vkCreateInstance(&createInfo, nullptr, &instance));

  uint32_t extensionCount = 0;
  vkEnumerateInstanceExtensionProperties(nullptr, &extensionCount, nullptr);
  std::vector<VkExtensionProperties> extensions(extensionCount);
  vkEnumerateInstanceExtensionProperties(nullptr, &extensionCount,
                                         extensions.data());
  LOGI("available extensions");
  for (const auto &extension : extensions) {
    LOGI("\t %s", extension.extensionName);
  }
}
```
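checkValidationLayerSupport is not shown in this step. A common approach is to compare the requested layer names against the `layerName` fields returned by `vkEnumerateInstanceLayerProperties` (called once for the count and once for the data). To keep the sketch runnable without a device, it takes the available names as a plain vector; `allLayersAvailable` is a hypothetical name, and `VK_LAYER_KHRONOS_validation` is the name of the Khronos validation layer.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// `available` stands in for the layerName strings reported by
// vkEnumerateInstanceLayerProperties.
bool allLayersAvailable(const std::vector<std::string>& requested,
                        const std::vector<std::string>& available) {
  return std::all_of(requested.begin(), requested.end(),
                     [&](const std::string& name) {
                       return std::find(available.begin(), available.end(),
                                        name) != available.end();
                     });
}
```

If the .so files were not copied into jniLibs, the layer simply does not appear in the enumerated list, and a check like this fails before vkCreateInstance would return VK_ERROR_LAYER_NOT_PRESENT.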

12. Congratulations

Congratulations, you have successfully set up your Vulkan rendering pipeline and are ready to develop your game!

Stay tuned as we add more Vulkan features on Android.

For more information on getting started with Vulkan on Android, read Get started with Vulkan on Android.