Android 15 introduces great new features and APIs for developers. The following sections summarize these features to help you get started with the related APIs.

For a detailed list of new, modified, and removed APIs, read the API diff report. For details on new APIs, visit the Android API reference, where new APIs are highlighted for visibility. Also, to learn about areas where platform changes might affect your apps, be sure to review the Android 15 behavior changes that affect apps when they target Android 15 and the behavior changes that affect all apps regardless of targetSdkVersion.

Camera and media

Android 15 includes a variety of features that improve the camera and media experience and that give you access to tools and hardware to support creators in bringing their vision to life on Android.

In-app camera controls

Android 15 adds a new extension for more control over the camera hardware and its algorithms on supported devices:

- Advanced flash strength adjustments enabling precise control of flash intensity in both SINGLE and TORCH modes while capturing images.
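As a rough illustration of the flash strength control, the sketch below builds a still-capture request with an explicit strength level. It assumes the Camera2 keys `CaptureRequest.FLASH_STRENGTH_LEVEL` and `CameraCharacteristics.FLASH_SINGLE_STRENGTH_MAX_LEVEL` from API level 35. The helper name `buildFlashCaptureRequest` and the `requestedLevel` parameter are hypothetical, and the caller is expected to add an output target before submitting the request.

```kotlin
import android.hardware.camera2.CameraCharacteristics
import android.hardware.camera2.CameraDevice
import android.hardware.camera2.CaptureRequest
import android.os.Build

// Hypothetical helper: returns a still-capture request builder with a clamped flash strength.
fun buildFlashCaptureRequest(
    camera: CameraDevice,
    characteristics: CameraCharacteristics,
    requestedLevel: Int
): CaptureRequest.Builder {
    val builder = camera.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE)
    builder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON)
    builder.set(CaptureRequest.FLASH_MODE, CaptureRequest.FLASH_MODE_SINGLE)

    // The strength keys are only defined on Android 15 (API level 35) and higher.
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.VANILLA_ICE_CREAM) {
        val maxLevel = maxOf(
            1,
            characteristics.get(CameraCharacteristics.FLASH_SINGLE_STRENGTH_MAX_LEVEL) ?: 1
        )
        // Clamp the requested level to the range the device reports.
        builder.set(CaptureRequest.FLASH_STRENGTH_LEVEL, requestedLevel.coerceIn(1, maxLevel))
    }
    return builder
}
```

On devices that report a maximum level of 1, the clamped value is always 1, so the request behaves like a regular single flash.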

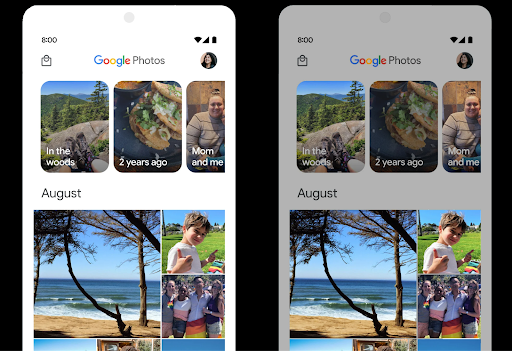

HDR headroom control

Android 15 chooses HDR headroom that is appropriate for the underlying device capabilities and bit-depth of the panel. For pages that have lots of SDR content, such as a messaging app displaying a single HDR thumbnail, this behavior can end up adversely influencing the perceived brightness of the SDR content. Android 15 lets you control the HDR headroom with setDesiredHdrHeadroom to strike a balance between SDR and HDR content.
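A minimal sketch of capping the headroom for a mostly-SDR screen follows. It assumes `Window.setDesiredHdrHeadroom(float)` as available from API level 35, where 1.0 corresponds to SDR and 0.0 resets to the system default. The extension name `limitHdrHeadroom` and the 1.5 value are illustrative only.

```kotlin
import android.app.Activity
import android.os.Build

// Hypothetical extension: ask the system to keep HDR highlights closer to SDR brightness.
fun Activity.limitHdrHeadroom(headroom: Float = 1.5f) {
    // setDesiredHdrHeadroom is only available on Android 15 (API level 35) and higher.
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.VANILLA_ICE_CREAM) {
        window.setDesiredHdrHeadroom(headroom)
    }
}

// Hypothetical extension: hand the decision back to the system.
fun Activity.resetHdrHeadroom() {
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.VANILLA_ICE_CREAM) {
        window.setDesiredHdrHeadroom(0f)
    }
}
```

An app might call `limitHdrHeadroom()` when a conversation list is shown and `resetHdrHeadroom()` when the user opens an HDR image full screen.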

Loudness control

Android 15 introduces support for the CTA-2075 loudness standard to help you avoid audio loudness inconsistencies and ensure users don't have to constantly adjust volume when switching between content. The system leverages known characteristics of the output devices (headphones and speaker) along with loudness metadata available in AAC audio content to intelligently adjust the audio loudness and dynamic range compression levels.

To enable this feature, you need to ensure loudness metadata is available in your AAC content and enable the platform feature in your app. For this, you instantiate a LoudnessCodecController object by calling its create factory method with the audio session ID from the associated AudioTrack; this automatically starts applying audio updates. You can pass an OnLoudnessCodecUpdateListener to modify or filter loudness parameters before they are applied on the MediaCodec.

// Media contains metadata of type MPEG_4 OR MPEG_D
val mediaCodec = …
val audioTrack = AudioTrack.Builder()
    .setSessionId(sessionId)
    .build()
...
// Create new loudness controller that applies the parameters to the MediaCodec
try {
    val lcController = LoudnessCodecController.create(sessionId)
    // Starts applying audio updates for each added MediaCodec
    lcController.addMediaCodec(mediaCodec)
} catch (e: Exception) {
    // Creating the controller or attaching the codec failed; continue without loudness control
}

AndroidX media3 ExoPlayer will soon be updated to leverage LoudnessCodecController APIs for a seamless app integration.

Low Light Boost

Android 15 introduces Low Light Boost, a new auto-exposure mode available to both Camera 2 and the night mode camera extension. Low Light Boost adjusts the exposure of the Preview stream in low-light conditions. This is different from how the night mode camera extension creates still images, because night mode combines a burst of photos to create a single, enhanced image. While night mode works very well for creating a still image, it can't create a continuous stream of frames, but Low Light Boost can. Thus, Low Light Boost enables new camera capabilities, such as:

- Providing an enhanced image preview, so users are better able to frame their low-light pictures

- Scanning QR codes in low light

If you enable Low Light Boost, it automatically turns on when there's a low light level, and turns off when there's more light.

Apps can record off the Preview stream in low-light conditions to save a brightened video.

For more information, see Low Light Boost.
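The opt-in described above might look like the following sketch for a Camera2 preview request. It assumes the auto-exposure mode constant `CONTROL_AE_MODE_ON_LOW_LIGHT_BOOST_BRIGHTNESS_PRIORITY` from API level 35; the helper name `enableLowLightBoostIfAvailable` is hypothetical.

```kotlin
import android.hardware.camera2.CameraCharacteristics
import android.hardware.camera2.CameraMetadata
import android.hardware.camera2.CaptureRequest
import android.os.Build

// Hypothetical helper: switches the preview request to Low Light Boost when the camera lists it.
// Returns true if the mode was applied to the request builder.
fun enableLowLightBoostIfAvailable(
    characteristics: CameraCharacteristics,
    previewRequest: CaptureRequest.Builder
): Boolean {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return false

    val boostMode = CameraMetadata.CONTROL_AE_MODE_ON_LOW_LIGHT_BOOST_BRIGHTNESS_PRIORITY
    val availableModes = characteristics.get(CameraCharacteristics.CONTROL_AE_AVAILABLE_MODES)
    if (availableModes == null || boostMode !in availableModes) return false

    // Once set, the camera decides per frame whether the boost is actually active.
    previewRequest.set(CaptureRequest.CONTROL_AE_MODE, boostMode)
    return true
}
```

Checking the available modes first lets the app fall back to its regular auto-exposure mode on cameras that don't support the feature.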

Virtual MIDI 2.0 devices

Android 13 added support for connecting to MIDI 2.0 devices using USB, which communicate using Universal MIDI Packets (UMP). Android 15 extends UMP support to virtual MIDI apps, enabling composition apps to control synthesizer apps as a virtual MIDI 2.0 device just like they would with a USB MIDI 2.0 device.

Connectivity

Android 15 updates the platform to give your app access to the latest advances in communication.

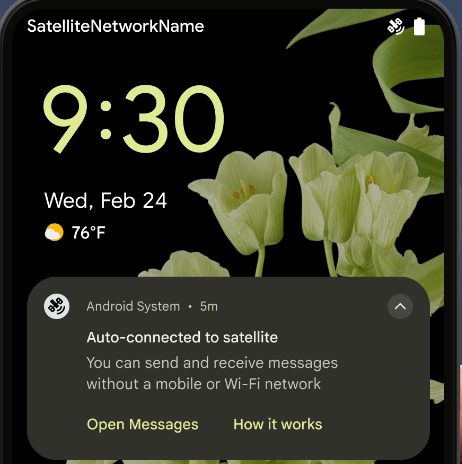

Satellite support

Android 15 continues to extend platform support for satellite connectivity and includes some UI elements to ensure a consistent user experience across the satellite connectivity landscape.

Apps can use ServiceState.isUsingNonTerrestrialNetwork() to detect when a device is connected to a satellite, giving them more awareness of why full network services might be unavailable. Additionally, Android 15 provides support for SMS and MMS apps as well as preloaded RCS apps to use satellite connectivity for sending and receiving messages.
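One way to observe the satellite state is to listen for service state changes, as in the sketch below. It relies on `TelephonyCallback.ServiceStateListener` (API level 31) and `ServiceState.isUsingNonTerrestrialNetwork()` (API level 35). The class name `SatelliteStateCallback` and the `onSatelliteChanged` lambda are hypothetical, and any permissions the listener needs on a given device are not shown.

```kotlin
import android.content.Context
import android.os.Build
import android.telephony.ServiceState
import android.telephony.TelephonyCallback
import android.telephony.TelephonyManager

// Hypothetical callback: forwards whether the device is currently on a satellite network.
class SatelliteStateCallback(
    private val onSatelliteChanged: (Boolean) -> Unit
) : TelephonyCallback(), TelephonyCallback.ServiceStateListener {

    override fun onServiceStateChanged(serviceState: ServiceState) {
        // The satellite check only exists on Android 15 (API level 35) and higher.
        if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.VANILLA_ICE_CREAM) {
            onSatelliteChanged(serviceState.isUsingNonTerrestrialNetwork)
        }
    }
}

// Registers the callback on the main executor; unregister it when it is no longer needed.
fun registerSatelliteCallback(context: Context, callback: SatelliteStateCallback) {
    val telephony = context.getSystemService(TelephonyManager::class.java)
    telephony.registerTelephonyCallback(context.mainExecutor, callback)
}
```

An app could use the reported value to explain to the user why large downloads or calls are temporarily unavailable.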

Smoother NFC experiences

Android 15 is working to make the tap to pay experience more seamless and reliable while continuing to support Android's robust NFC app ecosystem. On supported devices, apps can request the NfcAdapter to enter observe mode, where the device listens but doesn't respond to NFC readers, sending the app's NFC service PollingFrame objects to process. The PollingFrame objects can be used to authenticate ahead of the first communication to the NFC reader, allowing for a one tap transaction in many cases.
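The following is a sketch only of how observe mode might be wired up. It assumes `NfcAdapter.isObserveModeSupported()`, `NfcAdapter.setObserveModeEnabled(boolean)`, and `HostApduService.processPollingFrames(List<PollingFrame>)` from API level 35. The service name `ExamplePaymentService` is hypothetical, the manifest declarations for the service are omitted, and the system may impose further conditions (for example, on which app may toggle the mode) that are not modeled here.

```kotlin
import android.nfc.NfcAdapter
import android.nfc.cardemulation.HostApduService
import android.nfc.cardemulation.PollingFrame
import android.os.Build
import android.os.Bundle
import android.util.Log

// Hypothetical helper: asks the adapter to listen to readers without responding.
// Returns true only if the request was accepted.
fun enterObserveMode(adapter: NfcAdapter): Boolean {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return false
    if (!adapter.isObserveModeSupported) return false
    return adapter.setObserveModeEnabled(true)
}

// Hypothetical card emulation service that inspects polling frames before any APDU exchange.
class ExamplePaymentService : HostApduService() {

    override fun processPollingFrames(frames: List<PollingFrame>) {
        // Inspect the frames here, for example to decide whether to authenticate the user
        // before leaving observe mode and answering the reader.
        Log.d("ExamplePaymentService", "Received ${frames.size} polling frame(s)")
    }

    override fun processCommandApdu(commandApdu: ByteArray, extras: Bundle?): ByteArray? {
        // Placeholder: a real service returns a response APDU here.
        return null
    }

    override fun onDeactivated(reason: Int) {
        // No state to clean up in this sketch.
    }
}
```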

Wallet role

Android 15 introduces a new Wallet role that allows tighter integration with the user's preferred wallet app. This role replaces the NFC default contactless payment setting. Users can manage the Wallet role holder by navigating to Settings > Apps > Default Apps.

The Wallet role is used when routing NFC taps for AIDs registered in the payment category. Taps always go to the Wallet role holder unless another app that is registered for the same AID is running in the foreground.

This role is also used to determine where the Wallet QuickAccess tile should go when activated. When the role is set to "None", the QuickAccess tile isn't available and payment category NFC taps are only delivered to the foreground app.
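An app can check whether it currently holds the role with `RoleManager`, as in this small sketch. `RoleManager.ROLE_WALLET` is assumed to be the API level 35 role constant; the function name `isDefaultWallet` is hypothetical.

```kotlin
import android.app.role.RoleManager
import android.content.Context
import android.os.Build

// Hypothetical helper: true when this app is the user's selected wallet app.
fun isDefaultWallet(context: Context): Boolean {
    // The Wallet role only exists on Android 15 (API level 35) and higher.
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return false

    val roleManager = context.getSystemService(RoleManager::class.java)
    return roleManager.isRoleAvailable(RoleManager.ROLE_WALLET) &&
        roleManager.isRoleHeld(RoleManager.ROLE_WALLET)
}
```

A wallet app might use the result to decide whether to show a prompt that explains how to select it under Settings > Apps > Default Apps.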

Developer productivity and tools

While most of our work to improve your productivity centers around tools like Android Studio, Jetpack Compose, and the Android Jetpack libraries, we always look for ways in the platform to help you more easily realize your vision.

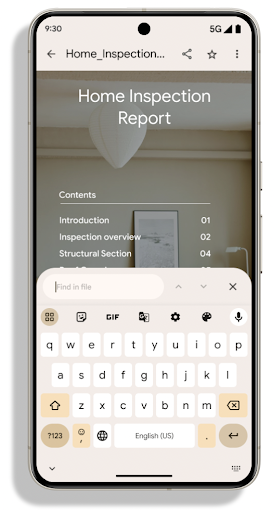

PDF improvements

Android 15 Developer Preview 2 includes an early preview of substantial improvements to the PdfRenderer APIs. Apps can incorporate advanced features such as rendering password-protected files, annotations, form editing, searching, and selection with copy. Linearized PDF optimizations are supported to speed local PDF viewing and reduce resource use.
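As an illustration of opening a password-protected file, the sketch below renders the first page to a bitmap. It assumes the `android.graphics.pdf.LoadParams` builder and the two-argument `PdfRenderer` constructor from API level 35. The function name `renderFirstPage` is hypothetical, and error handling for a wrong password is left out.

```kotlin
import android.graphics.Bitmap
import android.graphics.pdf.LoadParams
import android.graphics.pdf.PdfRenderer
import android.os.Build
import android.os.ParcelFileDescriptor
import java.io.File

// Hypothetical helper: renders page 0 of a password-protected PDF, or returns null
// on platform versions that lack the password-aware constructor.
fun renderFirstPage(file: File, password: String): Bitmap? {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return null

    val descriptor = ParcelFileDescriptor.open(file, ParcelFileDescriptor.MODE_READ_ONLY)
    val params = LoadParams.Builder().setPassword(password).build()

    // Closing the renderer also closes the file descriptor it was given.
    return PdfRenderer(descriptor, params).use { renderer ->
        renderer.openPage(0).use { page ->
            val bitmap = Bitmap.createBitmap(page.width, page.height, Bitmap.Config.ARGB_8888)
            page.render(bitmap, null, null, PdfRenderer.Page.RENDER_MODE_FOR_DISPLAY)
            bitmap
        }
    }
}
```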

The PdfRenderer has been moved to a module that can be updated using Google Play system updates independent of the platform release, and we're supporting these changes back to Android 11 (API level 30) by creating a compatible pre-Android 15 version of the API surface, called PdfRendererPreV.

We value your feedback on the enhancements we've made to the PdfRenderer API surface, and we plan to make it even easier to incorporate these APIs into your app with an upcoming Android Jetpack library.

Automatic language switching refinements

Android 14 added on-device, multi-language recognition in audio with automatic switching between languages, but this can cause words to get dropped, especially when languages switch with less of a pause between the two utterances. Android 15 adds additional controls to help apps tune this switching to their use case.

EXTRA_LANGUAGE_SWITCH_INITIAL_ACTIVE_DURATION_TIME_MILLIS confines the automatic switching to the beginning of the audio session, while EXTRA_LANGUAGE_SWITCH_MAX_SWITCHES deactivates the language switching after a defined number of switches. These options are particularly useful if you expect that there will be a single language spoken during the session that should be autodetected.
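A sketch of how these extras might be set on a recognition intent follows. It assumes that the switch-limit constant is named `EXTRA_LANGUAGE_SWITCH_MAX_SWITCHES` in `RecognizerIntent` and that both new extras take integer values. The function name and the 5-second and single-switch values are illustrative.

```kotlin
import android.content.Intent
import android.speech.RecognizerIntent

// Hypothetical helper: builds an intent that detects the spoken language once, early on.
fun buildSingleLanguageRecognizerIntent(): Intent =
    Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH).apply {
        putExtra(
            RecognizerIntent.EXTRA_LANGUAGE_MODEL,
            RecognizerIntent.LANGUAGE_MODEL_FREE_FORM
        )
        // Language switching itself was introduced in Android 14.
        putExtra(
            RecognizerIntent.EXTRA_ENABLE_LANGUAGE_SWITCH,
            RecognizerIntent.LANGUAGE_SWITCH_BALANCED
        )
        // Only allow switching during the first 5 seconds of the session (illustrative value).
        putExtra(RecognizerIntent.EXTRA_LANGUAGE_SWITCH_INITIAL_ACTIVE_DURATION_TIME_MILLIS, 5_000)
        // Stop switching after a single switch (illustrative value).
        putExtra(RecognizerIntent.EXTRA_LANGUAGE_SWITCH_MAX_SWITCHES, 1)
    }
```

The resulting intent can be passed to a `SpeechRecognizer` in the usual way; recognizers that don't understand the new extras are expected to ignore them.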

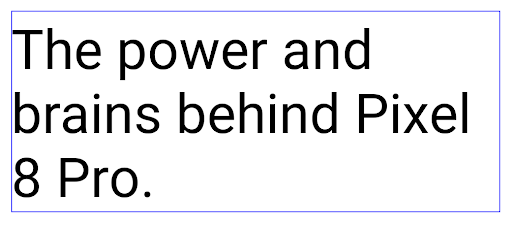

Granular line break controls

Starting in Android 15, a TextView and the underlying line breaker can keep a given portion of text on the same line to improve readability. You can take advantage of this line break customization by using the <nobreak> tag in string resources or createNoBreakSpan. Similarly, you can prevent words from being hyphenated by using the <nohyphen> tag or createNoHyphenationSpan.
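For text that is built at runtime rather than loaded from resources, the span variant might be applied as in this sketch. It assumes the static factory `LineBreakConfigSpan.createNoBreakSpan()` from API level 35; the helper name `keepTogether` is hypothetical.

```kotlin
import android.os.Build
import android.text.SpannableString
import android.text.Spanned
import android.text.style.LineBreakConfigSpan

// Hypothetical helper: marks the first occurrence of `phrase` so it is not split across lines.
fun keepTogether(text: String, phrase: String): CharSequence {
    val start = text.indexOf(phrase)
    // Return the plain text when the phrase is absent or the span isn't available.
    if (start < 0 || Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return text

    return SpannableString(text).apply {
        setSpan(
            LineBreakConfigSpan.createNoBreakSpan(),
            start,
            start + phrase.length,
            Spanned.SPAN_EXCLUSIVE_EXCLUSIVE
        )
    }
}
```

For example, `textView.text = keepTogether("The power and brains behind Pixel 8 Pro.", "Pixel 8 Pro.")` would be the programmatic counterpart of wrapping the phrase in a <nobreak> tag.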

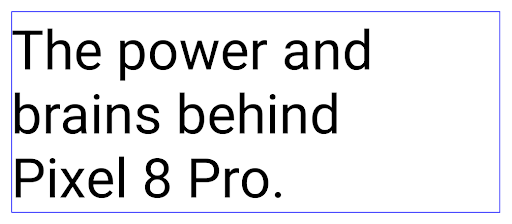

For example, the following string resource doesn't include a line break, and renders with the text "Pixel 8 Pro." breaking in an undesirable place:

<resources>
    <string name="pixel8pro">The power and brains behind Pixel 8 Pro.</string>
</resources>

In contrast, this string resource includes the <nobreak> tag, which wraps the phrase "Pixel 8 Pro." and prevents line breaks:

<resources>
    <string name="pixel8pro">The power and brains behind <nobreak>Pixel 8 Pro.</nobreak></string>
</resources>

The difference in how these strings are rendered is shown in the following images:

[Images: the same line of text laid out without and with the <nobreak> tag.]

OpenJDK 17 updates

Android 15 continues the work of refreshing Android's core libraries to align with the features in the latest OpenJDK LTS releases.

The following key features and improvements are included:

- Quality-of-life improvements around NIO buffers

- Streams

- Additional math and strictmath methods

- util package updates including sequenced collection, map, and set

- ByteBuffer support in Deflater

- Security updates such as X500PrivateCredential and security key updates

These APIs are updated on over a billion devices running Android 12 (API level 31) and higher through Google Play System updates, so you can target the latest programming features.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

Large screens and form factors

Android 15 gives your apps the support to get the most out of Android's form factors, including large screens, flippables, and foldables.

Cover screen support

Your app can declare a property that Android 15 uses to allow your Application or Activity to be presented on the small cover screens of supported flippable devices. These screens are too small to be considered as compatible targets for Android apps to run on, but your app can opt in to supporting them, making your app available in more places.

Performance and battery

Android continues its focus on helping you improve the performance and quality of your apps. Android 15 introduces new APIs that help make tasks in your app more efficient to execute, optimize app performance, and gather insights about your apps.

ApplicationStartInfo API

In previous versions of Android, app startup has been a bit of a mystery. It was challenging to determine within your app whether it started from a cold, warm, or hot state. It was also difficult to know how long your app spent during the various launch phases: forking the process, calling onCreate, drawing the first frame, and more. When your Application class was instantiated, you had no way of knowing whether the app started from a broadcast, a content provider, a job, a backup, boot complete, an alarm, or an Activity.

The ApplicationStartInfo API on Android 15 provides all of this and more. You can even choose to add your own timestamps into the flow to help collect timing data in one place. In addition to collecting metrics, you can use ApplicationStartInfo to help directly optimize app startup; for example, you can eliminate the costly instantiation of UI-related libraries within your Application class when your app is starting up due to a broadcast.
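A minimal sketch of reading the most recent start record is shown below. It assumes `ActivityManager.getHistoricalProcessStartReasons(int)` and the `ApplicationStartInfo` getters from API level 35; the function name `logLastStart` and the log tag are hypothetical.

```kotlin
import android.app.ActivityManager
import android.app.ApplicationStartInfo
import android.content.Context
import android.os.Build
import android.util.Log

// Hypothetical helper: logs how the most recent app start happened.
fun logLastStart(context: Context) {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return

    val activityManager = context.getSystemService(ActivityManager::class.java)
    // Ask for at most one record; the list is empty if nothing has been recorded yet.
    val info = activityManager.getHistoricalProcessStartReasons(1).firstOrNull() ?: return

    val startType = when (info.startType) {
        ApplicationStartInfo.START_TYPE_COLD -> "cold"
        ApplicationStartInfo.START_TYPE_WARM -> "warm"
        ApplicationStartInfo.START_TYPE_HOT -> "hot"
        else -> "unknown"
    }
    val fromBroadcast = info.reason == ApplicationStartInfo.START_REASON_BROADCAST

    // startupTimestamps maps launch phases to timestamps in nanoseconds.
    Log.d(
        "StartInfo",
        "type=$startType fromBroadcast=$fromBroadcast timestamps=${info.startupTimestamps}"
    )
}
```

The `fromBroadcast` flag is the kind of signal an Application class could use to skip initializing UI-related libraries.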

Detailed app size information

Since Android 8.0 (API level 26), Android has included the StorageStats.getAppBytes API that summarizes the installed size of an app as a single number of bytes, which is a sum of the APK size, the size of files extracted from the APK, and files that were generated on the device such as ahead-of-time (AOT) compiled code. This number is not very insightful in terms of how your app is using storage.

Android 15 adds the StorageStats.getAppBytesByDataType([type]) API, which lets you get insight into how your app is using up all that space, including APK file splits, AOT and speedup related code, dex metadata, libraries, and guided profiles.
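The breakdown could be queried for the calling app roughly as follows. The sketch assumes the `StorageStats.APP_DATA_TYPE_*` constants from API level 35 and queries only three of the available types. The function name `appSizeBreakdown` and the map keys are hypothetical.

```kotlin
import android.app.usage.StorageStats
import android.app.usage.StorageStatsManager
import android.content.Context
import android.os.Build
import android.os.Process
import android.os.storage.StorageManager

// Hypothetical helper: returns a few size categories, in bytes, for the calling app.
fun appSizeBreakdown(context: Context): Map<String, Long> {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return emptyMap()

    val manager = context.getSystemService(StorageStatsManager::class.java)
    // Querying the app's own package on the default internal volume.
    val stats = manager.queryStatsForPackage(
        StorageManager.UUID_DEFAULT,
        context.packageName,
        Process.myUserHandle()
    )
    return mapOf(
        "apk" to stats.getAppBytesByDataType(StorageStats.APP_DATA_TYPE_FILE_TYPE_APK),
        "dexopt" to stats.getAppBytesByDataType(StorageStats.APP_DATA_TYPE_FILE_TYPE_DEXOPT_ARTIFACT),
        "libs" to stats.getAppBytesByDataType(StorageStats.APP_DATA_TYPE_LIB)
    )
}
```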

SQLite database improvements

Android 15 introduces new SQLite APIs that expose advanced features from the underlying SQLite engine that target specific performance issues that can manifest in apps.

Developers should consult best practices for SQLite performance to get the most out of their SQLite database, especially when working with large databases or when running latency-sensitive queries.

- Read-only deferred transactions: when issuing transactions that are read-only (don't include write statements), use beginTransactionReadOnly() and beginTransactionWithListenerReadOnly(SQLiteTransactionListener) to issue read-only DEFERRED transactions. Such transactions can run concurrently with each other, and if the database is in WAL mode, they can run concurrently with IMMEDIATE or EXCLUSIVE transactions.

- Row counts and IDs: new APIs were added to retrieve the count of changed rows or the last inserted row ID without issuing an additional query. getLastChangedRowCount() returns the number of rows that were inserted, updated, or deleted by the most recent SQL statement within the current transaction, while getTotalChangedRowCount() returns the count on the current connection. getLastInsertRowId() returns the rowid of the last row to be inserted on the current connection.

- Raw statements: issue a raw SQLite statement, bypassing convenience wrappers and any additional processing overhead that they may incur.
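The first two items above can be sketched as follows. The table name `notes`, its `title` column, and both function names are hypothetical; the `SQLiteDatabase` calls are assumed to be available from API level 35.

```kotlin
import android.database.DatabaseUtils
import android.database.sqlite.SQLiteDatabase
import android.os.Build

// Hypothetical helper: counts rows inside a read-only transaction where available.
fun countNotes(db: SQLiteDatabase): Long {
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.VANILLA_ICE_CREAM) {
        db.beginTransactionReadOnly() // read-only DEFERRED transaction
    } else {
        db.beginTransaction() // fallback on older releases
    }
    try {
        val count = DatabaseUtils.queryNumEntries(db, "notes")
        db.setTransactionSuccessful()
        return count
    } finally {
        db.endTransaction()
    }
}

// Hypothetical helper: inserts a row and reads its ID without issuing an extra query.
// Returns -1 on older releases or if the statement did not change exactly one row.
fun insertNote(db: SQLiteDatabase, title: String): Long {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return -1L

    db.beginTransaction()
    try {
        db.execSQL("INSERT INTO notes (title) VALUES (?)", arrayOf(title))
        val changedRows = db.lastChangedRowCount
        val rowId = db.lastInsertRowId
        db.setTransactionSuccessful()
        return if (changedRows == 1L) rowId else -1L
    } finally {
        db.endTransaction()
    }
}
```

Both row-count and row-ID calls are made inside the same transaction as the INSERT so that they refer to that statement.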

Android Dynamic Performance Framework updates

Android 15 continues our investment in the Android Dynamic Performance Framework (ADPF), a set of APIs that allow games and performance intensive apps to interact more directly with power and thermal systems of Android devices. On supported devices, Android 15 will add new ADPF capabilities:

- A power-efficiency mode for hint sessions to indicate that their associated threads should prefer power saving over performance, great for long-running background workloads.

- GPU and CPU work durations can both be reported in hint sessions, allowing the system to adjust CPU and GPU frequencies together to best meet workload demands.

- Thermal headroom thresholds to interpret possible thermal throttling status based on headroom prediction.

To learn more about how to use ADPF in your apps and games, head over to the documentation.
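The first two capabilities might be used as in the sketch below. It assumes `PerformanceHintManager.Session.setPreferPowerEfficiency(boolean)`, the `WorkDuration` class, and the `reportActualWorkDuration(WorkDuration)` overload from API level 35. The function names and parameters are hypothetical, and the calls are expected to do nothing useful on devices without ADPF support.

```kotlin
import android.content.Context
import android.os.Build
import android.os.PerformanceHintManager
import android.os.Process
import android.os.WorkDuration

// Hypothetical helper: creates a hint session for the calling thread that prefers power saving.
// Returns null on older releases or when the device doesn't support hint sessions.
fun createBackgroundHintSession(
    context: Context,
    targetDurationNanos: Long
): PerformanceHintManager.Session? {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return null

    val manager = context.getSystemService(PerformanceHintManager::class.java)
    val session = manager.createHintSession(intArrayOf(Process.myTid()), targetDurationNanos)
        ?: return null
    session.setPreferPowerEfficiency(true)
    return session
}

// Hypothetical helper: reports CPU and GPU time for one unit of work. Requires API level 35.
fun reportWork(
    session: PerformanceHintManager.Session,
    startNanos: Long,
    totalNanos: Long,
    cpuNanos: Long,
    gpuNanos: Long
) {
    val duration = WorkDuration()
    duration.setWorkPeriodStartTimestampNanos(startNanos)
    duration.setActualTotalDurationNanos(totalNanos)
    duration.setActualCpuDurationNanos(cpuNanos)
    duration.setActualGpuDurationNanos(gpuNanos)
    session.reportActualWorkDuration(duration)
}
```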

Privacy

Android 15 includes a variety of features that help app developers protect user privacy.

Screen recording detection

Android 15 adds support for apps to detect that they are being recorded. A callback is invoked whenever the app transitions between being visible or invisible within a screen recording. An app is considered visible if activities owned by the registering process's UID are being recorded. This way, if your app is performing a sensitive operation, you can inform the user that they're being recorded.

val mCallback = Consumer<Int> { state ->
    if (state == SCREEN_RECORDING_STATE_VISIBLE) {
        // We're being recorded
    } else {
        // We're not being recorded
    }
}

override fun onStart() {
    super.onStart()
    val initialState =
        windowManager.addScreenRecordingCallback(mainExecutor, mCallback)
    mCallback.accept(initialState)
}

override fun onStop() {
    super.onStop()
    windowManager.removeScreenRecordingCallback(mCallback)
}

Expanded IntentFilter capabilities

Android 15 builds in support for more precise Intent resolution through UriRelativeFilterGroup, which contains a set of UriRelativeFilter objects that form a set of Intent matching rules that must each be satisfied, including URL query parameters, URL fragments, and blocking or exclusion rules.

These rules can be defined in the AndroidManifest XML file with the new <uri-relative-filter-group> tag, which can optionally include an android:allow attribute. These tags can contain <data> tags that use existing data tag attributes as well as the new android:query and android:fragment attributes.

Here's an example of the AndroidManifest syntax:

<intent-filter>
    <action android:name="android.intent.action.VIEW" />
    <category android:name="android.intent.category.BROWSABLE" />
    <data android:scheme="http" />
    <data android:scheme="https" />
    <data android:host="astore.com" />
    <uri-relative-filter-group>
        <data android:pathPrefix="/auth" />
        <data android:query="region=na" />
    </uri-relative-filter-group>
    <uri-relative-filter-group android:allow="false">
        <data android:pathPrefix="/auth" />
        <data android:query="mobileoptout=true" />
    </uri-relative-filter-group>
    <uri-relative-filter-group android:allow="false">
        <data android:pathPrefix="/auth" />
        <data android:fragmentPrefix="faq" />
    </uri-relative-filter-group>
</intent-filter>

Privacy Sandbox on Android

Android 15 brings Android Ad Services up to extension level 10, incorporating the latest version of the Privacy Sandbox on Android, part of our work to develop new technologies that improve user privacy and enable effective, personalized advertising experiences for mobile apps. Our privacy sandbox page has more information about the Privacy Sandbox on Android developer preview and beta programs to help you get started.

Health Connect

Android 15 integrates Android 14 extensions 10 around Health Connect by Android, a secure and centralized platform to manage and share app-collected health and fitness data. This update adds support for new data types across fitness, nutrition, and more.

Partial screen sharing

Android 15 supports partial screen sharing so users can share or record just an app window rather than the entire device screen. This feature, first enabled in Android 14 QPR2, includes MediaProjection callbacks that allow your app to customize the partial screen sharing experience. Note that for apps targeting Android 14 (API level 34) or higher, user consent is now required for each MediaProjection capture session.
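The callbacks mentioned above could be handled along these lines. The sketch uses the `MediaProjection.Callback` methods added in API level 34. The class name `SharingCallback` and its lambda parameters are hypothetical.

```kotlin
import android.media.projection.MediaProjection
import android.os.Handler
import android.os.Looper

// Hypothetical callback: forwards partial-screen-sharing events to the app.
class SharingCallback(
    private val onResize: (width: Int, height: Int) -> Unit,
    private val onVisibilityChanged: (isVisible: Boolean) -> Unit,
    private val onStopped: () -> Unit
) : MediaProjection.Callback() {

    // The shared window changed size; the app can resize its virtual display to match.
    override fun onCapturedContentResize(width: Int, height: Int) = onResize(width, height)

    // The shared window was hidden or shown; the app can pause or resume its output.
    override fun onCapturedContentVisibilityChanged(isVisible: Boolean) =
        onVisibilityChanged(isVisible)

    // The projection ended; capture resources should be released.
    override fun onStop() = onStopped()
}

// Registers the callback on the main thread before capture starts.
fun attachSharingCallback(projection: MediaProjection, callback: SharingCallback) {
    projection.registerCallback(callback, Handler(Looper.getMainLooper()))
}
```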

Security

Android 15 helps you enhance your app's security and protect your app's data.

Protect files using fs-verity

Android 15's FileIntegrityManager includes new APIs that tap into the power of the fs-verity feature in the Linux kernel. With fs-verity, files can be protected by custom cryptographic signatures, helping you ensure they haven't been tampered with or corrupted. This leads to enhanced security, protecting against potential malware or unauthorized file modifications that could compromise your app's functionality or data.
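A minimal sketch of enabling fs-verity on a file and reading back its digest follows. It assumes `FileIntegrityManager.setupFsVerity(File)` and `getFsVerityDigest(File)` from API level 35. The function name `protectFile` is hypothetical, and the file is assumed to be fully written and closed before the call.

```kotlin
import android.content.Context
import android.os.Build
import android.security.FileIntegrityManager
import java.io.File
import java.io.IOException

// Hypothetical helper: enables fs-verity on the file and returns its digest,
// or null if the platform is too old or the operation fails.
fun protectFile(context: Context, file: File): ByteArray? {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return null

    val manager = context.getSystemService(FileIntegrityManager::class.java)
    return try {
        // After this call the file content becomes read-only at the filesystem level.
        manager.setupFsVerity(file)
        manager.getFsVerityDigest(file)
    } catch (e: IOException) {
        null
    }
}
```

An app could store the returned digest alongside a signature and compare it later to confirm the file is still the one it verified.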

User experience

Android 15 gives app developers and users more control and flexibility for configuring their device to fit their needs.

Improved Do Not Disturb rules

AutomaticZenRule lets apps customize Attention Management (Do Not Disturb) rules and decide when to activate or deactivate them. Android 15 greatly enhances these rules with the goal of improving the user experience. The following enhancements are included:

- Adding types to AutomaticZenRule, allowing the system to apply special treatment to some rules.

- Adding an icon to AutomaticZenRule, helping make the modes more recognizable.

- Adding a triggerDescription string to AutomaticZenRule that describes the conditions on which the rule should become active for the user.

- Adding ZenDeviceEffects to AutomaticZenRule, allowing rules to trigger things like grayscale display, night mode, or dimming the wallpaper.
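The enhancements listed above might be combined as in the following sketch. It assumes the `AutomaticZenRule.Builder` and `ZenDeviceEffects.Builder` setters from API level 35 and that the app already has notification policy access. The rule name, the condition URI, the trigger text, and the function name `addBedtimeRule` are all illustrative.

```kotlin
import android.app.AutomaticZenRule
import android.app.NotificationManager
import android.content.ComponentName
import android.content.Context
import android.net.Uri
import android.os.Build
import android.service.notification.ZenDeviceEffects

// Hypothetical helper: registers a bedtime rule and returns its ID, or null on older releases.
fun addBedtimeRule(context: Context, configActivity: ComponentName, iconResId: Int): String? {
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.VANILLA_ICE_CREAM) return null

    // Device effects applied while the rule is active.
    val effects = ZenDeviceEffects.Builder()
        .setShouldDisplayGrayscale(true)
        .setShouldDimWallpaper(true)
        .build()

    val rule = AutomaticZenRule.Builder("Bedtime", Uri.parse("condition://example/bedtime"))
        .setType(AutomaticZenRule.TYPE_BEDTIME)
        .setIconResId(iconResId)
        .setTriggerDescription("Every night from 10 PM")
        .setDeviceEffects(effects)
        .setConfigurationActivity(configActivity)
        .build()

    return context.getSystemService(NotificationManager::class.java).addAutomaticZenRule(rule)
}
```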